
25 Top MLOps Tools You Need to Know in 2024

As we explore in our article on Getting Started with MLOps, MLOps is built on the fundamentals of DevOps, the software development strategy to efficiently write, deploy, and run enterprise applications.

It is an approach to managing machine learning projects at scale. MLOps enhances collaboration between development, operations, and data science teams. As a result, you get faster model deployment, improved team productivity, reduced risk and cost, and continuous model monitoring in production.

Learn why MLOps is important and what problems it aims to solve by reading our blog on The Past, Present, and Future of MLOps.

In this post, we are going to learn about the best MLOps tools for model development, deployment, and monitoring to standardize, simplify, and streamline the machine learning ecosystem. To get a thorough introduction to the MLOps Fundamentals, check out our Skill Track.

Large Language Models (LLMs) Framework

With the introduction of GPT-3.5, the race has begun to produce large language models and realize the full potential of modern AI. LLMs require vector databases and integration frameworks for building intelligent AI applications.

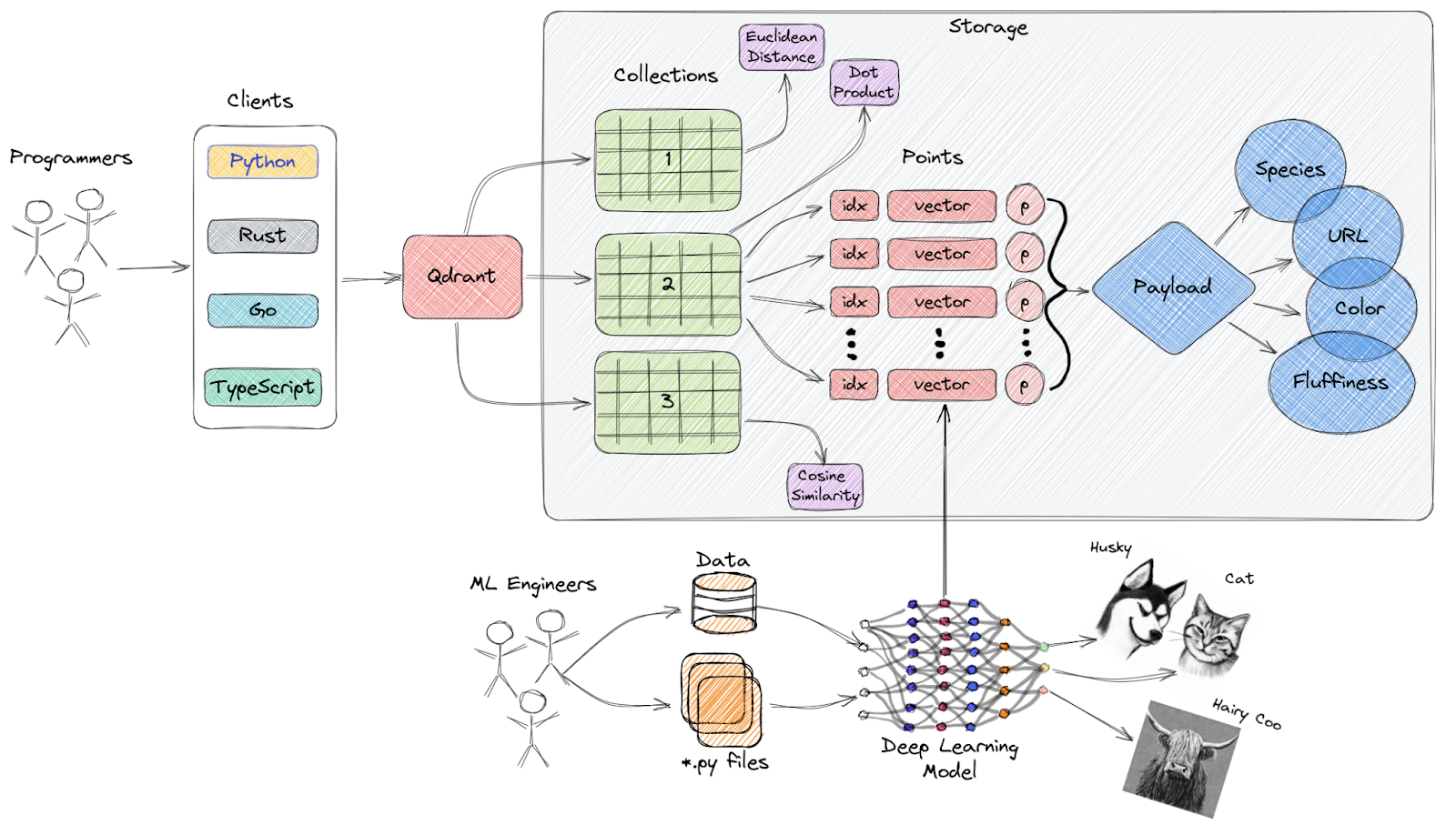

1. Qdrant

Qdrant is an open-source vector similarity search engine and vector database that provides a production-ready service with a convenient API, allowing you to store, search, and manage vector embeddings.

High-Level Overview of Qdrant’s Architecture

Key features:

- Easy-to-Use API: It provides an easy-to-use Python API and also allows developers to generate client libraries in multiple programming languages.

- Fast and Accurate: It uses a unique custom modification of the HNSW algorithm for Approximate Nearest Neighbor Search, providing state-of-the-art search speeds without compromising on accuracy.

- Rich Data Types: Qdrant supports a wide variety of data types and query conditions, including string matching, numerical ranges, geo-locations, and more.

- Distributed: It is cloud-native and can scale horizontally, allowing developers to use just the right amount of computational resources for any amount of data they need to serve.

- Efficient: Qdrant is developed entirely in Rust, a language known for its performance and resource efficiency.
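To make the idea of similarity search with payload filtering concrete, here is a minimal sketch in plain Python. It is not Qdrant's API: it scans every point by brute force instead of using an HNSW index, and `Point`, `cosine_similarity`, and `search` are hypothetical names chosen for illustration.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Point:
    """A stored vector plus a metadata payload (hypothetical structure)."""
    id: int
    vector: list[float]
    payload: dict = field(default_factory=dict)


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(points, query, limit=3, must_match=None):
    """Return the `limit` most similar points whose payload matches every key in `must_match`."""
    must_match = must_match or {}
    candidates = [
        p for p in points
        if all(p.payload.get(k) == v for k, v in must_match.items())
    ]
    # Brute-force scoring: an assumption made for clarity, not how an ANN index works.
    scored = [(cosine_similarity(p.vector, query), p) for p in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:limit]


points = [
    Point(1, [0.9, 0.1, 0.0], {"city": "Berlin"}),
    Point(2, [0.1, 0.9, 0.0], {"city": "London"}),
    Point(3, [0.8, 0.2, 0.1], {"city": "London"}),
]

# Nearest neighbours of the query, restricted to points tagged "London".
hits = search(points, query=[1.0, 0.0, 0.0], limit=2, must_match={"city": "London"})
for score, point in hits:
    print(point.id, round(score, 3))
```

A vector database replaces the linear scan with an approximate index so that the same kind of query stays fast over millions of vectors.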

Discover the top vector databases by reading The 5 Best Vector Databases | A List With Examples.

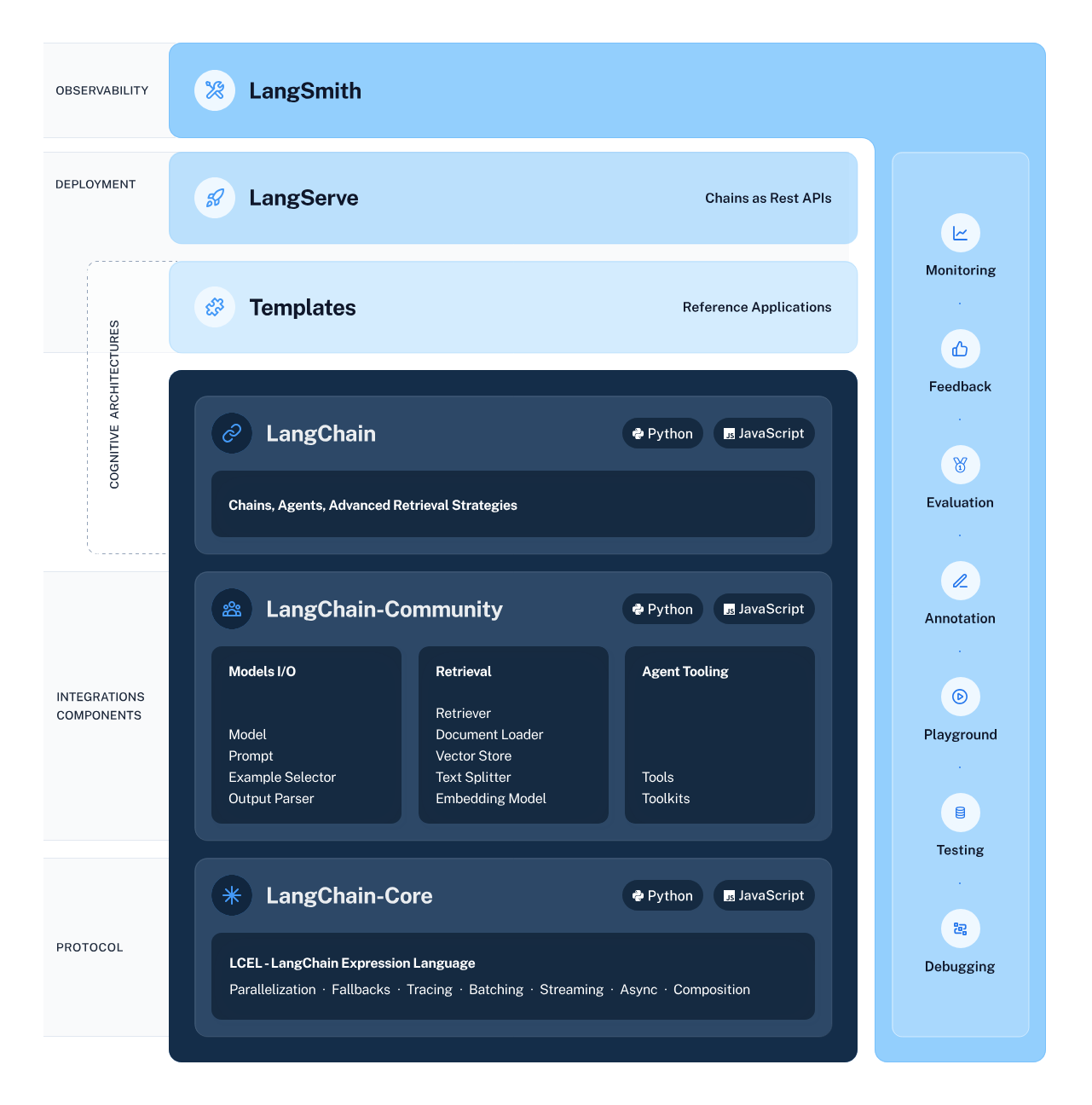

2. LangChain

LangChain is a versatile and powerful framework for developing applications powered by language models. It offers several components to enable developers to build, deploy, and monitor context-aware and reasoning-based applications.

The framework consists of 4 main components:

- LangChain Libraries: The Python and JavaScript libraries offer interfaces and integrations that allow you to develop context-aware reasoning applications.

- LangChain Templates: This collection of easily deployable reference architectures covers a wide variety of tasks, providing developers with pre-built solutions.

- LangServe: This library enables developers to deploy LangChain chains as a REST API.

- LangSmith: A platform that enables you to debug, test, evaluate, and monitor chains built on any LLM framework.

LangChain Ecosystem

Learn How to Build LLM Applications with LangChain and explore the untapped potential of large language models.

Experiment Tracking and Model Metadata Management Tools

These tools allow you to manage model metadata and help with experiment tracking:

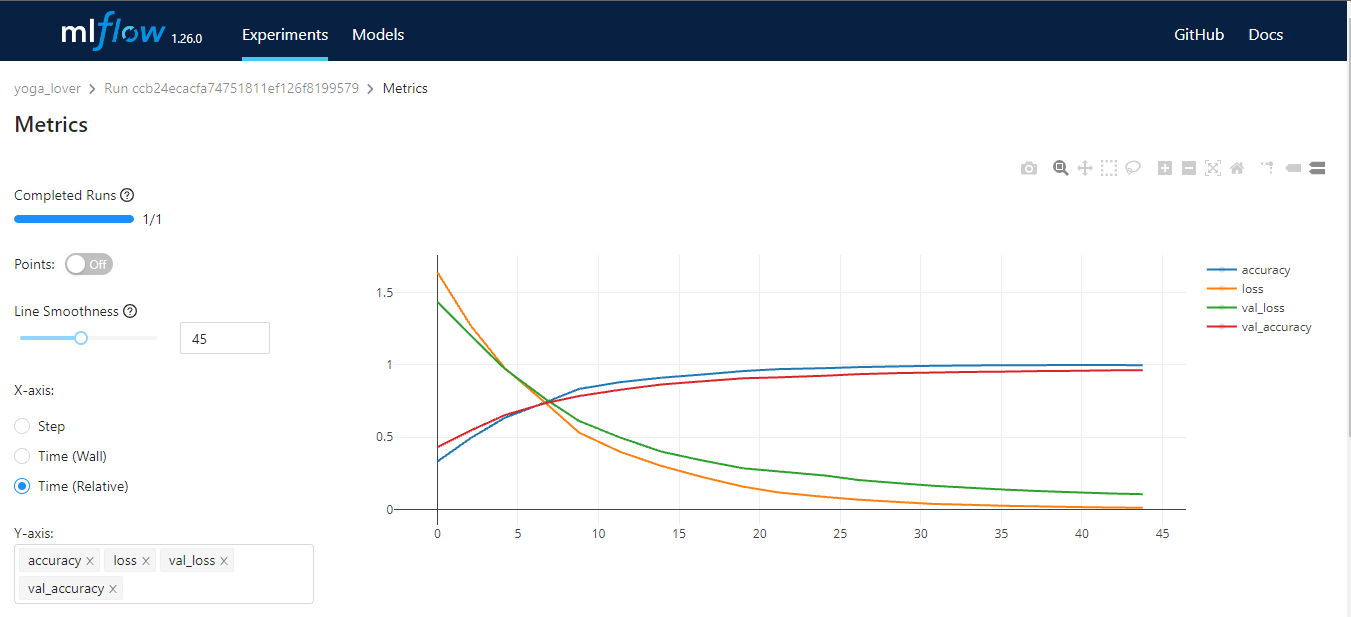

3. MLflow

MLflow is an open-source tool that helps you manage core parts of the machine learning lifecycle. It is generally used for experiment tracking, but you can also use it for reproducibility, deployment, and model registry. You can manage the machine learning experiments and model metadata by using CLI, Python, R, Java, and REST API.

MLflow has four core functions:

- MLflow Tracking: storing and accessing code, data, configuration, and results.

- MLflow Projects: packaging data science code for reproducibility.

- MLflow Models: deploying and managing machine learning models to various serving environments.

- MLflow Model Registry: a central model store that provides versioning, stage transitions, annotations, and management of machine learning models.
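Experiment tracking comes down to recording the parameters and metrics of each run and then comparing runs. The sketch below shows that idea with an in-memory store in plain Python. `Run`, `Tracker`, `log_run`, and `best_run` are hypothetical names, not MLflow's API.

```python
from dataclasses import dataclass, field


@dataclass
class Run:
    """One training run: its hyperparameters and the metrics it produced."""
    name: str
    params: dict = field(default_factory=dict)
    metrics: dict = field(default_factory=dict)


class Tracker:
    """In-memory stand-in for a tracking server (illustrative only)."""

    def __init__(self):
        self.runs = []

    def log_run(self, name, params, metrics):
        run = Run(name, dict(params), dict(metrics))
        self.runs.append(run)
        return run

    def best_run(self, metric, higher_is_better=True):
        """Return the run with the best value for `metric`, ignoring runs that lack it."""
        scored = [r for r in self.runs if metric in r.metrics]
        if not scored:
            return None
        pick = max if higher_is_better else min
        return pick(scored, key=lambda r: r.metrics[metric])


tracker = Tracker()
tracker.log_run("baseline", {"max_depth": 3}, {"accuracy": 0.91})
tracker.log_run("deeper", {"max_depth": 6}, {"accuracy": 0.94})
tracker.log_run("too-deep", {"max_depth": 12}, {"accuracy": 0.89})

best = tracker.best_run("accuracy")
print(best.name, best.params, best.metrics)
```

A real tracking tool adds persistence, a UI, and artifact storage on top of this basic record-and-compare loop.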

Image by Author

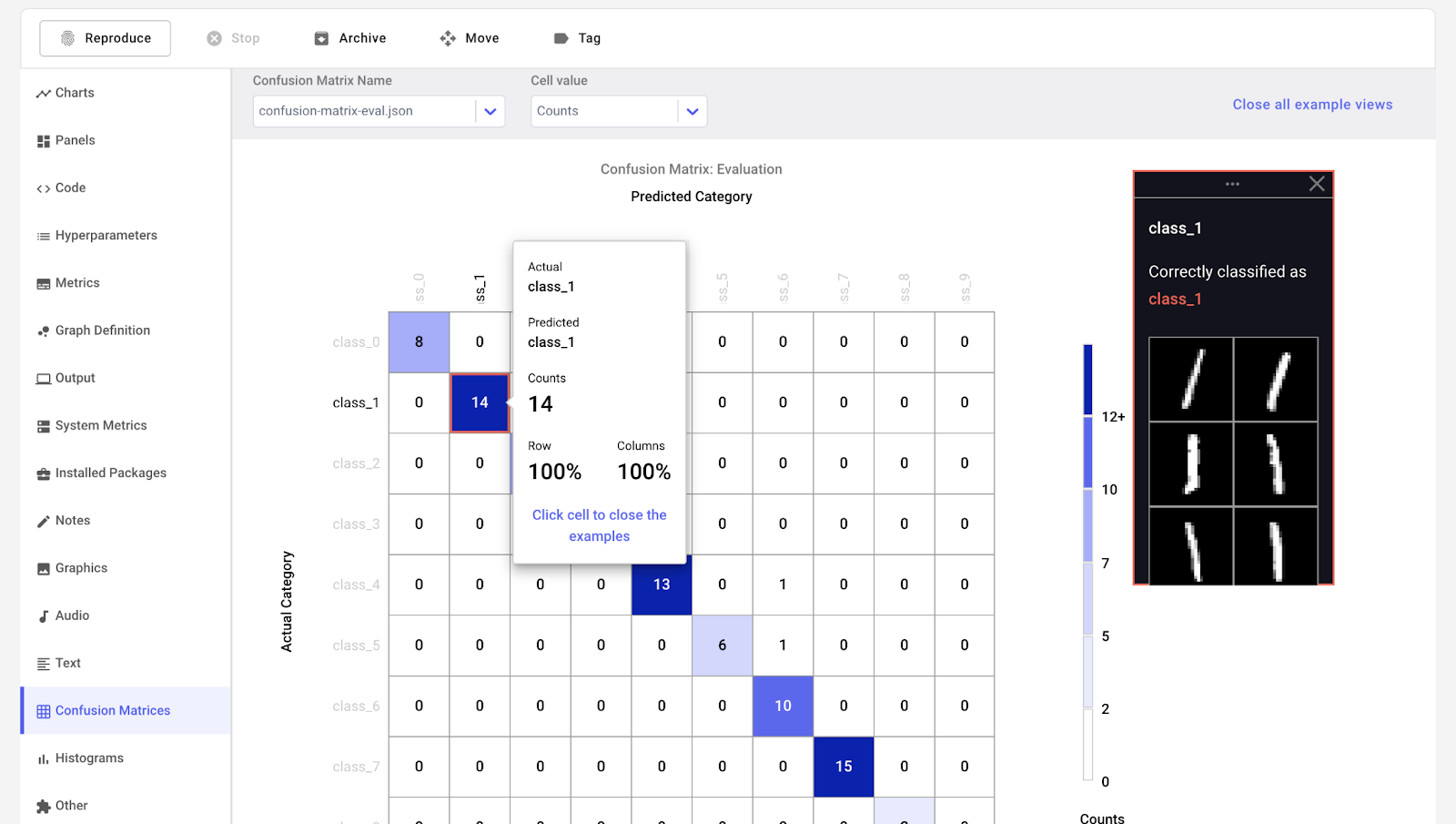

4. Comet ML

Comet ML is a platform for tracking, comparing, explaining, and optimizing machine learning models and experiments. You can use it with any machine learning library, such as Scikit-learn, PyTorch, TensorFlow, and Hugging Face.

Comet ML is for individuals, teams, enterprises, and academics. It allows anyone to easily visualize and compare the experiments. Furthermore, it enables you to visualize samples from images, audio, text, and tabular data.

Image from Comet ML

5. Weights & Biases

Weights & Biases is an ML platform for experiment tracking, data and model versioning, hyperparameter optimization, and model management. Furthermore, you can use it to log artifacts (datasets, models, dependencies, pipelines, and results) and visualize the datasets (audio, visual, text, and tabular).

Weights & Biases has a user-friendly central dashboard for machine learning experiments. Like Comet ML, it integrates with other machine learning libraries, such as Fastai, Keras, PyTorch, Hugging Face, YOLOv5, spaCy, and many more. You can check out our introduction to Weights & Biases in a separate article.

Gif from Weights & Biases

Note: You can also use TensorBoard, Pachyderm, DagsHub, and DVC Studio for experiment tracking and ML metadata management.

Orchestration and Workflow Pipelines MLOps Tools

These tools help you create data science projects and manage machine learning workflows:

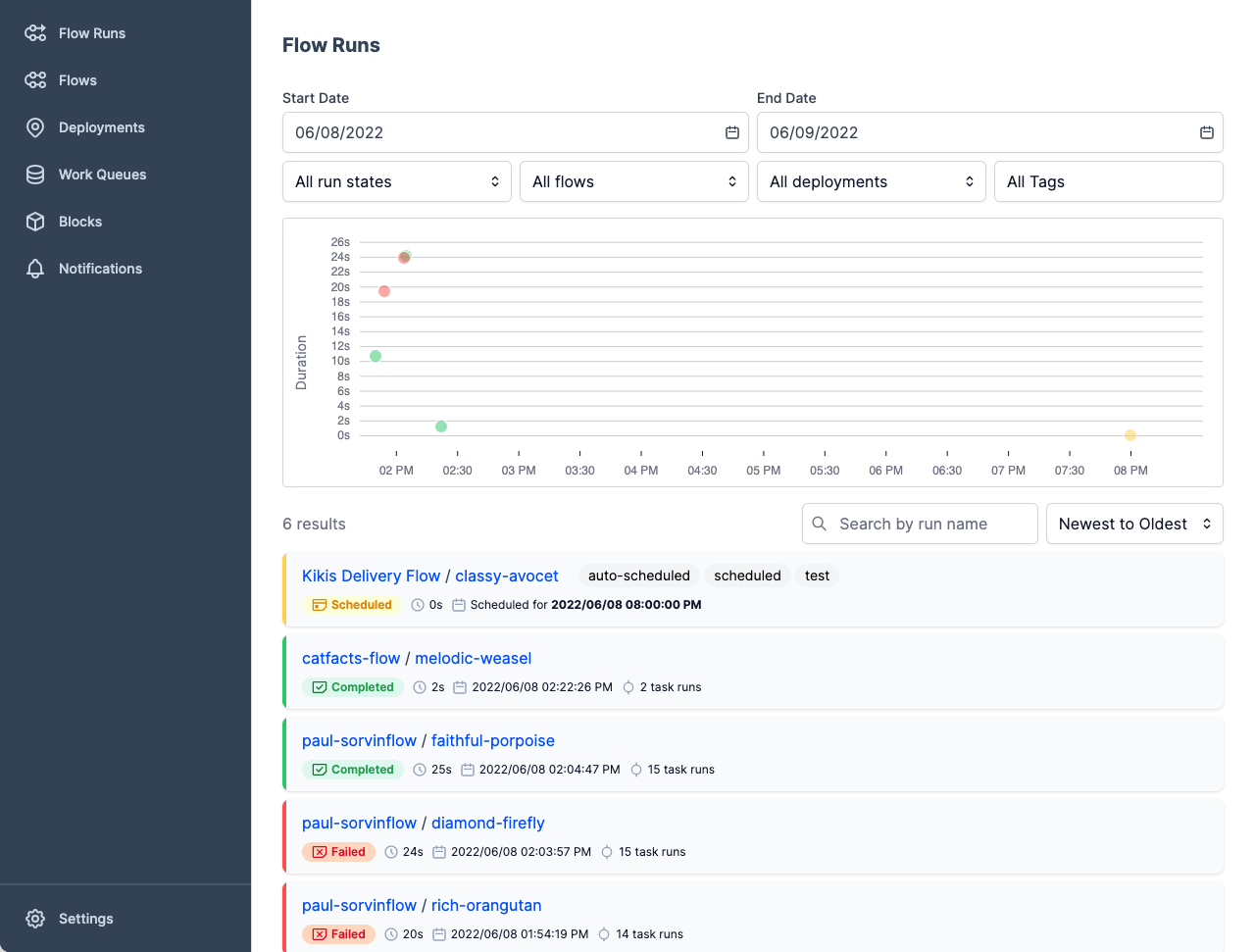

6. Prefect

Prefect is a modern tool for monitoring, coordinating, and orchestrating workflows between and across applications. It is open-source, lightweight, and built for end-to-end machine learning pipelines.

You can either use Prefect Orion UI or Prefect Cloud for the databases.

Prefect Orion UI is an open-source, locally hosted orchestration engine and API server. It provides you insights into the local Prefect Orion instance and the workflows.

Prefect Cloud is a hosted service for you to visualize flows, flow runs, and deployments. Furthermore, you can manage accounts, workspace, and team collaboration.

Image from Prefect

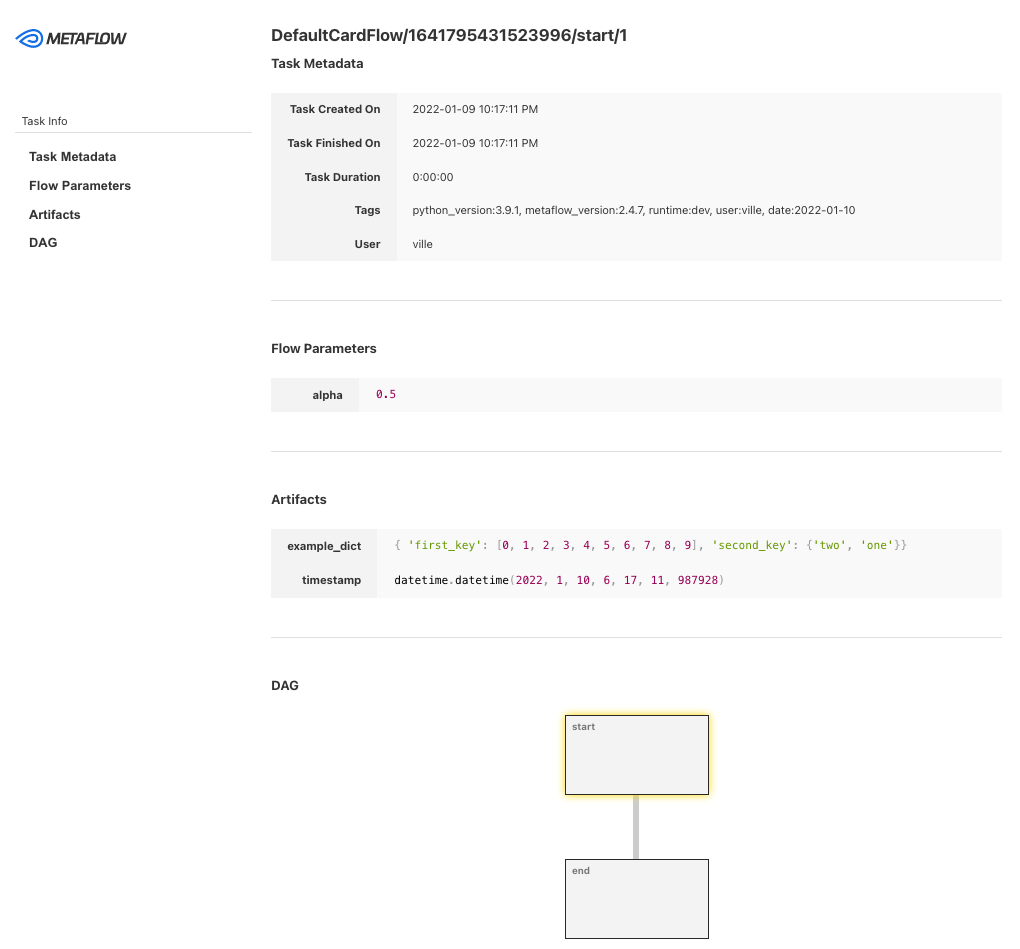

7. Metaflow

Metaflow is a powerful, battle-hardened workflow management tool for data science and machine learning projects. It was built for data scientists so they can focus on building models instead of worrying about MLOps engineering.

With Metaflow, you can design a workflow, run it at scale, and deploy the model to production. It tracks and versions machine learning experiments and data automatically. Furthermore, you can visualize the results in a notebook.

Metaflow works with multiple clouds (including AWS, GCP, and Azure) and various machine learning Python packages (like Scikit-learn and TensorFlow), and the API is available for the R language too.

Image from Metaflow

8. Kedro

Kedro is a workflow orchestration tool based on Python. You can use it for creating reproducible, maintainable, and modular data science projects. It integrates the concepts from software engineering into machine learning, such as modularity, separation of concerns, and versioning.

With Kedro, you can:

- Set up dependencies and configuration.

- Set up data.

- Create, visualize, and run the pipelines.

- Set up logging and experiment tracking.

- Deploy on a single or distributed machine.

- Create maintainable data science code.

- Create modular, reusable code.

- Collaborate with teammates on projects.
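Orchestration tools like the ones in this section model a workflow as a graph of steps with dependencies and run each step only after the steps it depends on. Here is a minimal sketch of that idea using the standard library's `graphlib`. The step names and functions are made up for illustration, and none of this is the Prefect, Metaflow, or Kedro API.

```python
from graphlib import TopologicalSorter


def load_data(_):
    return [3.0, 1.0, 2.0]


def clean_data(results):
    return sorted(results["load_data"])


def train_model(results):
    data = results["clean_data"]
    return {"mean": sum(data) / len(data)}


def report(results):
    return f"model mean = {results['train_model']['mean']:.1f}"


# Each step maps to (function, names of the steps it depends on).
steps = {
    "load_data": (load_data, []),
    "clean_data": (clean_data, ["load_data"]),
    "train_model": (train_model, ["clean_data"]),
    "report": (report, ["train_model"]),
}


def run_pipeline(steps):
    """Run every step after its dependencies and return (execution order, results)."""
    graph = {name: set(deps) for name, (_, deps) in steps.items()}
    order = list(TopologicalSorter(graph).static_order())
    results = {}
    for name in order:
        func, _ = steps[name]
        # Every step receives the outputs of all steps that have already run.
        results[name] = func(results)
    return order, results


order, results = run_pipeline(steps)
print(order)
print(results["report"])
```

Declaring dependencies explicitly is what lets a tool visualize the pipeline, rerun only the affected steps, and distribute independent steps across machines.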

Gif from Kedro

Note: You can also use Kubeflow and DVC for orchestration and workflow pipelines.

Data and Pipeline Versioning Tools

With these MLOps tools, you can manage tasks around data and pipeline versioning:

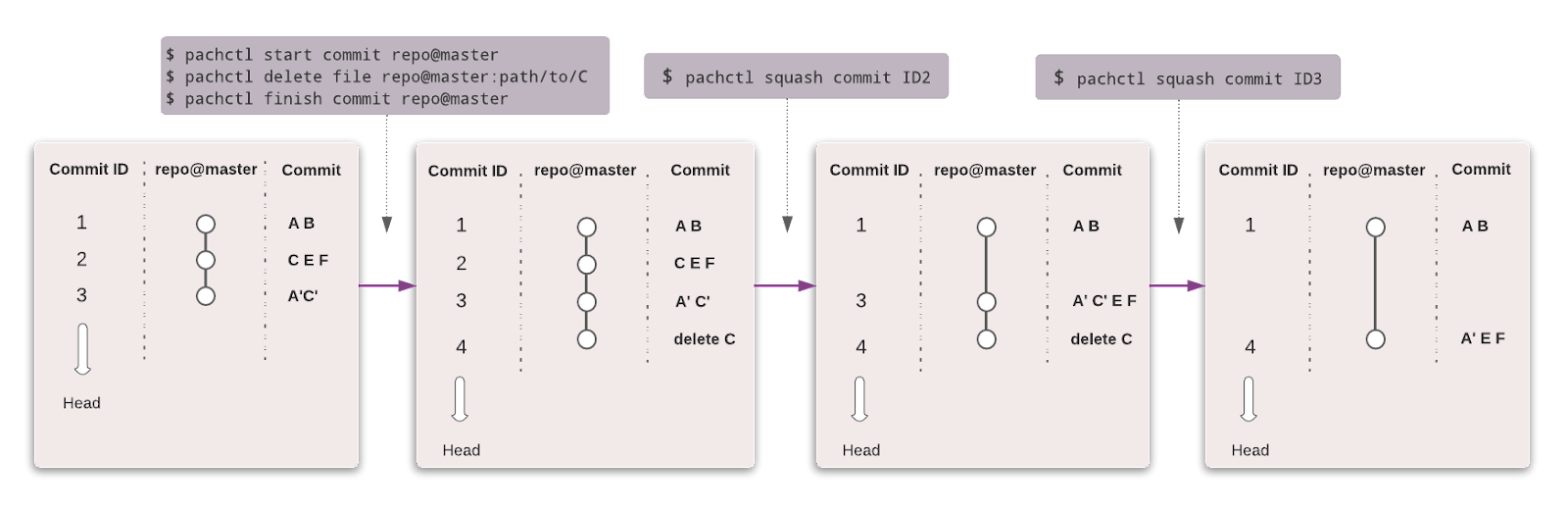

9. Pachyderm

Pachyderm automates data transformation with data versioning, lineage, and end-to-end pipelines on Kubernetes. You can integrate with any data (Images, logs, video, CSVs), any language (Python, R, SQL, C/C++), and at any scale (Petabytes of data, thousands of jobs).

The community edition is open-source and for a small team. Organizations and teams who want advanced features can opt for the Enterprise edition.

Just like Git, you can version your data using a similar syntax. In Pachyderm, the highest level of the object is Repository, and you can use Commit, Branches, File, History, and Provenance to track and version the dataset.

Image from Pachyderm

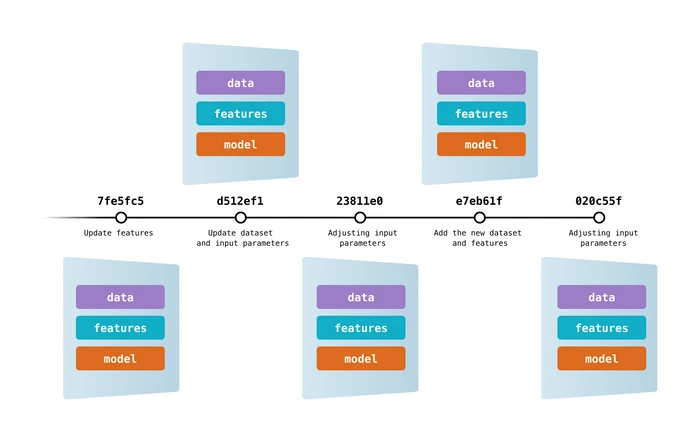

10. Data Version Control (DVC)

Data Version Control is an open-source and popular tool for machine learning projects. It works seamlessly with Git to provide you with code, data, model, metadata, and pipeline versioning.

DVC is more than just a data tracking and versioning tool.

You can use it for:

- Experiment tracking (model metrics, parameters, versioning).

- Create, visualize, and run machine learning pipelines.

- Workflow for deployment and collaboration.

- Reproducibility.

- Data and model registry.

- Continuous integration and deployment for machine learning using CML.
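Data versioning tools commonly identify a dataset version by a hash of its content, so that Git only needs to track a small pointer rather than the data itself. The sketch below illustrates that idea with an in-memory store. `DataStore`, `commit`, and `checkout` are hypothetical names, and the choice of SHA-256 is an assumption made for the example; a given tool may use a different hash.

```python
import hashlib


class DataStore:
    """Content-addressed store: identical bytes are stored once (illustrative only)."""

    def __init__(self):
        self.objects = {}   # content hash -> bytes
        self.history = []   # (label, content hash), oldest first

    def commit(self, label: str, data: bytes) -> str:
        # SHA-256 is an assumption for this sketch, not a claim about any tool.
        digest = hashlib.sha256(data).hexdigest()
        self.objects.setdefault(digest, data)
        self.history.append((label, digest))
        return digest

    def checkout(self, digest: str) -> bytes:
        return self.objects[digest]


store = DataStore()
v1 = store.commit("initial data", b"id,label\n1,cat\n2,dog\n")
v2 = store.commit("add a row", b"id,label\n1,cat\n2,dog\n3,bird\n")
v3 = store.commit("revert to initial", b"id,label\n1,cat\n2,dog\n")

print(v1 == v3)            # identical content yields the same version id
print(len(store.objects))  # number of distinct blobs actually stored
```

Because the version id is derived from the content, reverting to an earlier dataset does not duplicate it in storage.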

Image from DVC

Note: DagsHub can also be used for data and pipeline versioning.

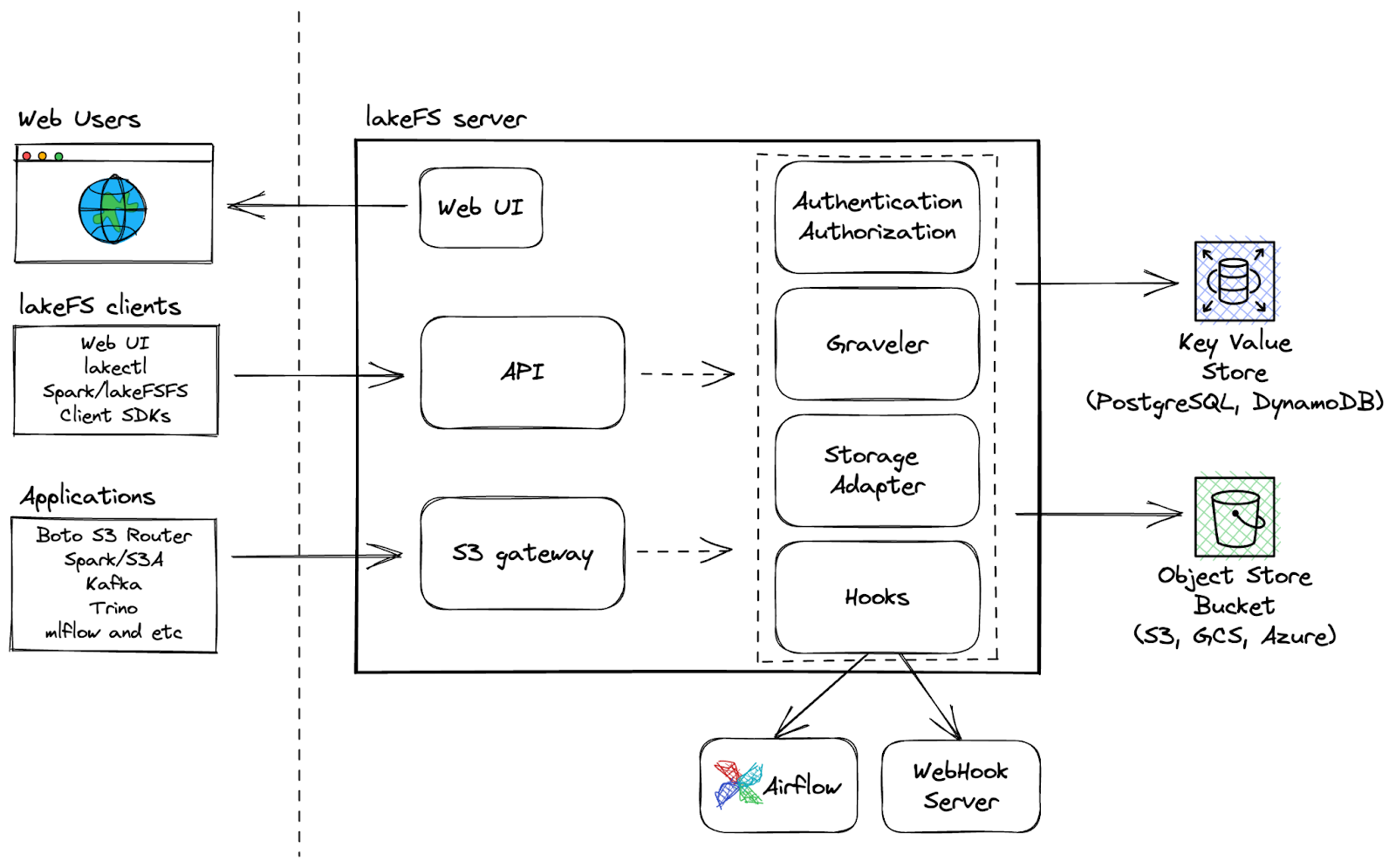

11. LakeFS

LakeFS is an open-source scalable data version control tool that provides a Git-like version control interface for object storage, enabling users to manage their data lakes as they would their code. With LakeFS, users can version control data at exabyte scale, making it a highly scalable solution for managing large data lakes.

Additional capabilities:

- Perform Git operations like branch, commit, and merge over any storage service

- Faster development with zero copy branching for frictionless experimentation and easy collaboration

- Use pre-commit and merge hooks for CI/CD workflows to ensure clean workflows

- Resilient platform allows for faster recovery from data issues with revert capability.
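Zero-copy branching and revert can be pictured as pointer updates over immutable snapshots. The toy model below is a sketch under that assumption, not LakeFS's implementation or API. `Repo` and its methods are hypothetical, and the object keys are made-up strings standing in for files in object storage.

```python
class Repo:
    """Toy model of Git-like branching over immutable snapshots (illustrative only)."""

    def __init__(self):
        self.commits = {0: {}}        # commit id -> {path: object key}
        self.branches = {"main": 0}   # branch name -> commit id
        self._next_id = 1

    def branch(self, name, source="main"):
        # Zero-copy: the new branch is only a pointer to an existing commit.
        self.branches[name] = self.branches[source]

    def commit(self, branch, changes):
        # Copies the path-to-object mapping, never the objects themselves.
        snapshot = dict(self.commits[self.branches[branch]])
        snapshot.update(changes)
        commit_id = self._next_id
        self._next_id += 1
        self.commits[commit_id] = snapshot
        self.branches[branch] = commit_id
        return commit_id

    def revert(self, branch, commit_id):
        # Recovery is a pointer move back to a known-good snapshot.
        self.branches[branch] = commit_id

    def read(self, branch):
        return self.commits[self.branches[branch]]


repo = Repo()
first = repo.commit("main", {"sales.parquet": "obj-a1"})
repo.branch("experiment")
repo.commit("experiment", {"sales.parquet": "obj-b2"})

print(repo.read("main"))
print(repo.read("experiment"))
```

Changes on the `experiment` branch never touch what `main` sees, which is what makes isolated experimentation on large data lakes cheap.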

LakeFS Architecture

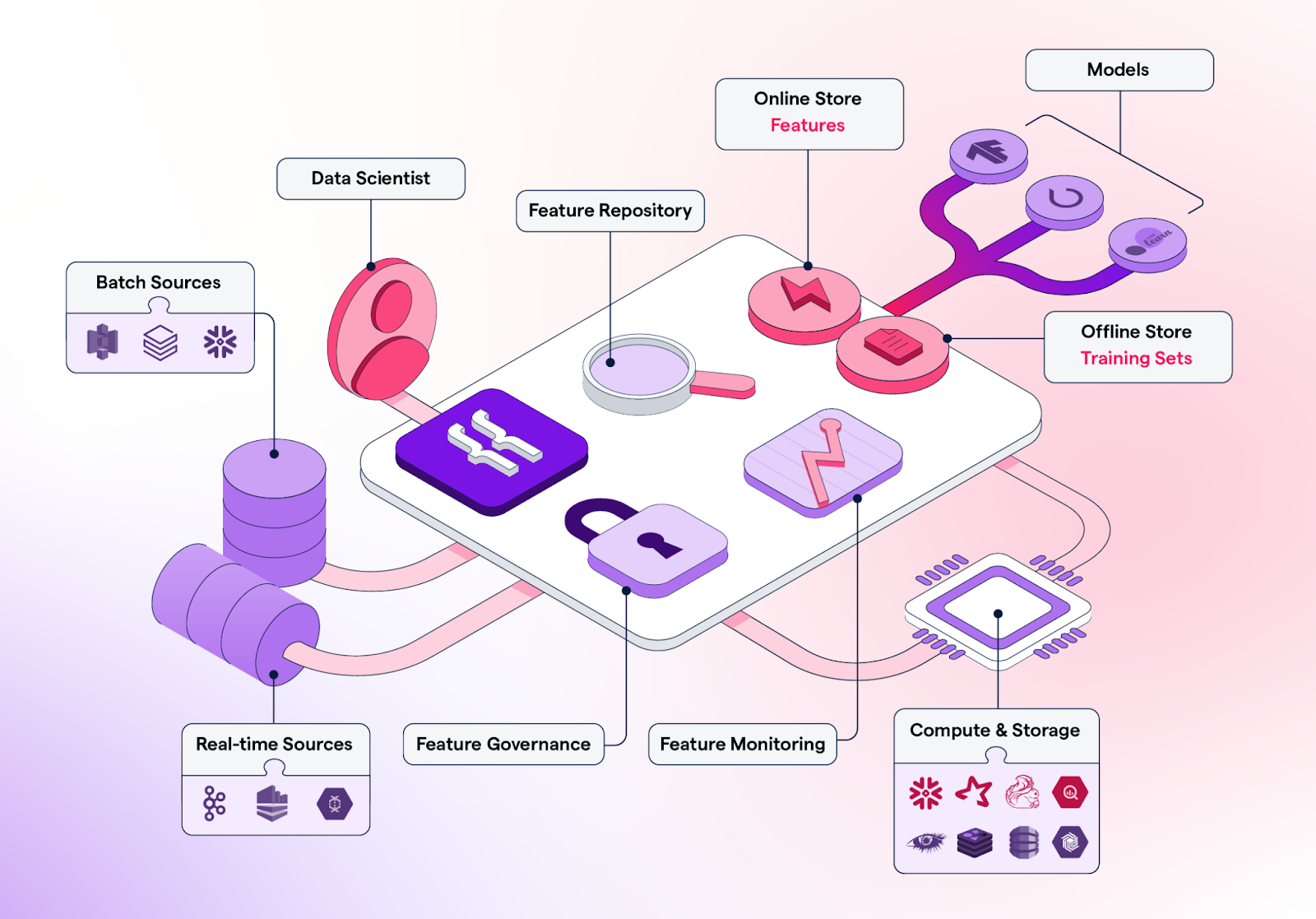

Feature Stores

Feature stores are centralized repositories for storing, versioning, managing, and serving features (processed data attributes used for training machine learning models) for machine learning models in production as well as for training purposes.

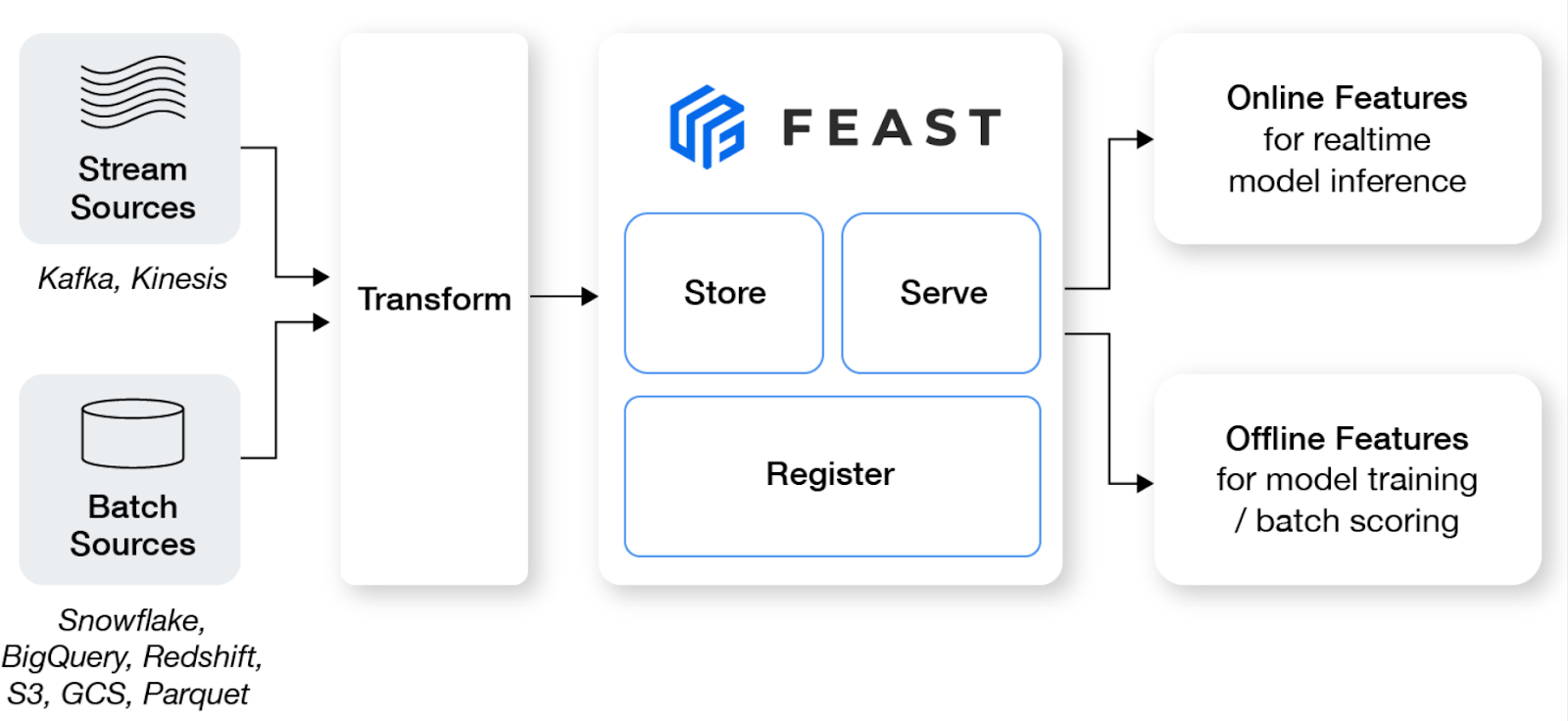

12. Feast

Feast is an open-source feature store that helps machine learning teams productionize real-time models and build a feature platform that promotes collaboration between engineers and data scientists.

Key features:

- Manage an offline store, a low-latency online store, and a feature server to ensure consistent availability of features for both training and serving purposes.

- Avoid data leakage by creating accurate point-in-time feature sets, freeing data scientists from dealing with error-prone dataset joining.

- Decouple ML from data infrastructure by having a single access layer.
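Point-in-time correctness means that each training row only sees feature values that were known at the time of its label event. The sketch below shows the lookup in plain Python. It is not Feast's API; `point_in_time_value` and the sample data are hypothetical.

```python
from bisect import bisect_right


def point_in_time_value(feature_rows, event_time):
    """Return the latest feature value recorded at or before `event_time`.

    `feature_rows` is a list of (timestamp, value) pairs sorted by timestamp.
    """
    timestamps = [ts for ts, _ in feature_rows]
    idx = bisect_right(timestamps, event_time)
    if idx == 0:
        return None  # no feature value was known yet
    return feature_rows[idx - 1][1]


# Hypothetical feature history for one customer: (day, purchases so far).
purchases_so_far = [(1, 0), (5, 2), (9, 7)]

# Label events for the same customer: (day, churned?).
label_events = [(0, False), (5, False), (7, True)]

# Join each label with the feature value that was valid on that day.
training_rows = [
    (day, point_in_time_value(purchases_so_far, day), churned)
    for day, churned in label_events
]
print(training_rows)
```

The value recorded on day 9 never reaches the day 7 row, which is exactly the kind of leakage a naive "latest value" join would introduce.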

Image from Feast

13. Featureform

Featureform is a virtual feature store that enables data scientists to define, manage, and serve their ML model's features. It can help data science teams to enhance collaboration, organize experimentation, facilitate deployment, increase reliability, and preserve compliance.

Key features:

- Enhance collaboration by sharing, reusing, and understanding features across the team.

- When your feature is ready to be deployed, Featureform will orchestrate your data infrastructure to make it ready for production.

- The system ensures that no features, labels, or training sets can be modified to enhance reliability.

- With built-in role-based access control, audit logs, and dynamic serving rules, Featureform can directly enforce your compliance logic.

Image from Featureform

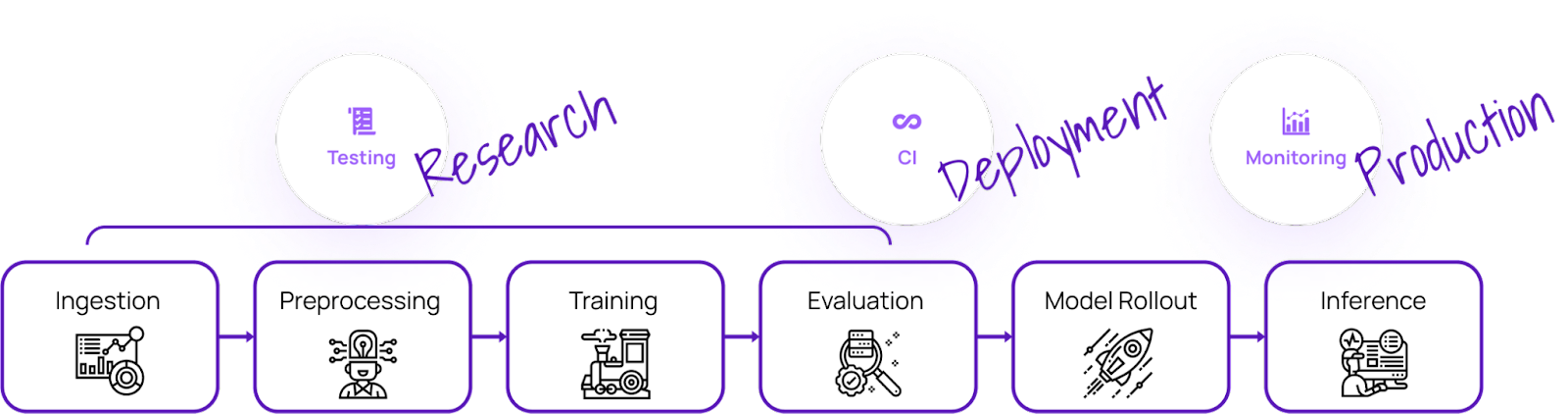

Model Testing

With these MLOps tools, you can test model quality and ensure machine learning models' reliability, robustness, and accuracy:

14. Deepchecks ML Models Testing

Deepchecks is an open-source solution that caters to all your ML validation needs, ensuring that your data and models are thoroughly tested from research to production. It offers a holistic approach to validate your data and models through its various components.

Image from Deepchecks

Deepchecks consists of three components:

- Deepchecks Testing: lets you build custom checks and suites for tabular, natural language processing, and computer vision validation.

- CI & Testing Management: provides CI & Testing Management to help you collaborate with your team and manage test results effectively.

- Deepchecks Monitoring: tracks and validates models in production.
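The idea of grouping individual checks into a suite can be sketched in a few lines of plain Python. This is not the Deepchecks API: the check functions, `run_suite`, and the sample rows are hypothetical, and each check simply returns a pass flag and a short detail message.

```python
def check_no_missing_values(train, test):
    """Fail if any cell in either split is None."""
    rows = train + test
    missing = sum(1 for row in rows for value in row.values() if value is None)
    return missing == 0, f"{missing} missing value(s)"


def check_no_train_test_overlap(train, test):
    """Fail if a test row is an exact duplicate of a training row."""
    train_keys = {tuple(sorted(row.items())) for row in train}
    overlap = sum(1 for row in test if tuple(sorted(row.items())) in train_keys)
    return overlap == 0, f"{overlap} test row(s) also appear in train"


def run_suite(checks, train, test):
    """Run every check and collect (name, passed, detail) results."""
    results = []
    for check in checks:
        passed, detail = check(train, test)
        results.append((check.__name__, passed, detail))
    return results


train = [{"age": 31, "income": 52000}, {"age": 45, "income": None}]
test = [{"age": 31, "income": 52000}, {"age": 28, "income": 41000}]

results = run_suite([check_no_missing_values, check_no_train_test_overlap], train, test)
for name, passed, detail in results:
    print("PASS" if passed else "FAIL", name, "-", detail)
```

Running the same suite in research, in CI, and against production data is what keeps validation consistent across the model lifecycle.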

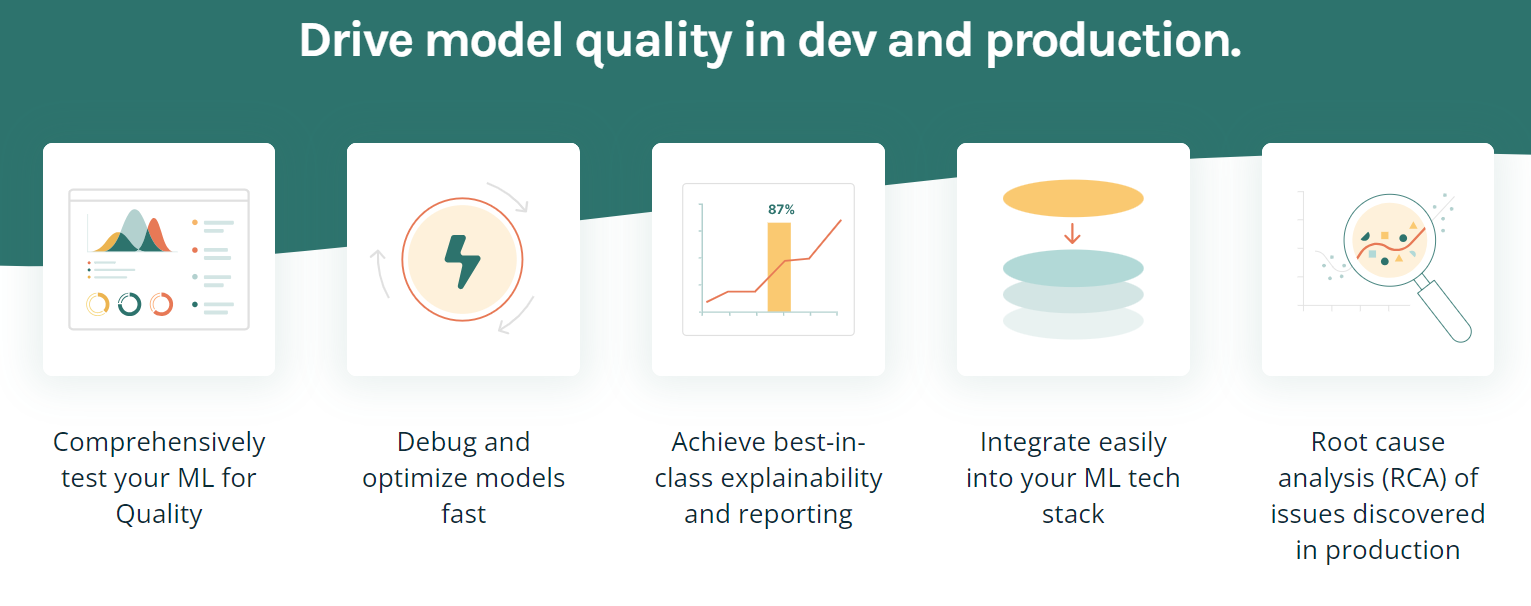

15. TruEra

TruEra is an advanced platform designed to drive model quality and performance through automated testing, explainability, and root cause analysis. It offers various features to help optimize and debug models, achieve best-in-class explainability, and integrate easily into your ML tech stack.

Key features:

- The model testing and debugging feature allows you to improve model quality during development and production.

- It can perform automated and systematic testing to ensure performance, stability, and fairness.

- It understands the evolution of model versions. This allows you to extract insights that guide faster and more effective model development.

- Identify and pinpoint which specific features are contributing to model bias.

- TruEra can be easily integrated into your current infrastructure and workflow without any hassle.

Image by TruEra

Model Deployment and Serving Tools

When it comes to deploying models, these MLOps tools can be hugely helpful:

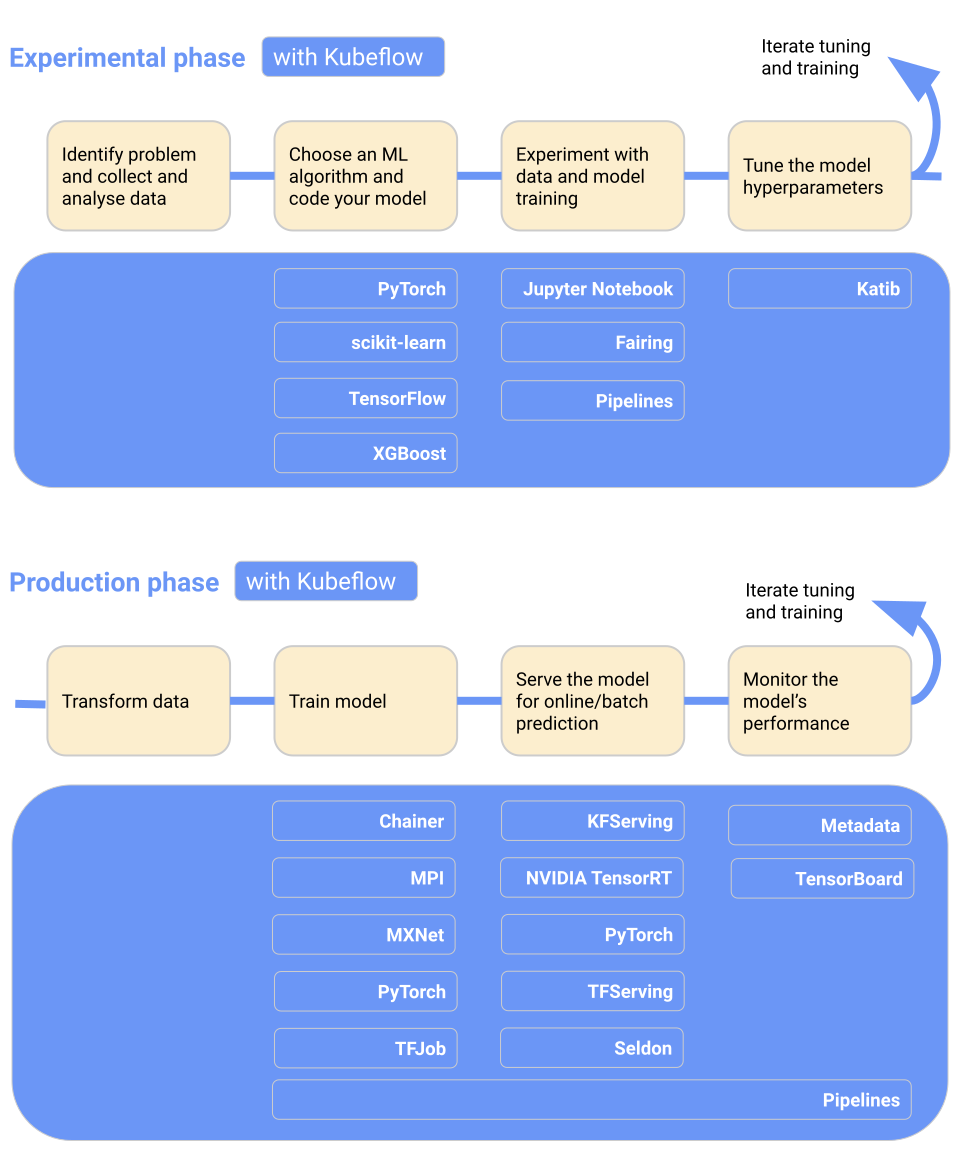

16. Kubeflow

Kubeflow makes machine learning model deployment on Kubernetes simple, portable, and scalable. You can use it for data preparation, model training, model optimization, prediction serving, and monitoring model performance in production. You can deploy machine learning workflows locally, on-premises, or to the cloud. In short, it makes Kubernetes easy for data science teams.

Key features:

- Centralized dashboard with interactive UI.

- Machine learning pipelines for reproducibility and streamlining.

- Provides native support for JupyterLab, RStudio, and Visual Studio Code.

- Hyperparameter tuning and neural architecture search.

- Training jobs for TensorFlow, PyTorch, PaddlePaddle, MXNet, and XGBoost.

- Job scheduling.

- Provide administrators with multi-user isolation.

- Works with all of the major cloud providers.

Image from Kubeflow

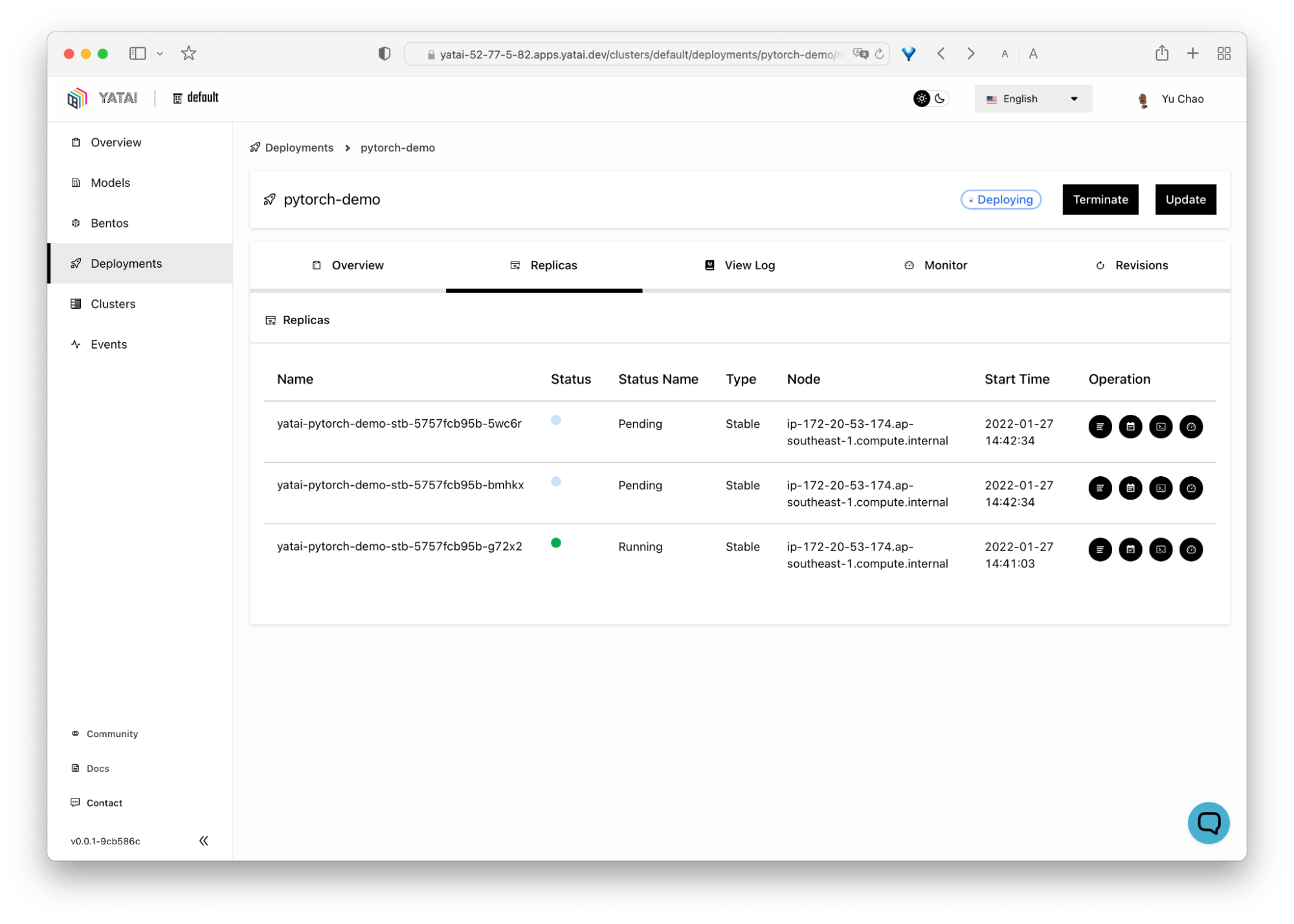

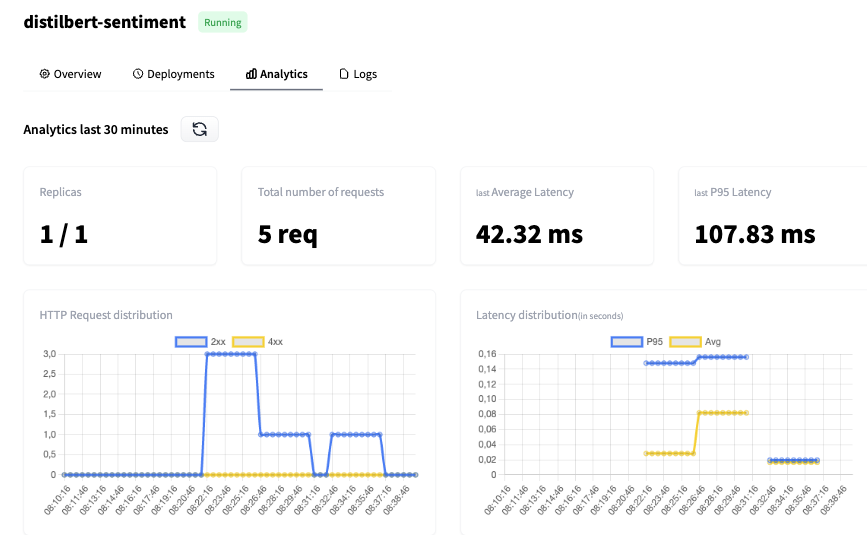

17. BentoML

BentoML makes it easier and faster to ship machine learning applications. It is a Python-first tool for deploying and maintaining APIs in production. It scales through optimizations such as parallel inference and adaptive batching, and it supports hardware acceleration.

BentoML’s interactive centralized dashboard makes it easy to organize and monitor deployed machine learning models. The best part is that it works with all kinds of machine learning frameworks, such as Keras, ONNX, LightGBM, PyTorch, and Scikit-learn. In short, BentoML provides a complete solution for model deployment, serving, and monitoring.

Image from BentoML

18. Hugging Face Inference Endpoints

Hugging Face Inference Endpoints is a cloud-based service offered by Hugging Face, an all-in-one ML platform that enables users to train, host, and share models, datasets, and demos. These endpoints are designed to help users deploy their trained machine learning models for inference without the need to set up and manage the required infrastructure.

Key features:

- Keep the cost as low as $0.06 per CPU core/hr and $0.6 per GPU/hr, depending on your needs.

- Easy to deploy within seconds.

- Fully managed with autoscaling.

- Part of the Hugging Face ecosystem.

- Enterprise-level security.

Image from Hugging Face

Note: You can also use MLflow and AWS SageMaker for model deployment and serving.

Model Monitoring in Production MLOps Tools

Whether your ML model is in development, validation, or deployed to production, these tools can help you monitor a range of factors:

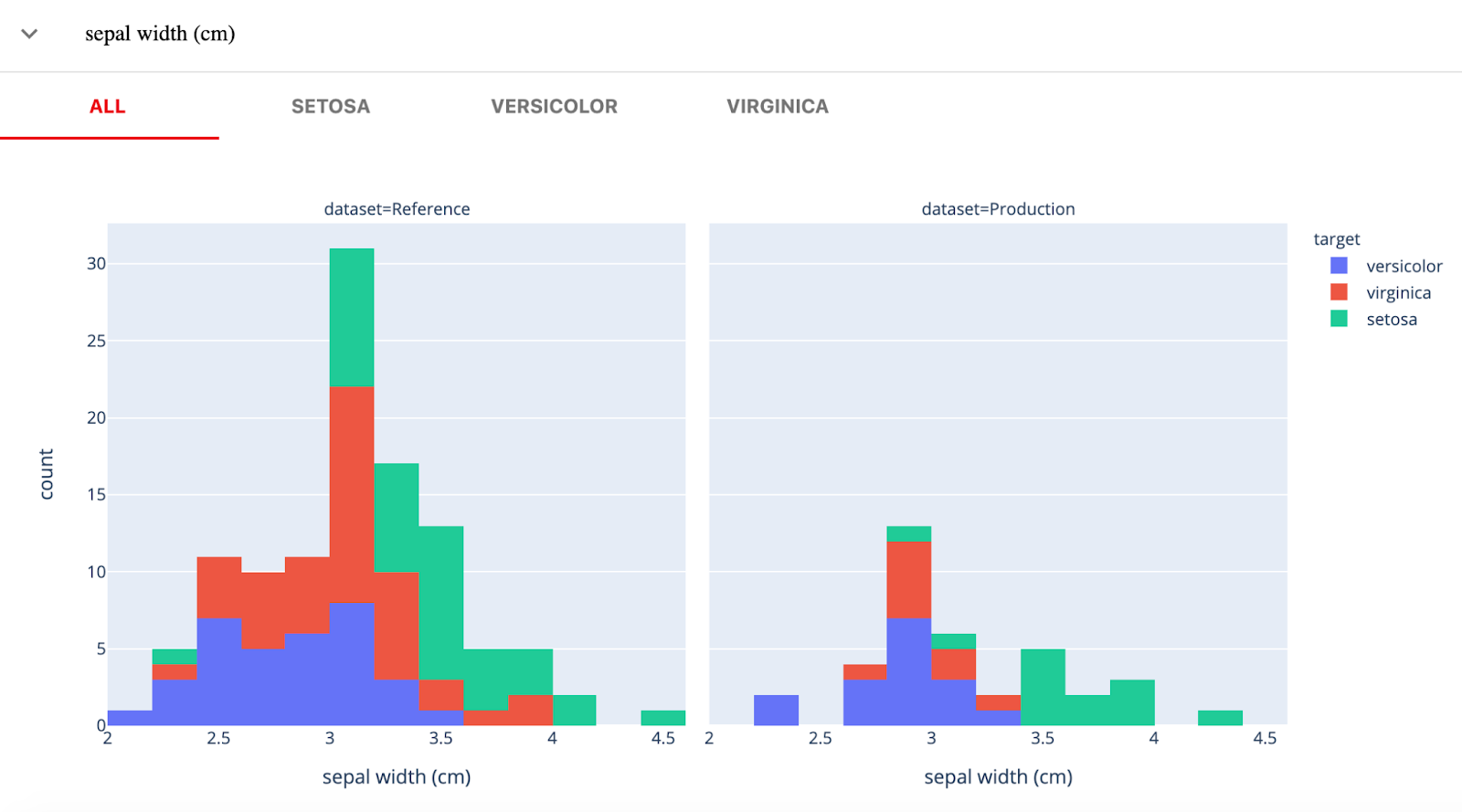

19. Evidently

Evidently AI is an open-source Python library for monitoring ML models during development, validation, and in production. It checks data and model quality, data drift, target drift, and regression and classification performance.

Evidently has three main components:

- Tests (batch model checks): for performing structured data and model quality checks.

- Reports (interactive dashboards): interactive data drift, model performance, and target visualization.

- Monitors (real-time monitoring): monitors data and model metrics from deployed ML service.
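Data drift checks compare the distribution of a feature in production against a reference sample. One common statistic for numerical features is the two-sample Kolmogorov-Smirnov statistic, sketched below in plain Python. This is not Evidently's API: `ks_statistic`, `has_drifted`, the sample data, and the 0.5 threshold are all assumptions made for illustration.

```python
from bisect import bisect_right


def ks_statistic(reference, current):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the two empirical CDFs."""
    ref, cur = sorted(reference), sorted(current)
    gaps = (
        abs(bisect_right(ref, v) / len(ref) - bisect_right(cur, v) / len(cur))
        for v in ref + cur
    )
    return max(gaps)


def has_drifted(reference, current, threshold=0.5):
    """Flag drift when the statistic exceeds a threshold (0.5 is an arbitrary illustration)."""
    return ks_statistic(reference, current) > threshold


# Hypothetical "age" feature: training data and two production batches.
training_ages = [22, 25, 31, 38, 44, 47, 52, 60]
same_population = [23, 27, 30, 39, 45, 49, 51, 58]
older_population = [55, 58, 61, 63, 66, 70, 72, 75]

print(has_drifted(training_ages, same_population))
print(has_drifted(training_ages, older_population))
```

A production check would normally turn the statistic into a significance test and repeat it per feature; the fixed threshold here only keeps the example short.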

Image from Evidently

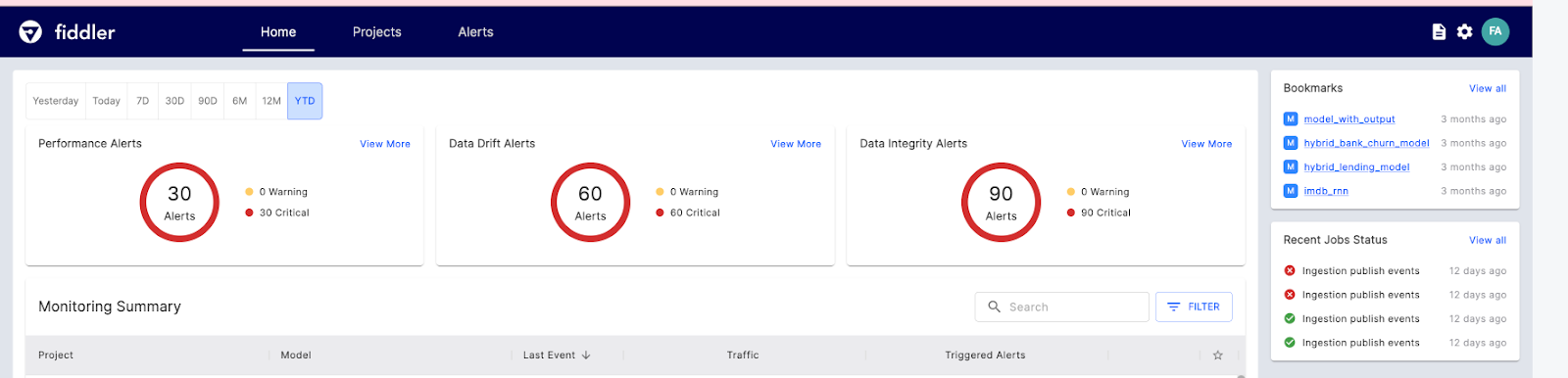

20. Fiddler

Fiddler AI is an ML model monitoring tool with an easy-to-use, clear UI. It lets you explain and debug predictions, analyze model behavior for the entire dataset, deploy machine learning models at scale, and monitor model performance.

Let’s look at the main Fiddler AI features for ML monitoring:

- Performance monitoring: in-depth visualization of data drifting, when it’s drifting, and how it’s drifting.

- Data integrity: avoid feeding incorrect data for model training.

- Tracking outliers: shows univariate and multivariate outliers.

- Service metrics: shows basic insights into the ML service operations.

- Alerts: set up alerts for a model or group of models to warn of the issues in production.

Image from Fiddler

Runtime Engines

The runtime engine is responsible for loading the model, preprocessing input data, running inference, and returning the results to the client application.

21. Ray

Ray is a versatile framework designed to scale AI and Python applications, making it easier for developers to manage and optimize their machine learning projects.

The platform consists of two main components: a core distributed runtime and a set of AI libraries tailored for simplifying ML compute.

Ray Core offers a small set of core primitives for building and scaling distributed applications:

- Tasks are functions that don't have a state and are executed within the cluster.

- Actors are worker processes that are stateful and created within the cluster.

- Objects are immutable values that can be accessed by any component within the cluster.

Ray also provides AI libraries for scalable datasets for ML, distributed training, hyperparameter tuning, reinforcement learning, and scalable and programmable serving.

The following example demonstrates the training and serving of a Gradient Boosting Classifier model.

import requests

from starlette.requests import Request

from typing import Dict

from sklearn.datasets import load_iris

from sklearn.ensemble import GradientBoostingClassifier

from ray import serve

# Train model.

iris_dataset = load_iris()

model = GradientBoostingClassifier()

model.fit(iris_dataset["data"], iris_dataset["target"])

@serve.deployment

class BoostingModel:

def __init__(self, model):

self.model = model

self.label_list = iris_dataset["target_names"].tolist()

async def __call__(self, request: Request) -> Dict:

payload = (await request.json())["vector"]

print(f"Received http request with data {payload}")

prediction = self.model.predict([payload])[0]

human_name = self.label_list[prediction]

return {"result": human_name}

# Deploy model.

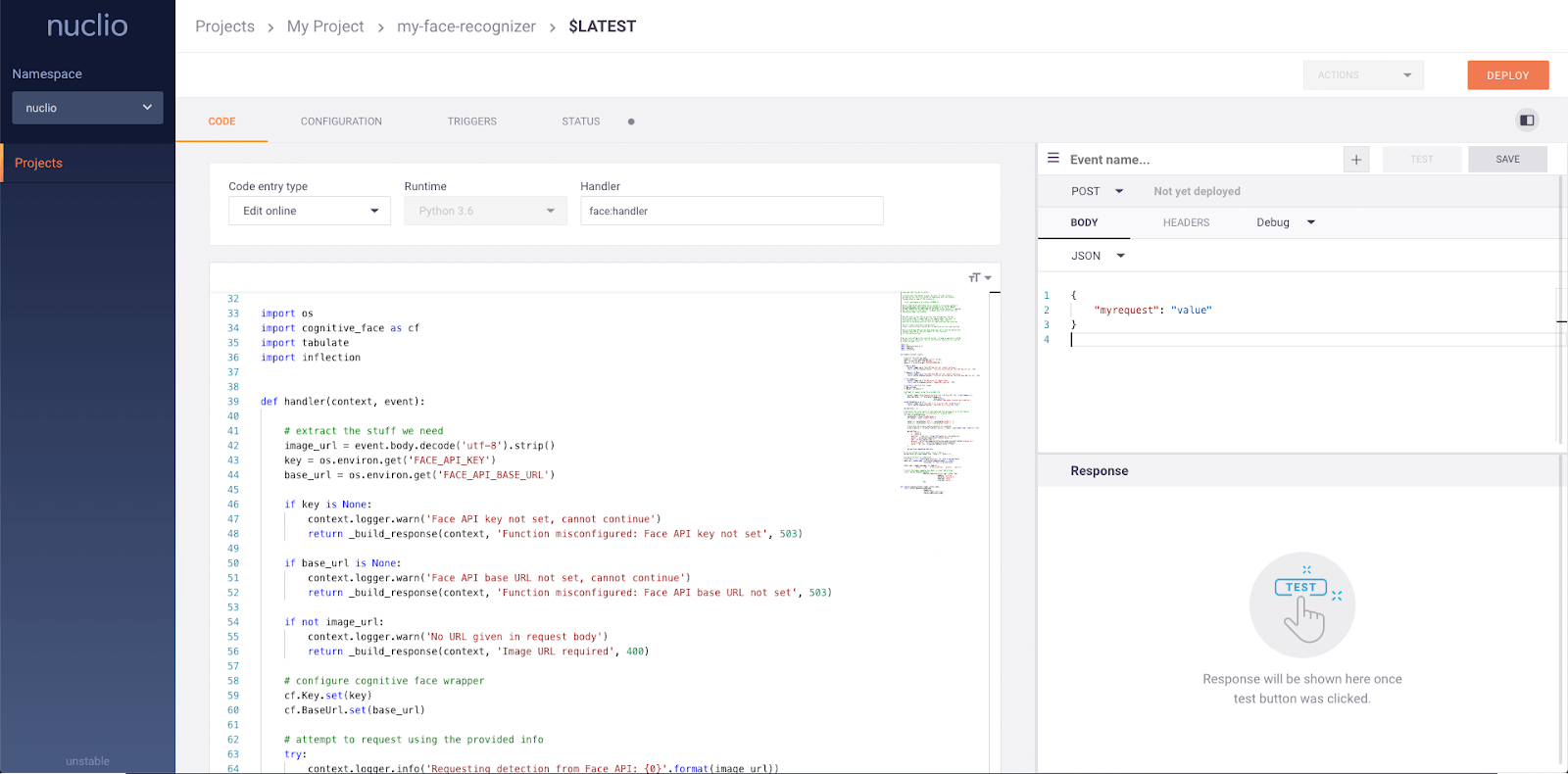

serve.run(BoostingModel.bind(model), route_prefix="/iris")22. Nuclio

Nuclio is a powerful framework that is focused on data, I/O, and compute-intensive workloads. It is designed to be serverless, meaning that you don't need to worry about managing servers. Nuclio is well-integrated with popular data science tools, such as Jupyter and Kubeflow. It also supports a wide variety of data and streaming sources and can be executed over CPUs and GPUs.

Key features:

- Requires minimal CPU/GPU and I/O resources to perform real-time processing while maximizing parallelism.

- Integrates with a wide range of data sources and ML frameworks.

- Provides stateful functions with data-path acceleration

- Portability across all kinds of devices and cloud platforms, especially low-power devices.

- Designed for the enterprise.

Image from Nuclio

End-to-End MLOps Platforms

If you’re looking for a comprehensive MLOps tool that can help during the entire process, here are some of the best:

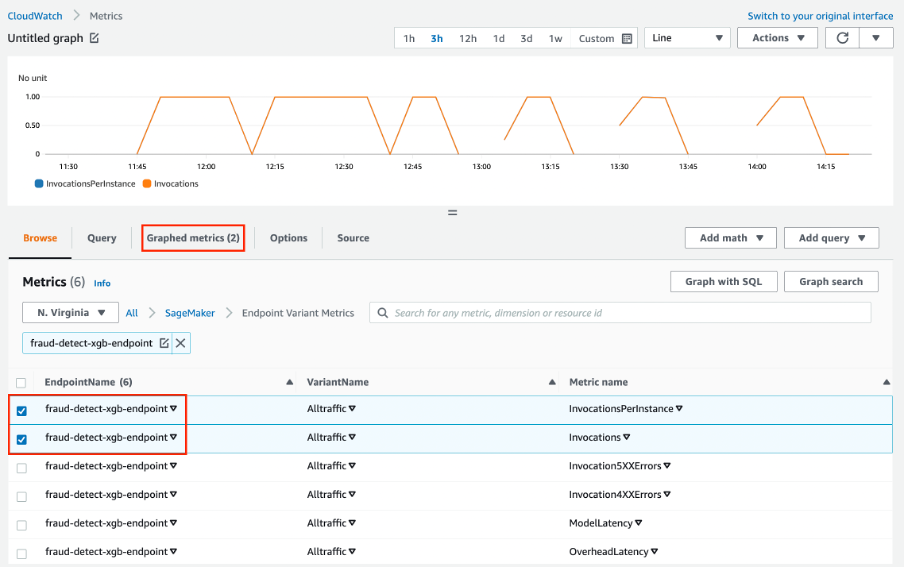

23. AWS SageMaker

Amazon Web Services SageMaker is a one-stop solution for MLOps. You can train and accelerate model development, track and version experiments, catalog ML artifacts, integrate CI/CD ML pipelines, and deploy, serve, and monitor models in production seamlessly.

Key features:

- A collaborative environment for data science teams.

- Automate ML training workflows.

- Deploy and manage models in production.

- Track and maintain model versions.

- CI/CD for automatic integration and deployment.

- Continuous monitoring and retraining of models to maintain quality.

- Optimize the cost and performance.

Image from Amazon SageMaker

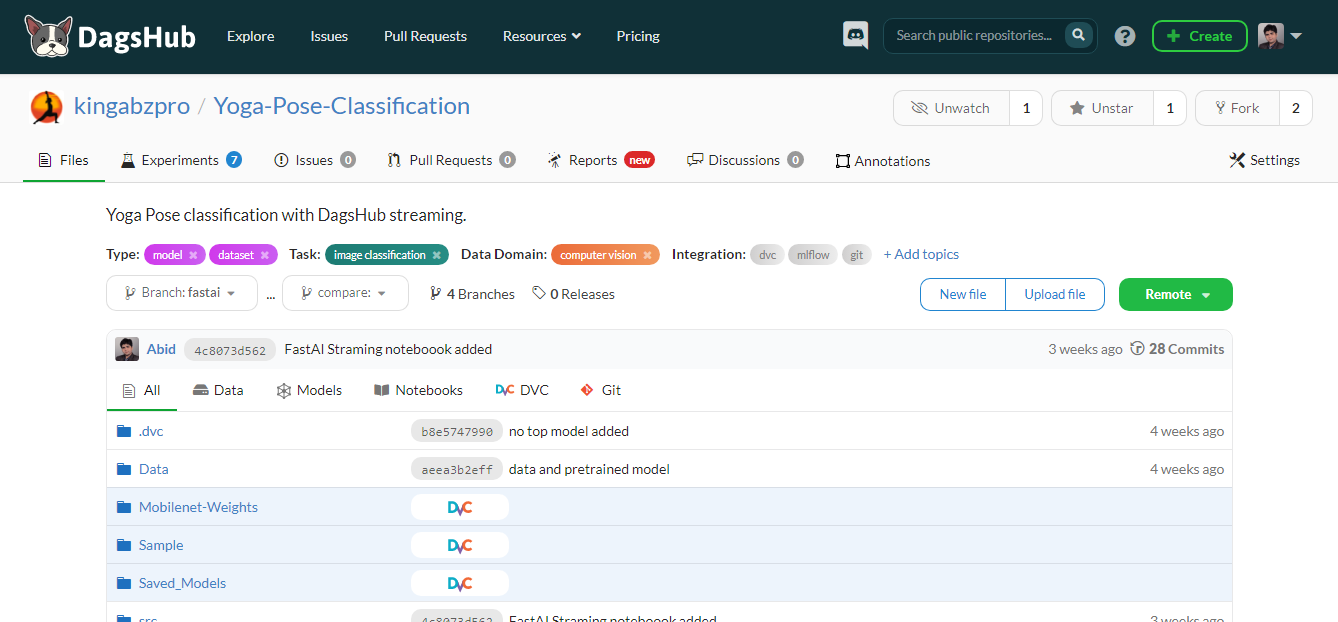

24. DagsHub

DagsHub is a platform made for the machine learning community to track and version the data, models, experiments, ML pipelines, and code. It allows your team to build, review, and share machine-learning projects.

Simply put, it is a GitHub for machine learning, and you get various tools to optimize the end-to-end machine learning process.

Key features:

- Git and DVC repository for your ML projects.

- DagsHub logger and MLflow instance for experiment tracking.

- Dataset annotation using a Label Studio instance.

- Diffing the Jupyter notebooks, code, datasets, and images.

- Ability to comment on the file, the line of the code, or the dataset.

- Create a report for the project just like GitHub wiki.

- ML pipeline visualization.

- Reproducible results.

- Running CI/CD for model training and deployment.

- Data Merging.

- Provide integration with GitHub, Google Colab, DVC, Jenkins, external storage, webhooks, and New Relic.

Image by Author

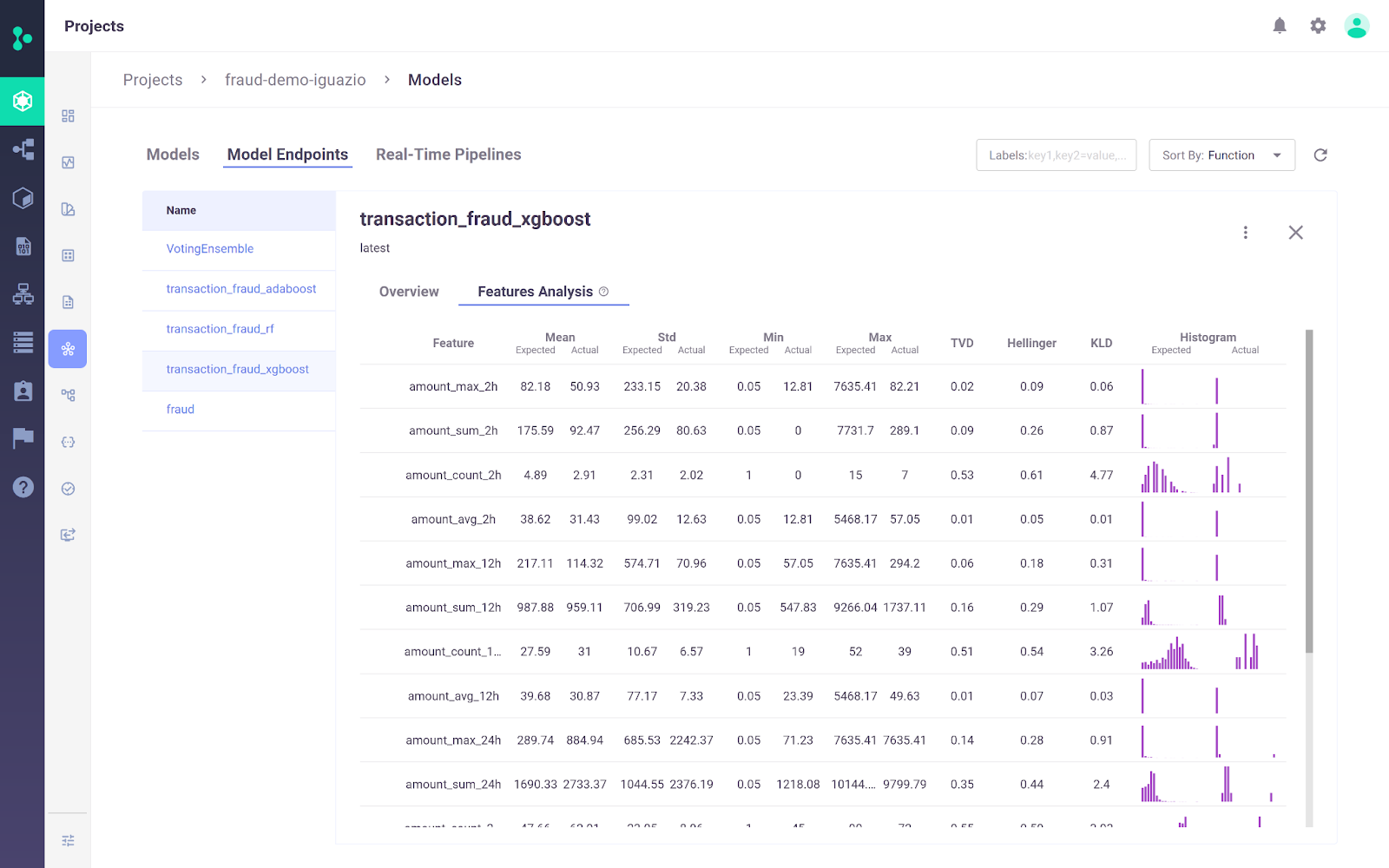

25. Iguazio MLOps Platform

The Iguazio MLOps Platform is an end-to-end MLOps platform that enables organizations to automate the machine learning pipeline from data collection and preparation to training, deployment, and monitoring in production. It provides an open (MLRun) and managed platform.

One key differentiator of the Iguazio MLOps Platform is its flexibility in deployment options. Users can deploy AI applications anywhere, including any cloud, hybrid, or on-premises environments. This is particularly important for industries like healthcare and finance, where data privacy concerns may make on-premises deployment a requirement.

Image from Iguazio MLOps Platform

Key features:

- The platform allows users to ingest data from any source and build reusable online and offline features using the integrated feature store.

- It supports continuously training and evaluating models at scale using scalable serverless functions with automated tracking, data versioning, and continuous integration and deployment.

- It makes it easy to deploy models to production in just a few clicks, continuously monitors model performance, and mitigates model drift.

- The platform comes with a simple dashboard for managing, governing, and monitoring models and real-time production.

Conclusion

We’re at a time when there is a boom in the MLOps industry. Every week you see new developments, new startups, and new tools launching to solve the basic problem of converting notebooks into production-ready applications. Even existing tools are expanding the horizon and integrating new features to become super MLOps tools.

In this blog, we have learned about the best MLOps tools for various steps of the MLOps process. These tools will help you during the experimentation, development, deployment, and monitoring stages.

If you are new to machine learning and want to master the essential skills to land a job as a machine learning scientist, try taking our Machine Learning Scientist with Python career track.

If you are a professional and want to learn more about standard MLOps Practices, read our article on the MLOps Best Practices and How to Apply Them and check out our MLOps Fundamentals skill track.

MLOps Tools FAQs

What are MLOps Tools?

MLOps tools help standardize, simplify, and streamline the ML ecosystem. These tools are used for experiment tracking, model metadata management, orchestration, model optimization, workflow versioning, model deployment and serving, and model monitoring in production.

What skills are needed for an MLOps Engineer?

- Ability to implement cloud solutions.

- Experience with Docker and Kubernetes.

- Experience with Quality Assurance using experiment tracking and workflow versioning.

- Ability to build MLOps pipelines.

- Familiarity with the Linux operating system.

- Experience with ML frameworks such as PyTorch, TensorFlow, and TFX.

- Experience with DevOps and software development.

- Experience with unit and integration testing, data and model validation, and post-deployment monitoring.

Which cloud is best for MLOps?

AWS, GCP, and Azure provide a variety of tools for the machine learning lifecycle. They all provide end-to-end solutions for MLOps. AWS takes the lead in terms of popularity and market share. It also provides easy solutions for model training, serving, and monitoring.

Is MLOps easy to learn?

It depends on your prior experience. To master MLOps, you need to learn both machine learning and software development life cycles. Apart from strong proficiency in programming languages, you need to learn several MLOps tools. It is easy for DevOps engineers to learn MLOps as most of the tools and strategies are driven by software development.

Is Kubeflow better than MLflow?

It depends on the use case. Kubeflow provides reproducibility at a larger level than MLflow, as it manages the orchestration.

- Kubeflow is generally used for deploying and managing complex ML systems at scale.

- MLflow is generally used for ML experiment tracking and for storing and managing model metadata.

How is MLOps different from DevOps?

Both are software development strategies. DevOps focuses on developing and managing large-scale software systems, while MLOps focuses on deploying and maintaining machine learning models in production.

- DevOps: Continuous Integration (CI) and Continuous Delivery (CD).

- MLOps: Continuous Integration, Continuous Delivery, Continuous Training, and Continuous Monitoring.

How Do MLOps Tools Integrate with Existing Data Science Workflows?

MLOps tools are designed to integrate seamlessly with existing data science workflows. They typically support various data science tools and platforms, provide APIs for integration, and offer plugins or extensions for popular data science environments. This integration enables data scientists to maintain their current workflows while leveraging the benefits of MLOps for better scalability, reproducibility, and deployment efficiency.

Can MLOps Tools Help with Model Explainability and Fairness?

Yes, many MLOps tools include features to improve model explainability and fairness. They offer functionalities such as model interpretation, bias detection, and fairness metrics, which assist in understanding and improving how models make decisions. This is crucial for deploying responsible AI and maintaining compliance with regulatory standards.
