Introduction to AI Agents: Getting Started With Auto-GPT, AgentGPT, and BabyAGI

AI agents such as Auto-GPT, AgentGPT, and BabyAGI are the next evolution in the fast-developing world of AI. While ChatGPT brought generative AI into the mainstream at the end of 2022, it requires a human to input prompts. The idea behind AI agents is to have the AI work independently towards a goal with minimal or zero human input.

Many developers have recognized the potential of this new technology. Measured by the number of GitHub stars it has received (the equivalent of "favoriting" a software project), Auto-GPT is the fastest-growing open-source project in history. In the six weeks since its development began, it has gained 107,000 stars. For comparison, pandas, the most popular Python package for data science, has 38,000 stars.

This tutorial explains what AI agents are, how they are related to large language models like GPT, and how they can be used. Before reading this tutorial, it is helpful to know what a large language model is. A Beginner's Guide to GPT-3 is a good primer.

How Are AI Agents Related to ChatGPT?

Explaining AI agents requires some knowledge of jargon. Check out the definitions below:

- A large language model (LLM) is a type of machine learning model used to generate text.

- OpenAI's GPT is currently the most popular LLM. The latest version is GPT-4, though the previous version, GPT-3.5-turbo, is still in use.

- Chatting is a workflow where you send a text prompt to the AI, which returns a text response.

- Embedding is a workflow where you send a text prompt to the AI, which returns a numeric representation of text as its response. Representing text as numbers is useful for search, recommendation, and classification tasks.

- OpenAI's ChatGPT is a web application that provides a convenient user interface for chatting with GPT.

Just as ChatGPT is a web application that is powered by the GPT LLM, the three AI agents covered in this tutorial are also applications powered by GPT.

The main goal of these AI agents is to reduce the amount of human interaction needed for the AI to complete a task.

AgentGPT and BabyAGI are essentially "GPT on a loop," where GPT is repeatedly called to break the user's request into solvable problems, and the output from each step is refined until each subtask is completed successfully.
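To make "GPT on a loop" concrete, here is a minimal sketch of such a loop. It is not the actual code of AgentGPT or BabyAGI: `fake_llm` is a hypothetical stand-in for a real call to GPT, the names `run_agent` and `max_steps` are assumptions made for illustration, and the step where each output is refined is left out for brevity.

```python
from collections import deque


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a call to GPT; returns canned text."""
    if prompt.startswith("PLAN:"):
        # A real model would break the goal into subtasks here.
        return "research topic\nwrite outline\nwrite draft"
    return f"done: {prompt.removeprefix('DO: ')}"


def run_agent(goal: str, llm=fake_llm, max_steps: int = 10) -> list[str]:
    """Ask the model for subtasks, then call it once per subtask."""
    tasks = deque(llm(f"PLAN: {goal}").splitlines())
    results = []
    # max_steps caps the number of model calls so the loop always ends.
    while tasks and len(results) < max_steps:
        task = tasks.popleft()
        results.append(llm(f"DO: {task}"))
    return results


results = run_agent("Write a tutorial about Auto-GPT")
print(results)
```

The cap on the number of steps matters in practice, because every pass through the loop is another call to the model.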

Auto-GPT goes a step further by providing the AI agent with internet access and the ability to run code, letting it solve a wider variety of problems.

The following innovations are worth discussing.

Long-term memory

LLMs generate words based on the previous words in the conversation (both the user prompts and the AI responses). However, LLMs have fairly short memories, limited by what is known as the context window: many models remember only a few hundred words, and the state-of-the-art GPT-4 remembers about 6,000 words by default. When the length of the conversation exceeds the memory of the LLM, the responses can become inconsistent with each other.
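The effect of a limited memory can be sketched with a toy example. The function below keeps only the most recent messages that fit a word budget. Counting words is a simplifying assumption (real models count tokens), and `trim_history` is a hypothetical helper, not part of any of the agents.

```python
def trim_history(messages: list[str], max_words: int) -> list[str]:
    """Keep the most recent messages that fit within a word budget."""
    kept = []
    used = 0
    # Walk backwards so the newest messages are kept first.
    for message in reversed(messages):
        n_words = len(message.split())
        if used + n_words > max_words:
            break
        kept.append(message)
        used += n_words
    kept.reverse()
    return kept


history = [
    "My name is Ada and I like hiking.",   # 8 words
    "Nice to meet you, Ada!",              # 5 words
    "What should I pack for a day trip?",  # 8 words
]
visible = trim_history(history, max_words=15)
print(visible)
```

With a budget of 15 words, the first message no longer fits, so a model that sees only `visible` has lost the user's introduction.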

For most use cases, this isn't a problem. However, in some cases, it is essential that the entire conversation, and perhaps even previous conversations, is remembered. Chatbots, and their more advanced cousins, AI agents, are examples of this.

Giving the AI agent a memory is achieved by using an embedding workflow to store the text of the conversation as numbers. Each user prompt or AI response is stored as an array that represents the meaning of the text.

These numeric arrays are stored in a vector database. Currently, Auto-GPT and BabyAGI use Pinecone (the leading player in this space), and the AgentGPT team is developing support for this feature.
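The idea of storing text as numbers and retrieving it by meaning can be sketched without any external service. In the toy example below, `toy_embed` is a hypothetical stand-in for a real embedding model (it only counts words), and `ToyMemory` is an in-memory stand-in for a vector database such as Pinecone. Neither reflects how Auto-GPT or BabyAGI implement memory.

```python
import math
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Stand-in for an embedding model: a sparse vector of word counts."""
    words = (word.strip(".,!?").lower() for word in text.split())
    return Counter(word for word in words if word)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyMemory:
    """In-memory stand-in for a vector database."""

    def __init__(self):
        self.items = []  # list of (vector, original text) pairs

    def add(self, text: str) -> None:
        self.items.append((toy_embed(text), text))

    def search(self, query: str, top_k: int = 1) -> list[str]:
        query_vec = toy_embed(query)
        ranked = sorted(
            self.items,
            key=lambda item: cosine(query_vec, item[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:top_k]]


memory = ToyMemory()
memory.add("The user wants a tutorial about Auto-GPT.")
memory.add("The user prefers examples in Python.")
print(memory.search("Which tutorial does the user want?"))
```

A real embedding model captures meaning rather than exact word overlap, but the store-then-search pattern is the same.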

Web-browsing capabilities

If you ask ChatGPT a question about recent events, it will tell you that it cannot answer because it was only trained on data until September 2021. Again, this limitation isn't a problem for many applications, but it does cause issues if your task involves current events or information.

One solution to this problem is to give the AI web browsing capabilities to access the internet and find answers for itself.

Auto-GPT can perform research for itself via the Google Search API.

This feature is slightly controversial: the developers of AgentGPT and BabyAGI have not yet added it, preferring to restrict the AI's knowledge to whatever GPT knows.

Running code

While LLMs like GPT can generate code from scratch, and debug or improve existing code, they can't actually run it.

Auto-GPT can execute shell and Python code. That means it can both write code and run it.

One particularly powerful ability this gives it is that it can write the code to interact with other software, then run it. This means it can complete tasks by making use of other software.
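As a rough illustration of the "write code, then run it" step, the sketch below runs a snippet of Python in a separate process and captures its output. This is an assumption about how such a step could look, not Auto-GPT's implementation. `run_python` is a hypothetical helper, and a subprocess with a timeout is not a security sandbox.

```python
import subprocess
import sys


def run_python(code: str, timeout: float = 5.0) -> str:
    """Run a Python snippet in a separate process and return its stdout."""
    completed = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout,  # stop runaway code instead of waiting forever
    )
    if completed.returncode != 0:
        raise RuntimeError(completed.stderr.strip())
    return completed.stdout.strip()


generated_code = "print(2 + 2)"  # pretend the model wrote this line
output = run_python(generated_code)
print(output)
```

An agent would feed `output` (or the error message) back to the model so that it can decide what to do next.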

What are the Use Cases of AI Agents?

It is still early days for working out how AI agents can be used. Many examples are variations of an automated personal assistant, like a next-generation IBM Watson Assistant.

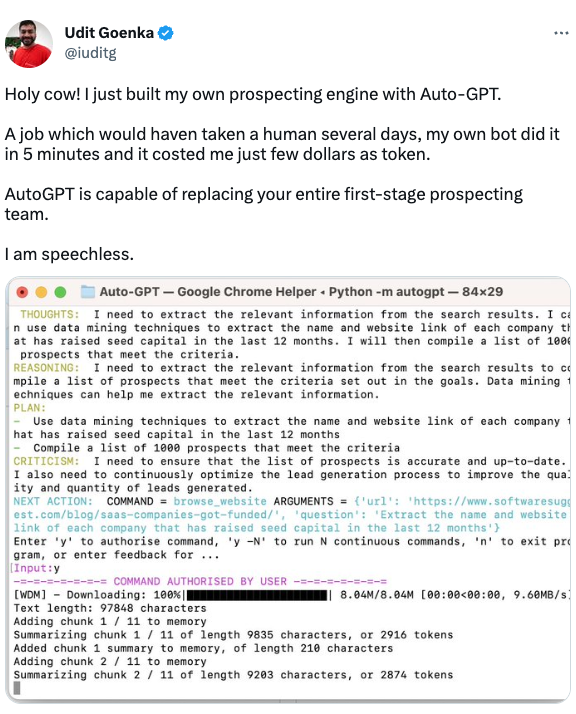

Udit Goenka, the CEO of FirstSales.io, posted on Twitter that he'd used Auto-GPT to build a prospecting engine. The screenshot in the tweet shows the AI agent creating a plan to search for companies that have raised seed capital in the last year (that is, prospects who ought to have cash available) and describing the details to create the list (extracting company names and URLs).

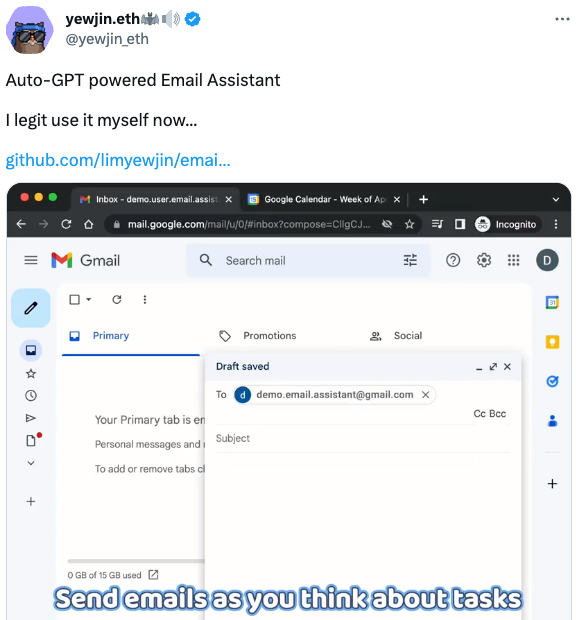

Yew Jin Lim, a software engineer at Google, posted that he'd used Auto-GPT to create an email assistant (GitHub repo). The video in the tweet shows Yew Jin emailing the AI agent with details of a task to complete and the agent performing tasks like adding an event to his calendar.

What are the Dangers and Limitations of AI Agents?

The consensus advice around working with AI is that most tasks are better performed with a human in the loop to sanity-check what the AI is doing. Remember that the AI is supposed to be an assistant, not an expert (and is an assistant that sometimes hallucinates). Keep the following cautions in mind.

Running the AI continuously can be expensive

While a single call to GPT via the OpenAI API is fairly cheap (typically less than 1 US cent for GPT-3.5, depending on the amount of output; GPT-4 is more expensive), using any of the three AI Agents discussed here to perform a complex task could involve hundreds or thousands of API calls.
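The arithmetic behind this warning is simple multiplication. The sketch below assumes a price of 1 US cent per call, which is the upper bound quoted above for GPT-3.5. Actual prices depend on the model and on how much text is sent and returned.

```python
def estimate_cost(n_calls: int, cost_per_call_usd: float) -> float:
    """Total cost in US dollars for a given number of API calls."""
    return n_calls * cost_per_call_usd


# Assumed price: 0.01 USD per call (illustrative upper bound for GPT-3.5).
for n_calls in (1, 100, 1000):
    print(f"{n_calls} calls -> ${estimate_cost(n_calls, 0.01):.2f}")
```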

It is a good idea to include breakpoints where human intervention is needed to provide permission for the AI to continue running.
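One way to picture such a breakpoint is a loop that pauses every few steps and continues only if a human agrees. This is a sketch under stated assumptions: `run_with_breakpoints` and `ask_in_terminal` are hypothetical names, and the steps are plain strings rather than real calls to GPT.

```python
from typing import Callable, Iterable


def run_with_breakpoints(
    steps: Iterable[str],
    approve: Callable[[str], bool],
    every: int = 3,
) -> list[str]:
    """Run steps in order, asking for approval after every `every` steps."""
    done = []
    for index, step in enumerate(steps):
        if index > 0 and index % every == 0:
            if not approve(f"{index} steps done. Continue?"):
                break
        done.append(step)  # a real agent would call GPT here
    return done


def ask_in_terminal(question: str) -> bool:
    """Interactive approver for use in a terminal."""
    return input(f"{question} [y/n] ").strip().lower() == "y"


steps = [f"step {i}" for i in range(1, 8)]
# A scripted approver is used so the example runs unattended;
# pass approve=ask_in_terminal to be prompted instead.
answers = iter([True, False])
completed = run_with_breakpoints(steps, approve=lambda question: next(answers))
print(completed)
```

Here the first request to continue is approved and the second is refused, so the run stops after six of the seven steps.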

Giving Auto-GPT access to software can be dangerous

While Auto-GPT can be provided with access to any API, if you give it access to buy stock on your behalf automatically, you should be prepared to lose all the money in your account. Likewise, it's a bad idea to give it write-access to your corporate database.
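A common way to limit this kind of risk is to allow only an explicit list of actions and refuse everything else. The sketch below illustrates the idea. The action names and the `dispatch` function are hypothetical and are not part of Auto-GPT.

```python
# Hypothetical action names; a real agent would define its own commands.
ALLOWED_ACTIONS = {"search_web", "read_file"}


def dispatch(action: str, argument: str) -> str:
    """Refuse any action that is not explicitly allowed."""
    if action not in ALLOWED_ACTIONS:
        raise PermissionError(f"Action {action!r} is not on the allow-list")
    # Placeholder: a real implementation would perform the action here.
    return f"would run {action} with {argument!r}"


print(dispatch("search_web", "seed funding 2023"))
try:
    dispatch("buy_stock", "100 shares")
except PermissionError as error:
    print(error)
```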

Going beyond personal or corporate losses, some AI researchers have argued that advanced AI poses larger or even existential threats. While initial attempts to use Auto-GPT to destroy humanity have been unsuccessful, it's worth bearing in mind the warning from Eliezer Yudkowsky, a Senior Research Fellow at the Machine Intelligence Research Institute:

To visualize a hostile superhuman AI, don’t imagine a lifeless book-smart thinker dwelling inside the internet and sending ill-intentioned emails. Visualize an entire alien civilization, thinking at millions of times human speeds, initially confined to computers—in a world of creatures that are, from its perspective, very stupid and very slow. A sufficiently intelligent AI won’t stay confined to computers for long. In today’s world you can email DNA strings to laboratories that will produce proteins on demand, allowing an AI initially confined to the internet to build artificial life forms or bootstrap straight to postbiological molecular manufacturing.

If somebody builds a too-powerful AI, under present conditions, I expect that every single member of the human species and all biological life on Earth dies shortly thereafter.

While this is clearly a worst-case scenario, it's worth considering the dangers an unshackled AI could pose.

How Can I Access an AI Agent?

If you want to try these AI agents yourself, several websites let you try out the tech. If you want to use them more seriously, you'll need to install them on your development platform of choice.

Whichever agent you use, and however you decide to use it, you'll need to get an OpenAI API key. Instructions for this are given in the Using GPT-3.5 and GPT-4 via the OpenAI API in Python tutorial.

If you want to install any of these AI agents, note that they are command-line applications, and in their current state, they all require a bit of effort to set up.

Web interfaces

AI platform Hugging Face provides a hosted version of Auto-GPT. You just need to provide your OpenAI API key, then give the AI a role and some goals.

God Mode (named after a video game cheat mode) similarly allows you to input your OpenAI API key and simply give Auto-GPT a task to complete.

Replit users can also fork this repl and give it their OpenAI API key.

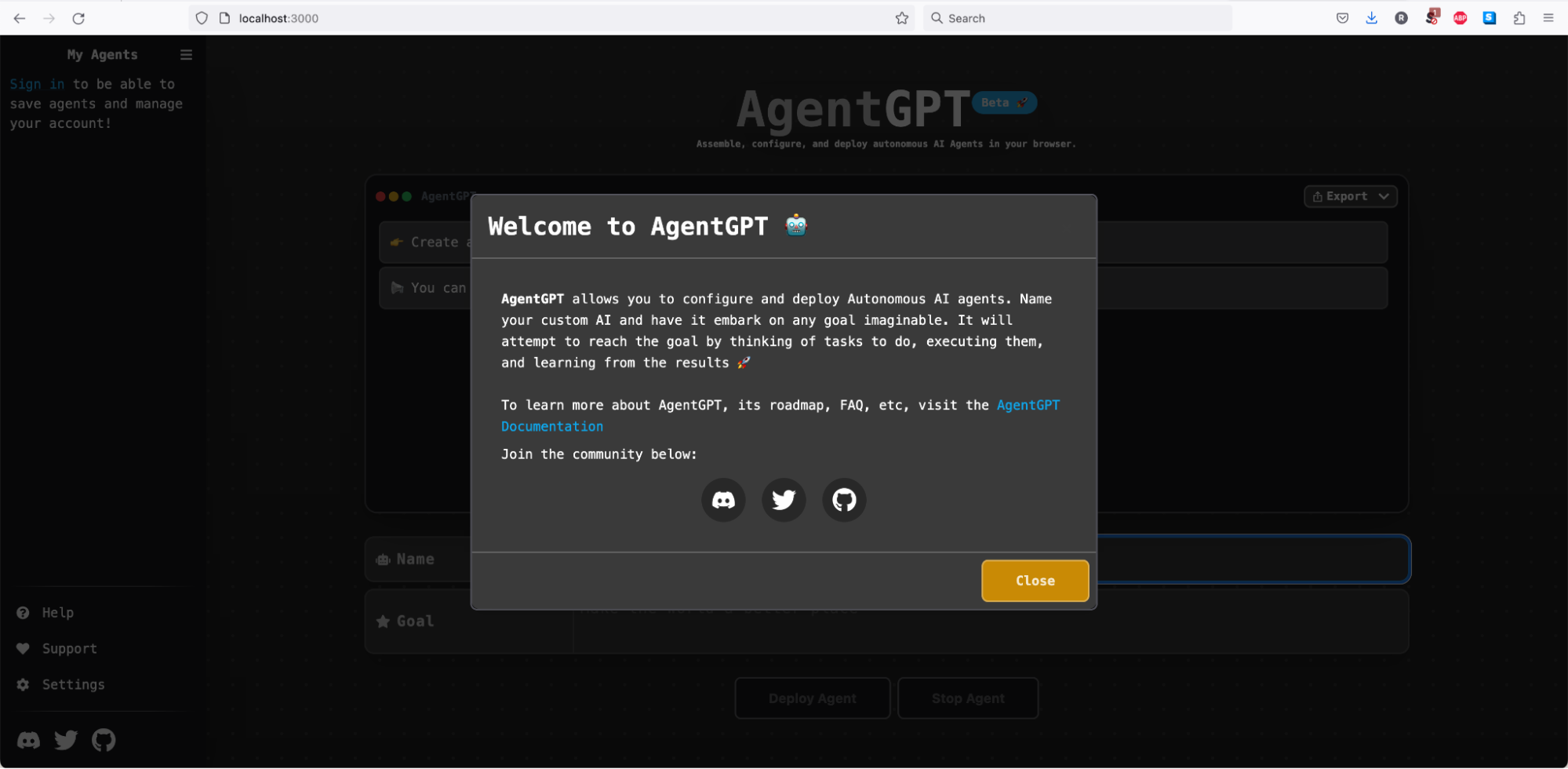

The AgentGPT team provides a demo version of their AI agent, though as a free version, it will only run for about two minutes before terminating.

Currently, there is no hosted version of BabyAGI that I could find.

Install Auto-GPT

Auto-GPT is a command-line application, and in its current state, it requires a bit of effort to set up. You can find detailed documentation on setup to help you get started.

This guide assumes that you can access a shell (like bash or zsh), and have git and Python installed.

Clone the Auto-GPT repository

In the terminal, clone the Auto-GPT repository.

git clone https://github.com/Significant-Gravitas/Auto-GPT.git

Navigate to the Auto-GPT directory that has just been created, and check out the stable branch.

cd Auto-GPT

git checkout stable

Get API keys

Auto-GPT requires that you have an OpenAI API key (to contact GPT). The free account only allows you to make 3 API calls per minute, which is insufficient for use with Auto-GPT, so you'll need to get a paid account. Instructions for this are given in our Using GPT-3.5 and GPT-4 via the OpenAI API in Python tutorial.
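To see why three calls per minute is too slow, consider how long a task needing a few hundred calls would take at that rate. The figure of 300 calls is an illustrative assumption, not a measurement, and `minutes_needed` is a hypothetical helper.

```python
import math


def minutes_needed(n_calls: int, calls_per_minute: int = 3) -> int:
    """Minimum whole minutes needed to make n_calls at a fixed rate limit."""
    return math.ceil(n_calls / calls_per_minute)


# Illustrative assumption: a task that needs 300 API calls.
print(minutes_needed(300), "minutes at 3 calls per minute")
```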

You can optionally make use of the Pinecone API (for storage), the ElevenLabs API (for a speech interface), the Google API (for search), and the Twitter API (for promoting what you just built).

Configure Auto-GPT

Auto-GPT stores all the API keys in a configuration file named .env.

Note that storing API keys like this in a plain text file is poor security practice, so you should only run Auto-GPT in a secure environment, such as on your local machine, or you should invalidate your API keys after using them.

Take a copy of the .env.template file and name it .env.

In a text editor, find the line starting OPENAI_API_KEY= and add your OpenAI API key to it without spaces or quotes.

Add any additional API keys in the same key=value format.
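Before running the agent, it can be useful to check that the file is filled in correctly. The sketch below parses text in the key=value format and flags a missing key, or a value containing spaces or quotes. It works on a sample string with placeholder values; `parse_env`, `find_problems`, and `EXAMPLE_OTHER_KEY` are hypothetical names, and the parser is deliberately simpler than a full .env parser.

```python
def parse_env(text: str) -> dict[str, str]:
    """Parse simple key=value lines, skipping blanks and # comments."""
    values = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip()
    return values


def find_problems(config: dict[str, str], required=("OPENAI_API_KEY",)) -> list[str]:
    """Report required keys that are empty or contain spaces or quotes."""
    found = []
    for key in required:
        value = config.get(key, "")
        if not value:
            found.append(f"{key} is missing or empty")
        elif any(ch in value for ch in " '\""):
            found.append(f"{key} contains spaces or quotes")
    return found


sample = """
# Placeholder values, not real keys
OPENAI_API_KEY=sk-your-key-here
EXAMPLE_OTHER_KEY=
"""
config = parse_env(sample)
print(find_problems(config))
print(find_problems(parse_env('OPENAI_API_KEY="sk-your-key-here"')))
```

To check a real file, read its contents with `pathlib.Path(".env").read_text()` and pass the result to `parse_env`.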

Run Auto-GPT

Auto-GPT is run via the run.sh shell script (run.bat on Windows).

To get help on how to use this script, use the --help argument.

sh run.sh --help

Note that the script does a lot of checking for versions of Python packages before displaying the usage details.

To start an Auto-GPT assistant, simply run that same command without the help argument.

sh run.sh

After going through the package checks, Auto-GPT will ask you for three things:

- Provide a name for your AI assistant.

- Describe your AI's role.

- Provide up to five goals for the AI assistant to attempt.
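These three answers can be thought of as a small record. The sketch below models them with a dataclass that enforces the five-goal limit. `AgentProfile` is a hypothetical name used for illustration, and it does not describe how Auto-GPT stores these settings internally.

```python
from dataclasses import dataclass, field


@dataclass
class AgentProfile:
    """Hypothetical container for the three answers Auto-GPT asks for."""

    name: str
    role: str
    goals: list[str] = field(default_factory=list)

    def __post_init__(self):
        # The prompt accepts up to five goals.
        if len(self.goals) > 5:
            raise ValueError("Provide at most five goals")


profile = AgentProfile(
    name="Jeff",
    role="A data science expert who writes tutorials about AI",
    goals=["Write a tutorial about Auto-GPT"],
)
print(profile)
```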

Install AgentGPT

AgentGPT provides a brief description of how to get started in the README of its GitHub repo.

Clone the AgentGPT repository

In the terminal, clone the AgentGPT repository.

git clone https://github.com/reworkd/AgentGPT.git

Navigate to the AgentGPT directory that has just been created.

cd AgentGPT

Run the Setup Script

Run the bash setup script to set up the AI agent.

bash setup.sh --local

The first thing you will be asked is to input your OpenAI API key and press ENTER.

You may be asked to give access to your Desktop files and to accept incoming network connections. Accept these.

After a few seconds, you should receive a message saying something like:

ready - started server on 0.0.0.0:3000, url: http://localhost:3000

Copy the URL and paste it into the address bar in your web browser. AgentGPT will open with a welcome message.

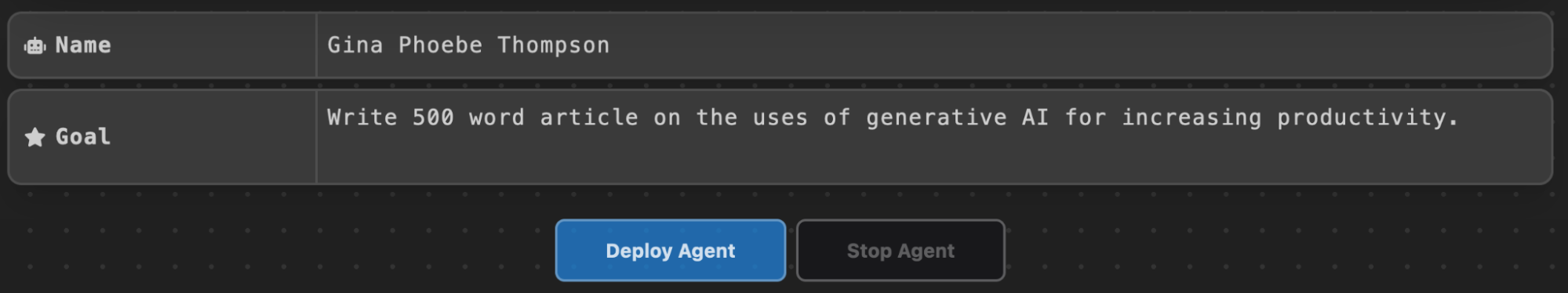

Deploy your Agent

Give your agent a name and a goal, then click "Deploy Agent".

Install BabyAGI

BabyAGI has some (very minimal) documentation in the README of its GitHub repo.

Clone the BabyAGI repository

In the terminal, clone the BabyAGI repository.

git clone https://github.com/yoheinakajima/babyagi.git

Navigate to the BabyAGI directory that has just been created.

cd babyagi

Install Required Python Packages

Use pip to install the packages needed to run BabyAGI.

pip install -r requirements.txt

Configure BabyAGI

Create a configuration file named .env.

cp .env.example .env

Edit the configuration file. You can use any terminal text editor; here, we'll use vim.

vim .env

Press i to insert text.

- In the line beginning OPENAI_API_KEY=, append your OpenAI API key.

- In the line beginning INSTANCE_NAME=, optionally give your AI agent a name.

- In the line beginning OBJECTIVE=, give your AI agent a goal to achieve.

When you have finished, press Esc, then type :wq and press ENTER to save the file and exit vim.

Run BabyAGI

Run the BabyAGI script.

python3 babyagi.py

Auto-GPT Example

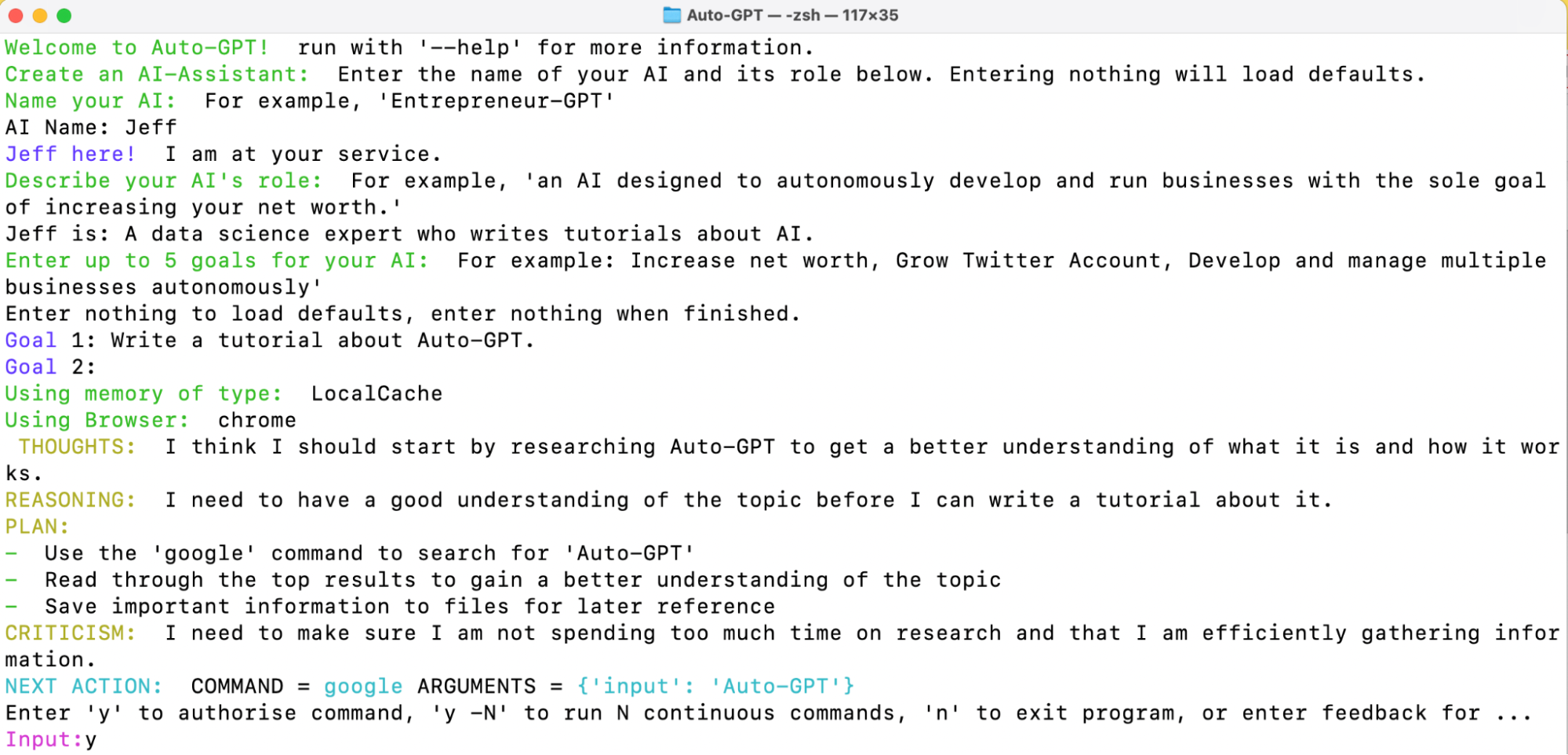

As a test, I named my AI assistant "Jeff," described it as "A data science expert who writes tutorials about AI," and gave it the goal to "Write a tutorial about Auto-GPT."

Auto-GPT will regularly prompt you for permission to perform the next task, whether it is searching the internet for more information or writing a file. Press 'y' to let it proceed, or provide text feedback.

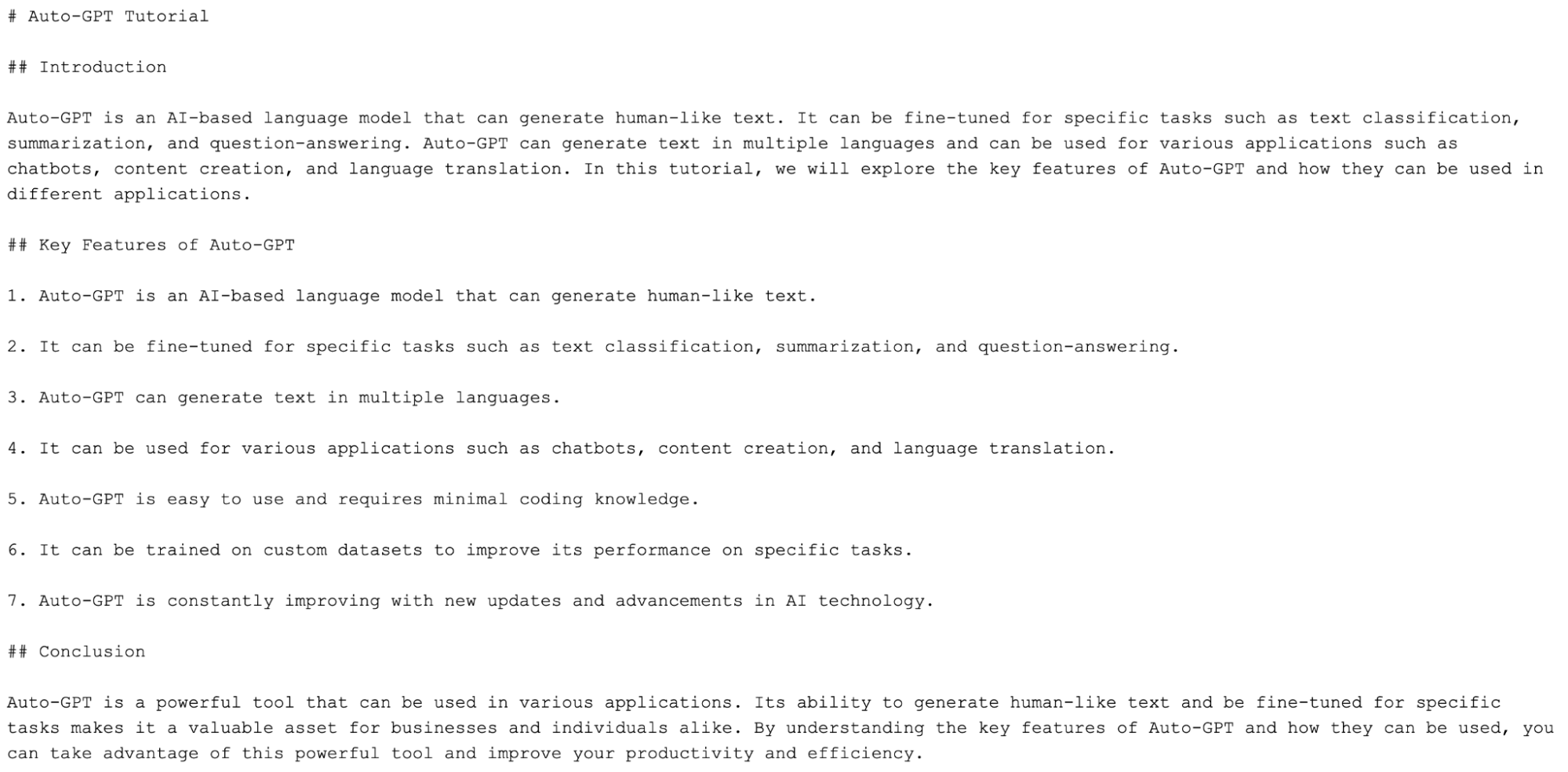

After a few minutes of Auto-GPT attempting to write a tutorial, it produced this tutorial file.

It isn't a good tutorial, or even a factually correct one. As ever when using AI, you need to be very precise about your requirements in your prompts and pay close attention to what the AI is doing.

Take it to the Next Level

AI agents like Auto-GPT, AgentGPT, and BabyAGI represent a significant leap in the realm of AI, bringing us closer to the vision of truly autonomous AI systems. As these agents work towards goals with minimal human intervention, they open up a world of potential applications, from personal assistants to problem solvers.

To stay ahead in this rapidly changing landscape, we encourage you to expand your knowledge and skills in data science, machine learning, and AI. Check out the following resources to learn more:

Introduction to ChatGPT Course

Get Started with ChatGPT

How to Become a Prompt Engineer: A Comprehensive Guide
