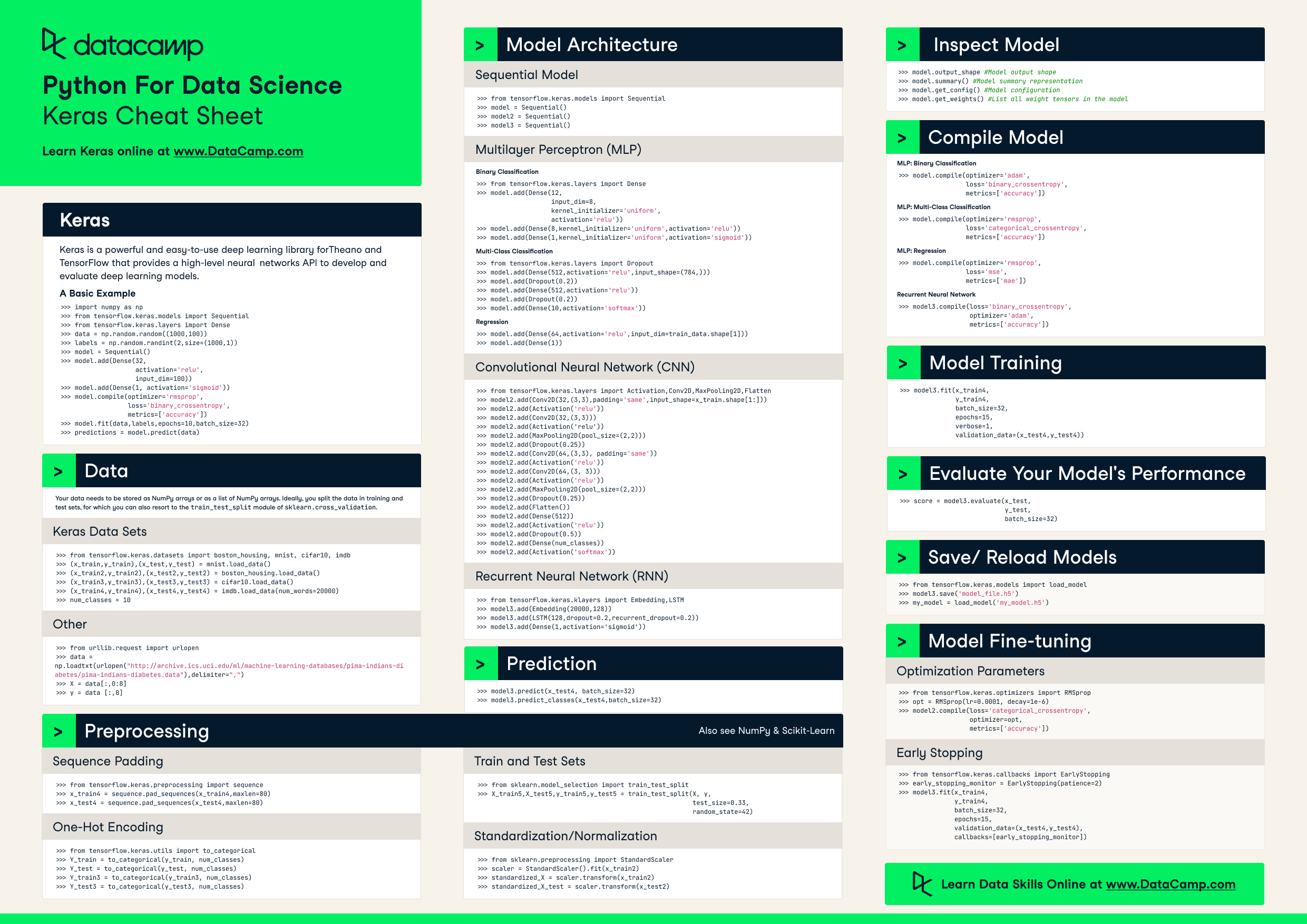

Keras Cheat Sheet: Neural Networks in Python

Build your own neural networks with this Keras cheat sheet for deep learning in Python, aimed at beginners and complete with code samples.

Jun 2021 · 5 min read

Related

cheat sheet

Scikit-Learn Cheat Sheet: Python Machine Learning

A handy scikit-learn cheat sheet for machine learning with Python, including some code examples.

Karlijn Willems

4 min

cheat sheet

Deep Learning with PyTorch Cheat Sheet

Learn everything you need to know about PyTorch in this convenient cheat sheet.

Richie Cotton

6 min

cheat sheet

Python for Data Science - A Cheat Sheet for Beginners

This handy one-page reference presents the Python basics that you need to do data science.

Karlijn Willems

4 min

tutorial

Keras Tutorial: Deep Learning in Python

This Keras tutorial introduces you to deep learning in Python: learn to preprocess your data, model, evaluate and optimize neural networks.

Karlijn Willems

43 min

tutorial

Convolutional Neural Networks in Python with Keras

In this tutorial, you’ll learn how to implement Convolutional Neural Networks (CNNs) in Python with Keras, and how to overcome overfitting with dropout.

Aditya Sharma

30 min

tutorial

Python For Data Science - A Cheat Sheet For Beginners

This handy one-page reference presents the Python basics that you need to do data science.

Karlijn Willems

7 min