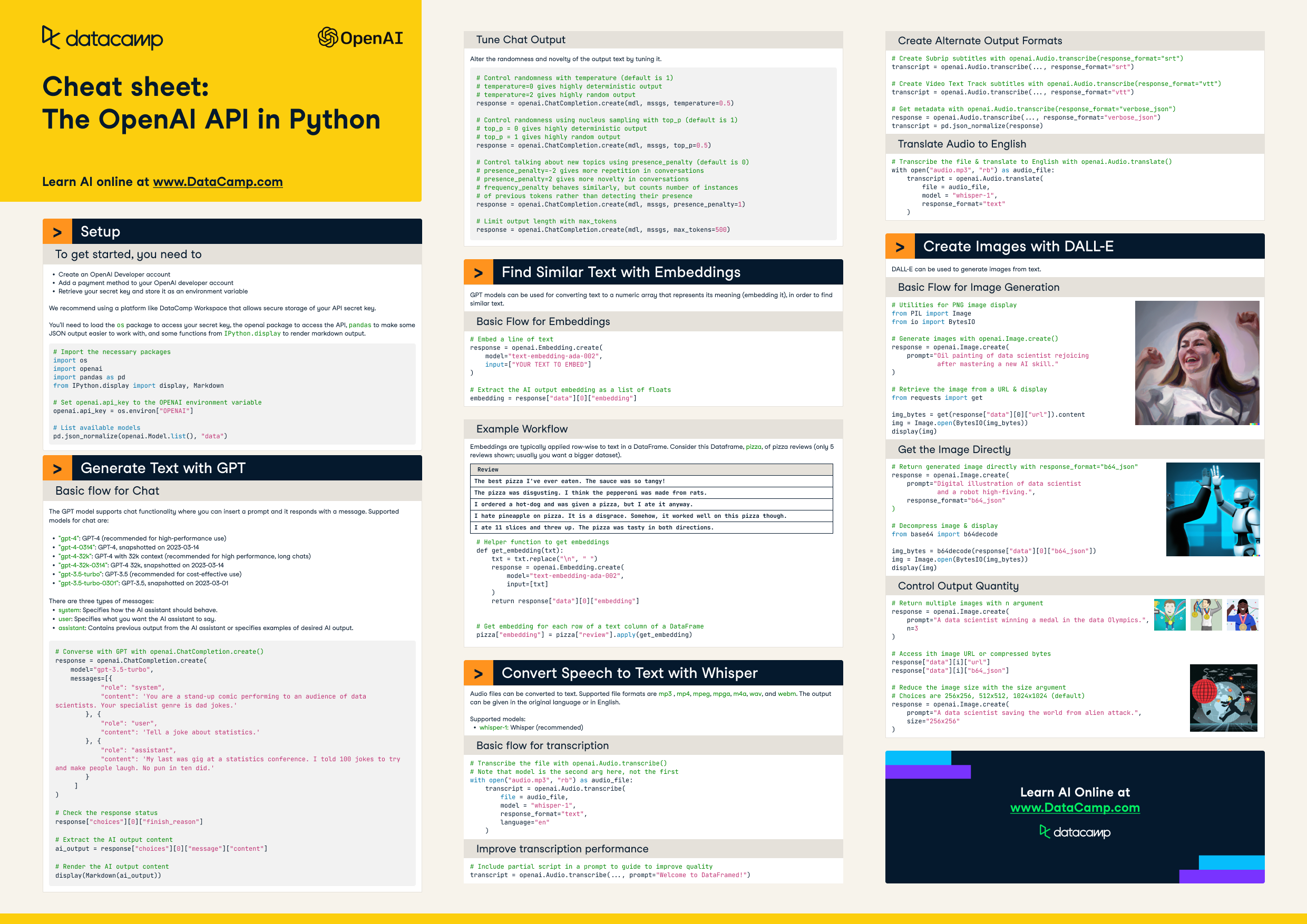

The OpenAI API in Python

ChatGPT and large language models have taken the world by storm. In this cheat sheet, learn the basics on how to leverage one of the most powerful AI APIs out there, then OpenAI API.

Apr 2023 · 3 min read

RelatedSee MoreSee More

tutorial

Using GPT-3.5 and GPT-4 via the OpenAI API in Python

In this tutorial, you'll learn how to work with the OpenAI Python package to programmatically have conversations with ChatGPT.

Richie Cotton

14 min

tutorial

Fine-Tuning GPT-3 Using the OpenAI API and Python

Unleash the full potential of GPT-3 through fine-tuning. Learn how to use the OpenAI API and Python to improve this advanced neural network model for your specific use case.

Zoumana Keita

12 min

tutorial

GPT-4o API Tutorial: Getting Started with OpenAI's API

To connect through the GPT-4o API, obtain your API key from OpenAI, install the OpenAI Python library, and use it to send requests and receive responses from the GPT-4o models.

Ryan Ong

8 min

code-along

Getting Started with the OpenAI API and ChatGPT

Get an introduction to the OpenAI API and the GPT-3 model.

Richie Cotton

code-along

Fine-tuning GPT3.5 with the OpenAI API

In this code along, you'll learn how to use the OpenAI API and Python to get started fine-tuning GPT3.5.

Zoumana Keita

code-along

Introduction to Large Language Models with GPT & LangChain

Learn the fundamentals of working with large language models and build a bot that analyzes data.

Richie Cotton