
An Introduction to Data Orchestration: Process and Benefits

An integral part of driving any successful business involves gathering data, identifying inconsistencies, handling them, storing the data in an integrated platform, and using it to make informed decisions.

But imagine doing all these tasks manually each time you run a project. Over 40% of workers spend at least a quarter of their workweek on data collection and entry tasks, and manual handling often introduces data entry errors or corruption along the way.

That’s where data orchestration shines. This article explains what exactly data orchestration is and why it is crucial. We’ll also explore some popular data orchestration tools to help you get started.

What is Data Orchestration?

Data orchestration is a method or a tool that manages data-related activities. The tasks include gathering data, performing quality checks, moving data across systems, automating workflows, and more.

Put simply, data orchestration is the process of combining siloed data from different locations, preparing it, and presenting it for analysis.

Data engineers no longer need to hand-write custom scripts to coordinate every ETL task. Instead, data orchestration tools collect and organize the data, making it readily accessible to analytics tools.

Data orchestration simplifies things by:

- Unifying disparate data sources

- Executing workflow events in the right order

- Transforming data into the desired format

- Automating data flow between various storage platforms

Why is Data Orchestration Important?

Image by Author

Before data orchestration, data engineers had to manually pull unstructured data from APIs, spreadsheets, and databases. Then, they would clean that data, standardize the format, and send it to target systems.

According to one recent study, 95% of businesses find this process difficult, especially with unstructured data. Data orchestration automates these tasks: it handles cleaning and preparing the data and ensures that data flows between systems in the right order.

Orchestration is often described as centralizing data, but what if you can't afford a single large storage system to hold it all? In that case, data orchestration can fetch data directly from where it is stored, often in real time, so you don't need one massive central store.

Also, data orchestration improves data quality. Take data transformation, for instance. This step is all about converting data into a standard format and ensuring consistency and accuracy across the systems involved.

Another big advantage of data orchestration is its ability to manage and process data in real time. Dynamic pricing, stock trading and prediction, and customer behavior analysis all depend on real-time data processing, and data orchestration simplifies them.

Finally, data orchestration is essential for anyone dealing with big data and frequent data streaming tasks, especially for companies managing multiple storage systems.

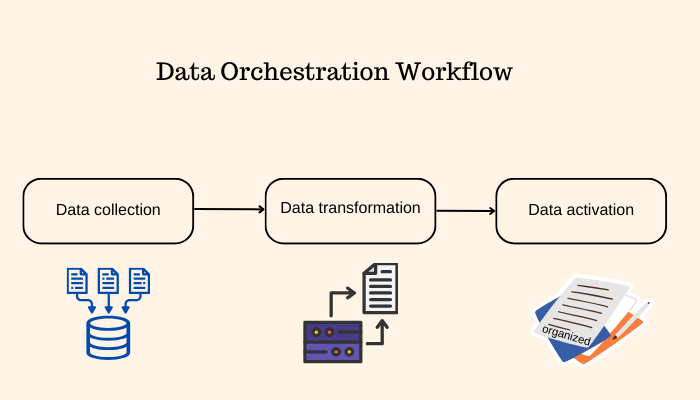

How Data Orchestration Works

The three main steps of data orchestration include data collection, preparation, and activation. Let’s explore them in detail.

Image by Author

Data collection and preparation

Data is rarely ready for analysis straight out of a CSV file. First, you have to collect raw data from disparate sources such as websites, APIs, and databases.

So, the first stage of orchestration involves collecting data from these different sources, performing integrity and accuracy checks, and then preparing it for the next step.
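To make the collection step concrete, here is a minimal Python sketch that pulls records from three stand-in sources and applies a simple integrity check. The sources are assumptions for illustration: an in-memory CSV string plays a spreadsheet export, a JSON string plays an API response, and an in-memory SQLite table plays an operational database. The integrity rule (every record needs an id and a non-empty email) is likewise hypothetical.

```python
import csv
import io
import json
import sqlite3

# Hypothetical stand-ins for real sources.
CSV_EXPORT = "id,email\n1,ana@example.com\n2,\n"          # e.g. a spreadsheet export
API_RESPONSE = '[{"id": 3, "email": "raj@example.com"}]'  # e.g. the body of an API call


def from_csv(text):
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]


def from_api(body):
    return json.loads(body)


def from_database(conn):
    conn.row_factory = sqlite3.Row  # lets each row be converted to a dict by column name
    return [dict(row) for row in conn.execute("SELECT id, email FROM customers")]


def passes_integrity_check(record):
    """Minimal check: a record needs an id and a non-empty email."""
    return record.get("id") not in (None, "") and bool(record.get("email"))


def collect(conn):
    raw = from_csv(CSV_EXPORT) + from_api(API_RESPONSE) + from_database(conn)
    good = [r for r in raw if passes_integrity_check(r)]
    rejected = [r for r in raw if not passes_integrity_check(r)]
    return good, rejected


# An in-memory SQLite table plays the role of an operational database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, email TEXT)")
conn.execute("INSERT INTO customers VALUES (4, 'li@example.com')")

good, rejected = collect(conn)
print(len(good), "records ready;", len(rejected), "rejected:", rejected)
```

Notice that the IDs arrive as strings from the CSV and as integers from the other two sources, which is the kind of inconsistency the transformation step has to resolve.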

Data transformation

Different systems often represent the same field differently. For example, your customer relationship management (CRM) software might store customer IDs as numbers, while the finance database stores them as strings.

To avoid such inconsistencies, data orchestration tools apply transformations that standardize the data format, ensuring consistent and reliable data.
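Here is a minimal Python sketch of such a transformation, based on the CRM and finance example above. The record layouts and the canonical ID format (`"C"` followed by five digits) are hypothetical choices for illustration; the point is that both representations are mapped to one format before the data is joined.

```python
# Hypothetical records: the CRM stores customer IDs as integers,
# the finance database stores them as zero-padded strings.
crm_rows = [{"customer_id": 42, "name": "Ana"}]
finance_rows = [{"customer_id": "00042", "balance": "120.50"}]


def normalize_customer_id(value):
    """Map either representation to one canonical string: 42 and "00042" both become "C00042"."""
    return f"C{int(value):05d}"


def standardize(rows):
    # Return new records with the ID rewritten; the other fields are left untouched.
    return [{**row, "customer_id": normalize_customer_id(row["customer_id"])} for row in rows]


crm = standardize(crm_rows)
finance = standardize(finance_rows)

# Once both systems use the same key format, their records can be joined.
joined = [
    {**c, **f}
    for c in crm
    for f in finance
    if c["customer_id"] == f["customer_id"]
]
print(joined)
```

Without the normalization step, the join would find no matches, because `42` and `"00042"` never compare equal.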

Data activation

This last phase of orchestration puts the data to operational use. Activated data is the polished data that is ready to use, either by analytics teams or tools.

You can easily analyze this data to discover patterns, trends, and challenges across your marketing, finance, sales, and customer support departments.

You can use these findings to design personalized content for your visitors, quote customized pricing plans, and deliver premium customer service for targeted audiences.

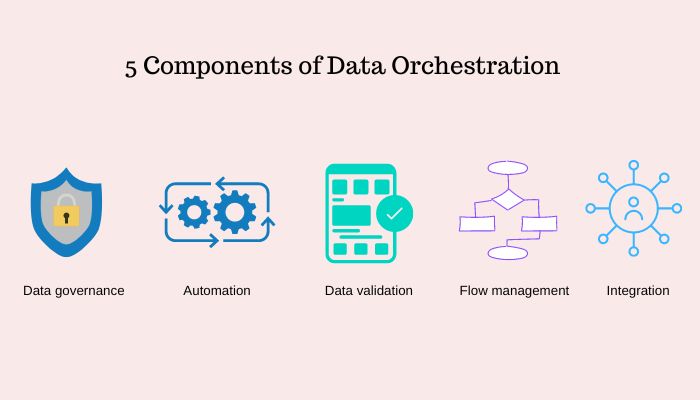

Key Considerations of Data Orchestration

Orchestration offers many opportunities in data organization and analysis. Here are a few factors that data orchestration streamlines:

Image by Author

Automation

The primary purpose of data orchestration is to automate various tasks involved in handling large volumes of data. For instance, in data integration, data orchestration tools automate the collection and combination of data from multiple sources.

During data transformation, the tools will convert different data formats into a single, consistent format. They also automate the movement of data across systems and pipelines.

Overall, data orchestration automates tasks involved in bringing data together, standardizing its format, cleaning it for analysis, and maintaining accurate data.

Data integration

Integration involves combining data from multiple locations into a centralized repository to create a unified view of your data. Orchestration eases this process by collecting data at regular intervals or based on triggers you've set.

For example, you can set a rule in the orchestration tool to collect and integrate data automatically whenever there is an update in the pipeline.
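The "integrate whenever there is an update" rule can be sketched as a watermark check: remember the last update you integrated from each source, and run the integration step only for sources that have changed since. This is a minimal, tool-agnostic illustration; `Source`, `UpdateTrigger`, and `load_into_warehouse` are hypothetical names, and a real orchestrator would run the check on a schedule or in response to an event rather than by direct calls.

```python
from dataclasses import dataclass, field


@dataclass
class Source:
    """A stand-in for a data source that reports when it last changed."""
    name: str
    last_updated: float  # e.g. a Unix timestamp
    rows: list = field(default_factory=list)


class UpdateTrigger:
    """Run an integration step only for sources that changed since the previous check."""

    def __init__(self):
        self.watermarks = {}  # source name -> last_updated value already integrated

    def check(self, sources, integrate):
        triggered = []
        for src in sources:
            if src.last_updated > self.watermarks.get(src.name, float("-inf")):
                integrate(src)
                self.watermarks[src.name] = src.last_updated
                triggered.append(src.name)
        return triggered


warehouse = []  # the "centralized repository" in this sketch


def load_into_warehouse(src):
    warehouse.extend(src.rows)


trigger = UpdateTrigger()
crm = Source("crm", last_updated=100.0, rows=[{"id": 1}])
erp = Source("erp", last_updated=100.0, rows=[{"id": 2}])

first = trigger.check([crm, erp], load_into_warehouse)   # both are new
crm.last_updated, crm.rows = 200.0, [{"id": 3}]          # only the CRM changes
second = trigger.check([crm, erp], load_into_warehouse)  # only the CRM is integrated
print(first, second, len(warehouse))
```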

Data flow management

Data orchestration automates workflows by scheduling tasks across pipelines. It automates the flow of data between different systems, coordinating the right sequence of operations.

Now, how does it ensure tasks run in the right order? Orchestration tools let you declare the dependencies between tasks and define custom triggers to schedule them.

For example, you can define a fixed sequence for data flow, such as pipeline 1 to pipeline 2 and then to pipeline 3, or set custom conditions: if the data meets certain criteria, move it to pipeline 2; otherwise, send it to pipeline 3.
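Both patterns, a fixed step followed by a conditional branch, fit in a few lines of plain Python. This is a minimal sketch, not any tool's API: the three pipeline functions are hypothetical stand-ins, and the routing criterion (transactions of 1,000 or more go to pipeline 2) is an assumption chosen for illustration.

```python
def pipeline_1(record):
    # Hypothetical first stage: parse the amount into a number.
    return {**record, "amount": float(record["amount"])}


def pipeline_2(record):
    return {**record, "route": "pipeline_2"}


def pipeline_3(record):
    return {**record, "route": "pipeline_3"}


def meets_criteria(record):
    # Hypothetical criterion: large transactions get special handling.
    return record["amount"] >= 1000


def run(record):
    staged = pipeline_1(record)  # fixed step: always runs first
    # Conditional step: the data itself decides where it goes next.
    return pipeline_2(staged) if meets_criteria(staged) else pipeline_3(staged)


results = [run(r) for r in [{"id": 1, "amount": "2500"}, {"id": 2, "amount": "40"}]]
print([r["route"] for r in results])  # ['pipeline_2', 'pipeline_3']
```

Orchestration tools express the same idea declaratively, but the underlying logic is this: a fixed order for some steps and a data-dependent choice for others.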

Data governance

Data governance refers to maintaining the availability, quality, and security of your organization’s data, a concept that varies according to the industry, domain, and location.

For example, extracting and storing only necessary data and deleting it after usage can be considered a data governance policy.

Data orchestration tracks the source of data, what data is stored, and how it is transformed throughout the lifecycle. These records will help you adhere to the governance policies and regulations such as GDPR and CCPA.
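The kind of record keeping described here, where data came from, what was stored, and how it changed, is often called lineage. Below is a minimal sketch, assuming a decorator-based approach and an in-memory list as the metadata store; `tracked`, `lineage_log`, and the `crm.customers` source name are hypothetical. The example step also shows a simple governance policy in action: dropping fields the analysis does not need.

```python
import functools
from datetime import datetime, timezone

# In practice this would be a durable metadata store; a list keeps the sketch small.
lineage_log = []


def tracked(step, source):
    """Record which step ran on which source, and what the data looked like afterwards."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(rows):
            result = func(rows)
            lineage_log.append({
                "step": step,
                "source": source,
                "rows_in": len(rows),
                "rows_out": len(result),
                "columns_out": sorted({key for row in result for key in row}),
                "ran_at": datetime.now(timezone.utc).isoformat(),
            })
            return result
        return wrapper
    return decorator


@tracked(step="keep_needed_fields", source="crm.customers")
def minimize(rows):
    # Data minimization: keep only the fields the analysis actually needs.
    return [{"customer_id": r["customer_id"], "country": r["country"]} for r in rows]


minimize([{"customer_id": "C00042", "country": "DE", "phone": "555-0100"}])
print(lineage_log[-1]["step"], lineage_log[-1]["columns_out"])
```

An auditor reading this log can see that the `phone` field was dropped at the `keep_needed_fields` step, which is the kind of evidence governance reviews ask for.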

Data validation

Data orchestration regularly validates your data for quality and accuracy. Certain orchestration tools include built-in validators for basic quality checks.

For example, a data type validator checks whether the values in the name column are stored as strings. The tools also let you set custom validation rules specific to your needs.
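Here is a minimal Python sketch of both kinds of check: a reusable type rule like the name-column example, plus a custom rule. The rule format (a name paired with a predicate) and the age range are assumptions for illustration, not any specific tool's validator API.

```python
def type_rule(column, expected_type):
    """Built-in style check: the column's value must have the expected type."""
    return (
        f"{column} is {expected_type.__name__}",
        lambda row: isinstance(row.get(column), expected_type),
    )


def validate(rows, rules):
    """Return (row index, rule name) for every rule a row fails."""
    return [
        (i, rule_name)
        for i, row in enumerate(rows)
        for rule_name, check in rules
        if not check(row)
    ]


rules = [
    type_rule("name", str),
    # Hypothetical custom rule specific to this dataset.
    ("age is between 0 and 130",
     lambda row: isinstance(row.get("age"), int) and 0 <= row["age"] <= 130),
]

rows = [{"name": "Ana", "age": 34}, {"name": 17, "age": 34}, {"name": "Raj", "age": -1}]
failures = validate(rows, rules)
print(failures)  # [(1, 'name is str'), (2, 'age is between 0 and 130')]
```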

Implementing Data Orchestration

We've looked at how data orchestration works in the earlier sections. Here is how to start the process, along with some key tips for selecting the right data orchestration tool.

Planning and assessing your needs

The first step in the orchestration process is to define your goals. Determine what you want to achieve through this process, whether it’s scheduling workflows, unifying data, or maintaining quality data.

Start by evaluating your current data infrastructure: find inconsistencies, identify which data tasks consume the most time, and check how easily your analytics team or tools can access the current data. Is it easy for them, or do they run into difficulties?

Identify all these pain points and define the goals of orchestration that can address these issues.

Selecting the right tools

Once you’ve decided on the goals, the next step is to find the tools that can implement data orchestration mechanisms in your systems. Here are a few considerations to help you select the right data orchestration tool:

- Choose a flexible tool that allows easy updates and deployments in the future.

- As you need to handle data from multiple sources, make sure the chosen orchestration tool integrates well with your various data warehouses, analytics platforms, pipelines, and more.

- Consider the growth of your business. Make sure you pick a tool that can scale with your data needs.

- A user-friendly tool with built-in editors helps you easily design workflows, schedule tasks, set access controls, and more.

Data Orchestration Common Challenges

Data orchestration is becoming an essential asset for speeding up and scheduling data tasks, but it brings challenges as well as advantages.

Here are a few challenges that might need attention while implementing data orchestration in your systems.

- Security: As data moves throughout the orchestration process, it’s important to implement security measures like SSL/TLS encryption, data access controls, multi-factor authentication, and more.

- Real-time data streaming: Real-time data is in growing demand. As such, your data orchestration process needs to be quick in moving data between pipelines with minimal delays.

- Resource management: During parallel execution, different processes might compete for the same computing resources or infrastructure, so prioritizing tasks and allocating resources correctly can be challenging.

- Talent requirements: You’ll need to hire or train skilled data professionals to install and set up data orchestration tools and techniques within your systems.

- Data silos: It’s common that your finance data isn’t accessible to the marketing teams, and HR data isn’t available to the tech department. This limited access can restrict interactions between data pipelines.
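One common way to handle the resource-management challenge is to cap how many tasks may use a shared resource at once and to hand out the limited slots by priority. The sketch below uses Python's `concurrent.futures.ThreadPoolExecutor` for the cap; the task names, priorities, and the limit of two concurrent loads are hypothetical. It relies on the pool picking up queued work first-in, first-out, which is how CPython's implementation behaves, so submitting in priority order lets urgent tasks start first.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor

MAX_PARALLEL = 2  # hypothetical cap, e.g. the warehouse accepts only two concurrent loads

_lock = threading.Lock()
active = 0
peak = 0


def run_task(name):
    """Stand-in task that occupies one slot of the shared resource for a moment."""
    global active, peak
    with _lock:
        active += 1
        peak = max(peak, active)
    time.sleep(0.05)  # simulated work
    with _lock:
        active -= 1
    return name


# (priority, task name): a lower number means more urgent.
tasks = [
    (3, "refresh_dashboards"),
    (1, "load_transactions"),
    (2, "rebuild_features"),
    (1, "load_inventory"),
]

# The pool never runs more than MAX_PARALLEL tasks at once; queued work is
# picked up in submission order, so sorting by priority first lets urgent
# tasks claim the limited slots first.
with ThreadPoolExecutor(max_workers=MAX_PARALLEL) as pool:
    futures = [pool.submit(run_task, name) for _, name in sorted(tasks)]
    done = [future.result() for future in futures]

print(done, "peak concurrency:", peak)
```

Real orchestrators offer the same idea as configuration (for example, pools or concurrency limits), but the trade-off is identical: a lower cap protects the shared resource at the cost of longer queues.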

Strategies for Effective Data Orchestration

1. Be specific with your goals

Defining clear goals keeps your workflows and the orchestration process on track and aligned with your objectives.

2. Define clear data quality metrics

If data format, structure, and accuracy matter to you, monitor metrics for them throughout the orchestration process to maintain data quality.

3. Use managed cloud solutions

Managed cloud solutions are third-party services that make it easy to connect data orchestration tools with the other platforms your organization uses.

4. Implement data security measures

Apply standard security protocols and data governance policies to keep your data secure throughout the orchestration lifecycle.

5. Select the right tools

Consider scalability, ease of use, integrations, security features, and more when choosing an orchestration tool.
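Strategy 2 is easier to act on when the metrics are concrete numbers with thresholds. Below is a minimal sketch of two common metrics, completeness (the share of rows where a column is filled in) and validity (the share of rows where it matches an expected format). The column formats, the simplified email pattern, and the threshold values are all hypothetical and would come from your own requirements.

```python
import re

# Hypothetical thresholds agreed with the teams that consume the data.
THRESHOLDS = {"completeness": 0.95, "validity": 0.90}

# Hypothetical format rules per column (the email pattern is deliberately simple).
FORMAT_CHECKS = {
    "customer_id": lambda v: bool(re.fullmatch(r"C\d{5}", v or "")),
    "email": lambda v: bool(re.fullmatch(r"[^@\s]+@[^@\s]+\.[a-z]+", v or "")),
}


def quality_metrics(rows):
    """Share of rows that are filled in (completeness) and well-formed (validity), per column."""
    total = len(rows)
    return {
        "completeness": {
            col: sum(r.get(col) not in (None, "") for r in rows) / total for col in FORMAT_CHECKS
        },
        "validity": {
            col: sum(check(r.get(col)) for r in rows) / total for col, check in FORMAT_CHECKS.items()
        },
    }


def breaches(metrics):
    """List every metric that falls below its threshold."""
    return sorted(
        f"{kind}.{col}={value:.2f}"
        for kind, per_column in metrics.items()
        for col, value in per_column.items()
        if value < THRESHOLDS[kind]
    )


batch = [
    {"customer_id": "C00001", "email": "ana@example.com"},
    {"customer_id": "C00002", "email": "raj@example"},
    {"customer_id": "C00003", "email": ""},
    {"customer_id": "C00004", "email": "li@example.com"},
]
print(breaches(quality_metrics(batch)))  # ['completeness.email=0.75', 'validity.email=0.50']
```

Running a check like this on every batch, and alerting or halting the workflow on a breach, is one way to monitor quality throughout the orchestration process rather than only at the end.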

Data Orchestration Case Studies

Many data-driven companies today use data orchestration tools and techniques to handle big data effectively. Here are a few use cases:

Hybrid environments

Many organizations keep their data in the cloud and their computing resources in-house, or the other way around. Delays occur in these setups because on-premises tools must interact with data on cloud servers. Data orchestration fills this gap, helping the two environments communicate and work together as if they were one.

Real-time data streaming

80% of Netflix's streaming time comes through its recommender system. This recommender system relies on Netflix Maestro, a workflow orchestrator built to run Netflix's very large-scale data workflows.

E-commerce

Keeping customer behavior, inventory, financial transaction, ad display, and product recommendation data on separate platforms makes it hard for e-commerce businesses to derive insights. That's why e-commerce businesses use data orchestration to unify this data and draw actionable insights.

Overview of Popular Orchestration Tools

Orchestration tools automate and streamline data orchestration tasks and techniques. They handle everything from data collection to activation, reducing human errors and improving efficiency and speed.

The market is flooded with options, so we’ve done the research for you and picked some popular data orchestration tools:

Image by Author

Apache Airflow

Apache Airflow is an open-source orchestration tool for creating, scheduling, and monitoring workflows or pipelines using Python. Its workflows are represented as Directed Acyclic Graphs (DAGs), which organize tasks and the dependencies between them.

Breaking down DAGs:

- Directed: the relationships between tasks have a direction, so tasks are connected along a defined path. For example, if task 1 depends on task 2, task 1 runs only after task 2 completes.

- Acyclic: there are no loops in the dependencies. For example, if task 1 relied on task 2 and task 2 relied on task 1, each would wait for the other forever. Ruling out such loops keeps tasks running smoothly.
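Both properties can be demonstrated with Python's standard-library `graphlib` module (Python 3.9+). This is not Airflow's API; it is a small sketch of the ordering and cycle check that any DAG-based scheduler relies on. The task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Each task maps to the set of tasks it depends on (its predecessors).
workflow = {
    "transform": {"extract_crm", "extract_finance"},
    "validate": {"transform"},
    "load": {"validate"},
}

# static_order() yields every task after all of its dependencies ("directed").
order = list(TopologicalSorter(workflow).static_order())
print(order)  # the two extract tasks come first, in either order

# A cycle (task_1 needs task_2, task_2 needs task_1) can never be scheduled ("acyclic").
cyclic = {"task_1": {"task_2"}, "task_2": {"task_1"}}
try:
    list(TopologicalSorter(cyclic).static_order())
    cycle_found = False
except CycleError as err:
    cycle_found = True
    print("cycle detected:", err.args[1])
```

Rejecting the cyclic graph up front, instead of letting two tasks wait on each other forever, is exactly why DAG-based tools require the graph to be acyclic.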

Airflow's user interface lets you quickly navigate to specific DAGs and track the status and logs of individual tasks.

Prefect

Prefect is another Python-based orchestration tool used to build and automate workflows between data pipelines. With Prefect, you can break complex task dependencies and sequences down into organized sub-flows.

Prefect's features include executing tasks at runtime, running workflows in hybrid environments, caching the results of frequently executed pipelines, and more.
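To illustrate what result caching buys you, here is a minimal plain-Python sketch: a task called again with the same inputs returns its stored result instead of re-running. This is not Prefect's API (Prefect configures caching through its own task options); `cached_task`, `daily_sales`, and the in-memory cache are hypothetical, and a real cache would also need an expiry policy and persistent storage.

```python
import functools
import json

_results = {}    # cache: (task name, serialized inputs) -> result
executions = []  # records real runs, to show when the cache is bypassed


def cached_task(func):
    """Return a stored result when the task is called again with the same inputs."""
    @functools.wraps(func)
    def wrapper(*args):
        key = (func.__name__, json.dumps(args))
        if key not in _results:
            _results[key] = func(*args)
        return _results[key]
    return wrapper


@cached_task
def daily_sales(region, day):
    executions.append((region, day))
    # Stand-in for an expensive query against a warehouse.
    return {"region": region, "day": day, "total": 0.0}


daily_sales("EU", "2024-05-01")
daily_sales("EU", "2024-05-01")  # served from the cache, no second execution
daily_sales("US", "2024-05-01")
print(len(executions))  # 2
```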

Keboola

Keboola is a cloud-based platform for designing and executing data pipelines. With Keboola extractors, you can fetch data from a wide range of sources and load it into the platform. Once the data is in, you can use the tool's transformations to standardize it.

Keboola's "Applications" component handles more advanced data manipulation and transformation tasks.

Dagster

Dagster is an open-source data orchestrator that simplifies creating and maintaining data pipelines. Inspired by Airflow, the tool allows you to create workflow DAGs using a Python-based domain-specific language (DSL).

This way, developers can easily define their dependencies and transformations using the tool.
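To give a feel for what "defining dependencies in a Python-based DSL" means, here is a toy sketch in plain Python in which a step declares its upstream dependencies simply by naming them as function parameters. The `asset` decorator, `REGISTRY`, and `materialize` function are hypothetical and are not Dagster's API; the sketch also omits cycle detection, scheduling, and storage.

```python
import inspect

REGISTRY = {}


def asset(func):
    """Register a step; its parameter names declare which steps it depends on."""
    REGISTRY[func.__name__] = func
    return func


@asset
def raw_orders():
    # Stand-in for an extraction step.
    return [{"id": 1, "amount": 30}, {"id": 2, "amount": 70}]


@asset
def order_total(raw_orders):
    # Depends on raw_orders simply by naming it as a parameter.
    return sum(order["amount"] for order in raw_orders)


def materialize(name, results=None):
    """Compute a step after recursively computing the steps it depends on."""
    results = {} if results is None else results
    if name not in results:
        func = REGISTRY[name]
        deps = inspect.signature(func).parameters
        results[name] = func(**{dep: materialize(dep, results) for dep in deps})
    return results[name]


print(materialize("order_total"))  # 100
```

The appeal of this style is that the dependency graph lives next to the transformation code itself, so there is no separate wiring to keep in sync.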

Conclusion

Data orchestration simplifies the process of building automated data workflows by handling data collection, data transformation, and data movement tasks involved in maintaining pipelines. This makes it easier for companies to handle big data, execute ETL tasks, and scale ML deployments.

In this tutorial, we walked through all you need to get started with data orchestration, a widely practiced technique among data engineers today. To continue learning, check out these resources:

Srujana is a freelance tech writer with a four-year degree in Computer Science. Writing about various topics, including data science, cloud computing, development, programming, security, and many others, comes naturally to her. She has a love for classic literature and exploring new destinations.
