Related

blog

DataCamp's new workspace product lets you start your own data analysis in seconds

Doing data science work should be easy, enjoyable, and collaborative. That’s why we’re building a collaboration product for data professionals and data-fluent teams.

Jonathan Cornelissen

6 min

blog

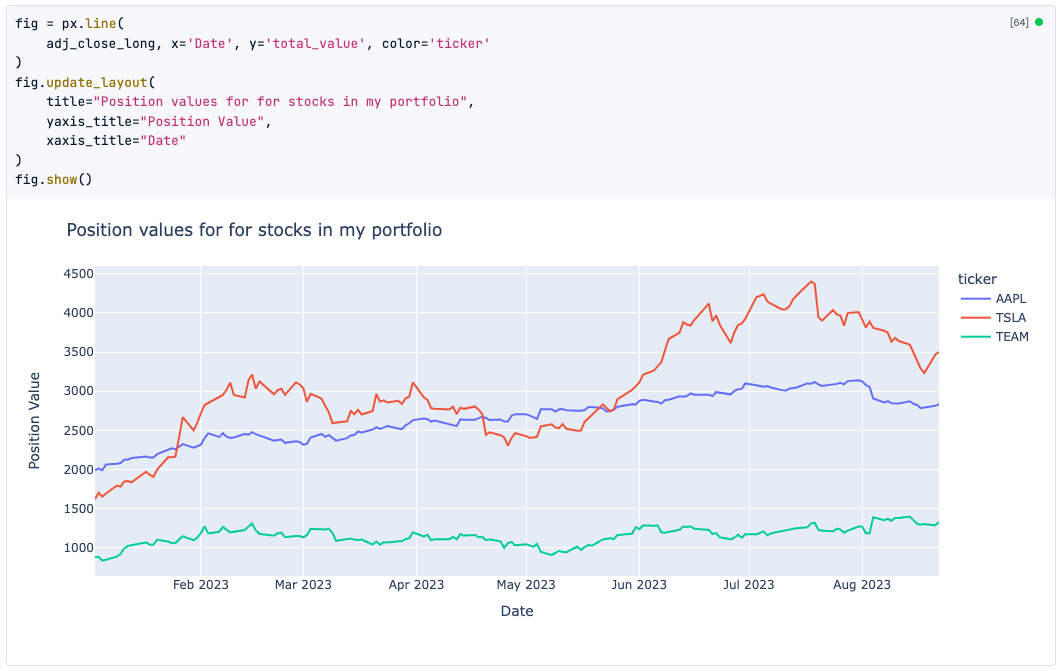

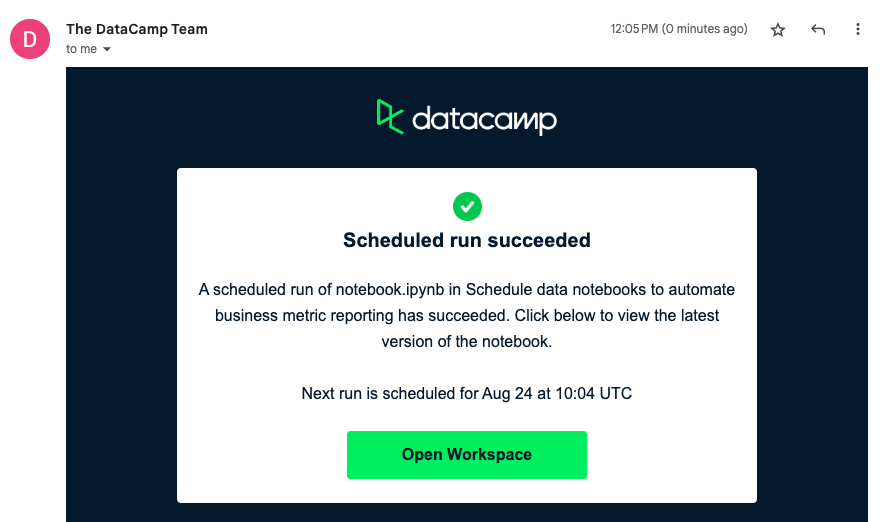

How I Saved Months Automating My Reporting with DataLab

Discover how Will, a Data Analyst at Starting Point, built his data skills using DataCamp and then automated his reporting using DataLab, saving him weeks of effort.

Rhys Phillips

6 min

blog

Introducing DataLab

DataCamp is launching DataLab, an AI-enabled data notebook to make it easier and faster than ever before to go from data to insight. Read on to learn more about what makes DataLab unique and our path towards it.

Filip Schouwenaars

3 min

blog

10 Ways to Speed Up Your Analysis With the DataLab AI Assistant

Learn how to leverage the Generate feature inside DataLab to speed up your workflow!

Justin Saddlemyer

11 min

code-along

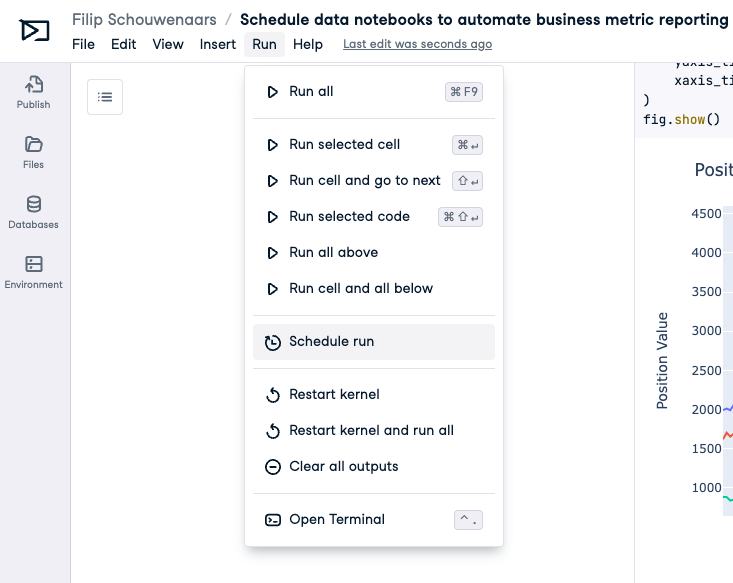

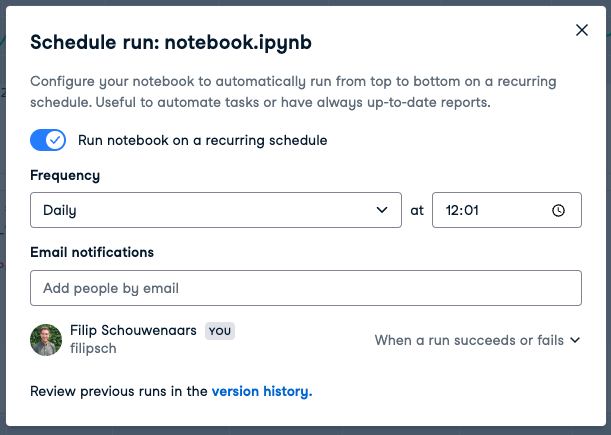

Automate Business Metric Reporting with DataLab

In this live training aimed at aspiring and active data analysts, we’ll showcase this workflow by reporting on bicycle sales and inventory data stored in a Microsoft SQL Server database.

Filip Schouwenaars

code-along

Using DataLab in Data Academies

Learn how to use DataCamp Workspace as part of a corporate training program.

Filip Schouwenaars