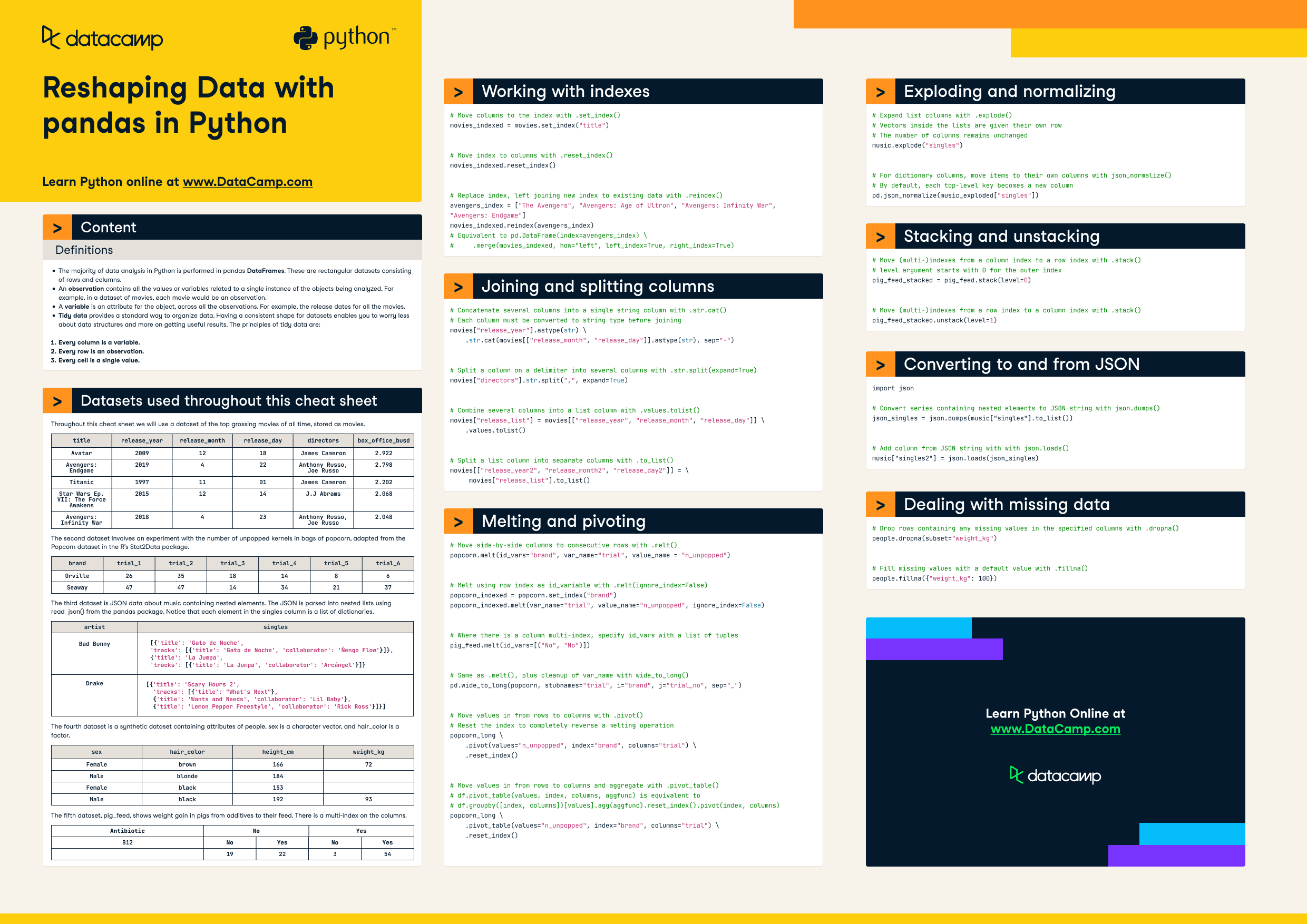

Reshaping Data with pandas in Python

Pandas DataFrames are commonly used in Python for data analysis, with observations containing values or variables related to a single object and variables representing attributes across all observations.

Feb 2023

RelatedSee MoreSee More

cheat sheet

Pandas Cheat Sheet for Data Science in Python

A quick guide to the basics of the Python data analysis library Pandas, including code samples.

Karlijn Willems

4 min

cheat sheet

Pandas Cheat Sheet: Data Wrangling in Python

This cheat sheet is a quick reference for data wrangling with Pandas, complete with code samples.

Karlijn Willems

4 min

tutorial

Pandas Tutorial: DataFrames in Python

Explore data analysis with Python. Pandas DataFrames make manipulating your data easy, from selecting or replacing columns and indices to reshaping your data.

Karlijn Willems

20 min

tutorial

Data Preparation with pandas

In this tutorial, you will learn why it is important to pre-process data and how to do it in pandas.

Sejal Jaiswal

15 min

tutorial

Python Select Columns Tutorial

Use Python Pandas and select columns from DataFrames. Follow our tutorial with code examples and learn different ways to select your data today!

DataCamp Team

7 min

tutorial

Python Exploratory Data Analysis Tutorial

Learn the basics of Exploratory Data Analysis (EDA) in Python with Pandas, Matplotlib and NumPy, such as sampling, feature engineering, correlation, etc.

Karlijn Willems

30 min