course

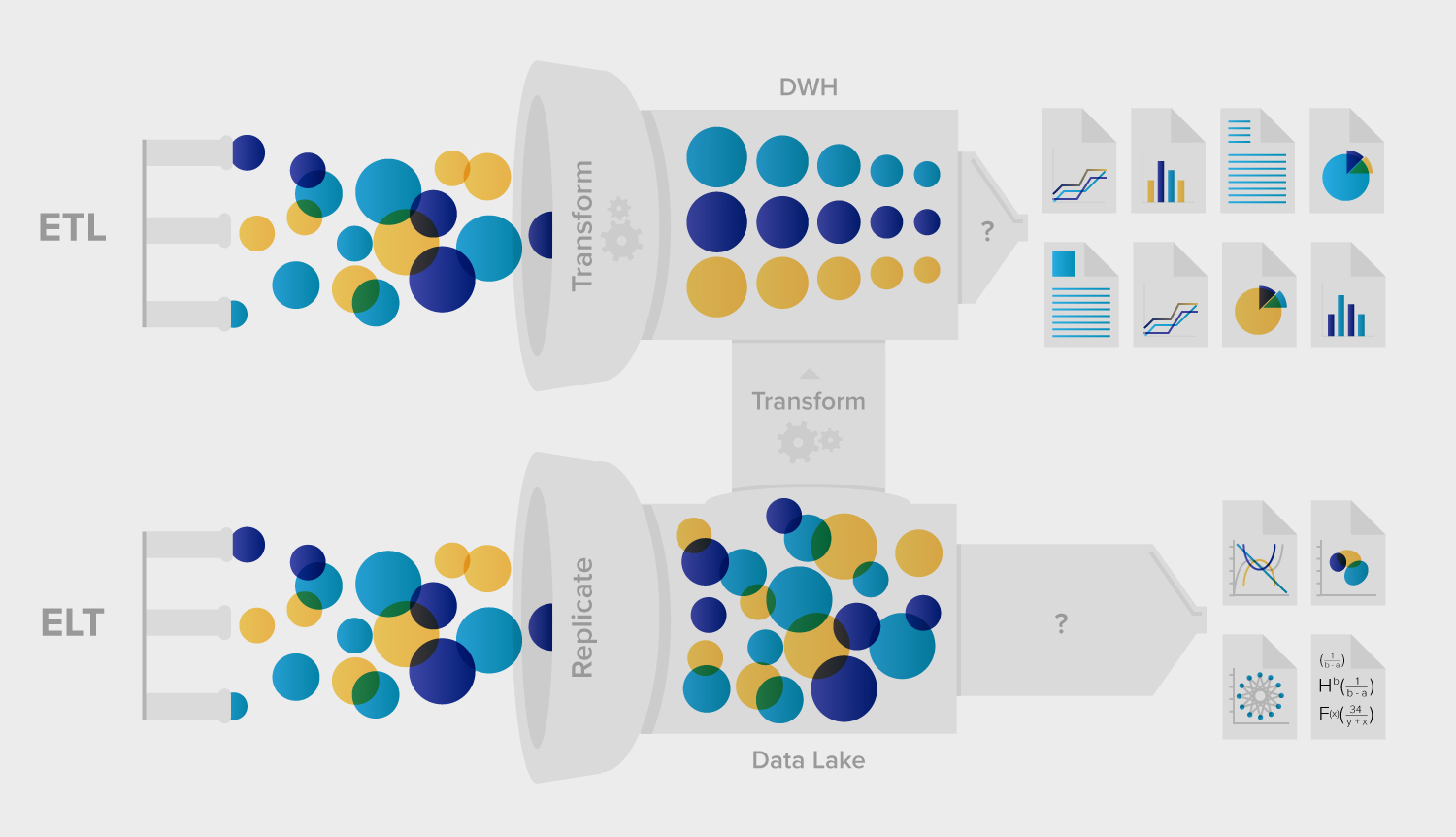

ETL vs ELT: Understanding the Differences and Making the Right Choice

Dive deep into the ETL vs ELT debate, uncovering the key differences, strengths, and optimal applications of each. Learn how these data integration methodologies shape the future of business intelligence and decision-making.

Nov 2023 · 6 min read
