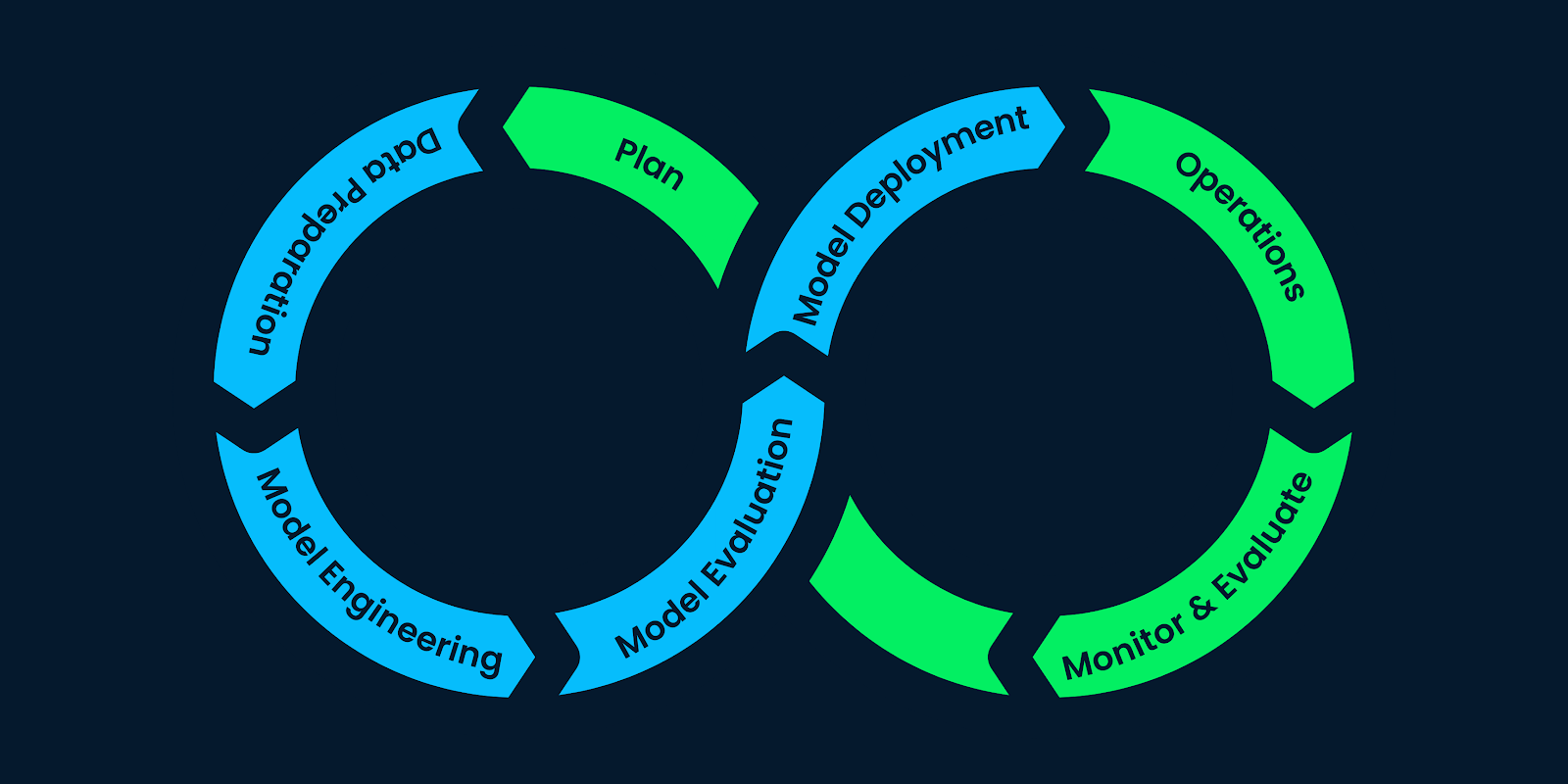

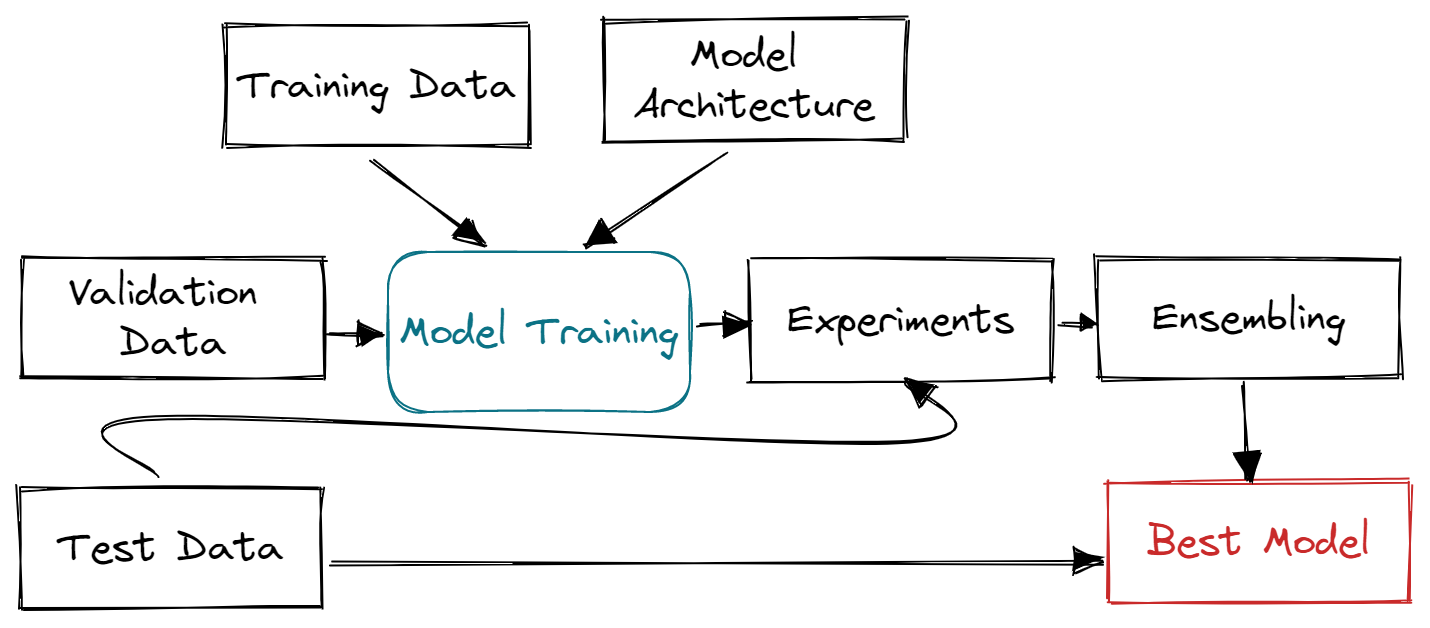

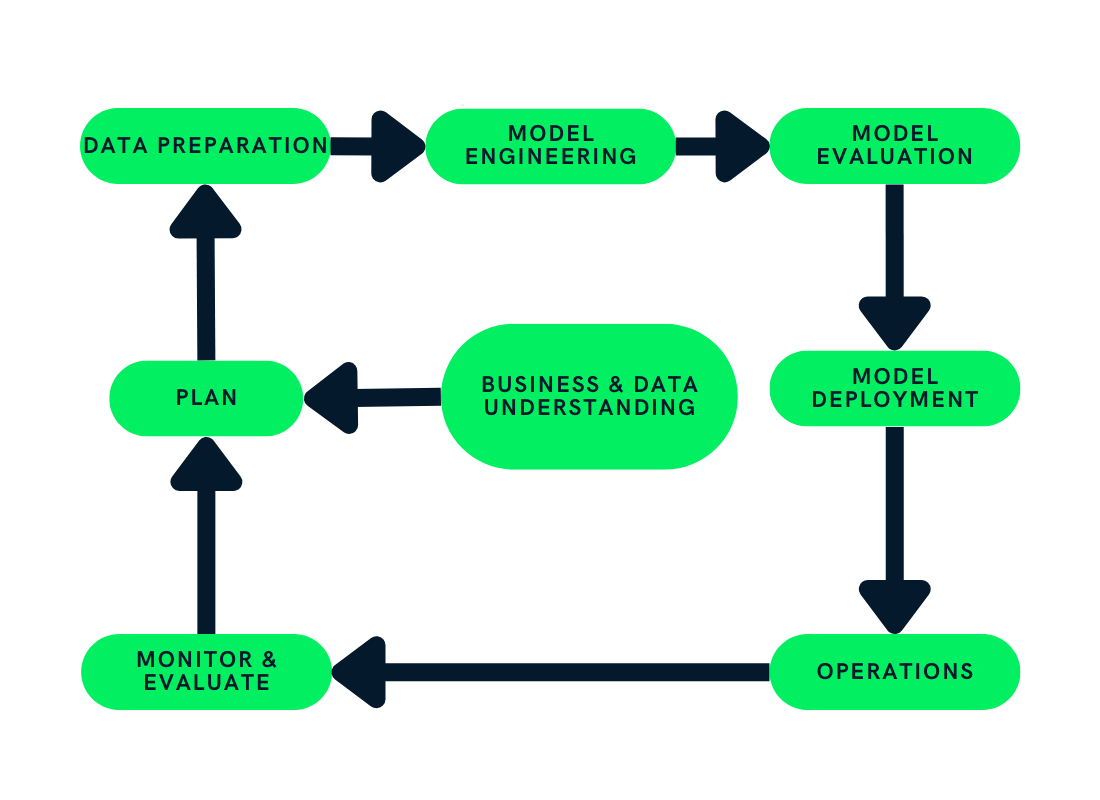

The Machine Learning Life Cycle Explained

Learn about the steps involved in a standard machine learning project as we explore the ins and outs of the machine learning lifecycle using CRISP-ML(Q).

Oct 2022 · 10 min read

Image by Author
