What is Transfer Learning in AI? An Introductory Guide with Examples

Learn about transfer learning, fine-tuning, and their foundational roles in machine learning and generative AI.

May 2024 · 7 min read

What is transfer learning?

What are the advantages of transfer learning?

What is fine-tuning?

What are the applications of transfer learning?

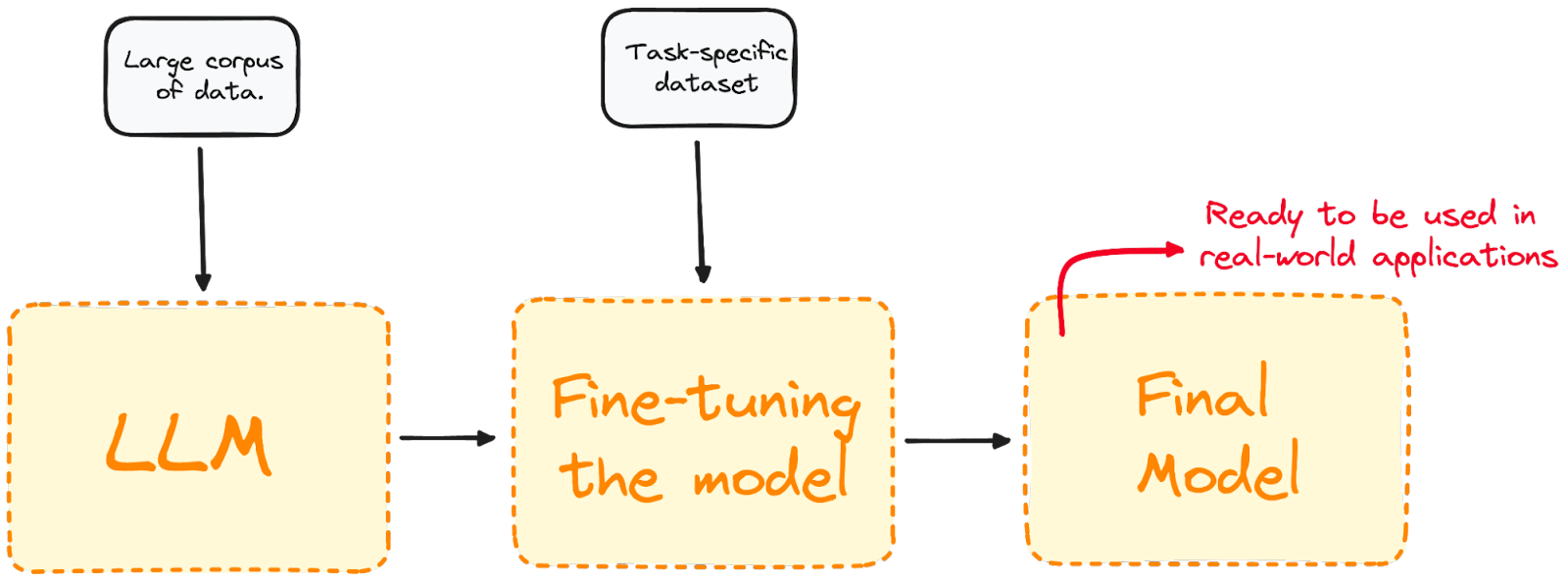

Visualizing the fine-tuning process.