
What is Mistral's Codestral? Key Features, Use Cases, and Limitations

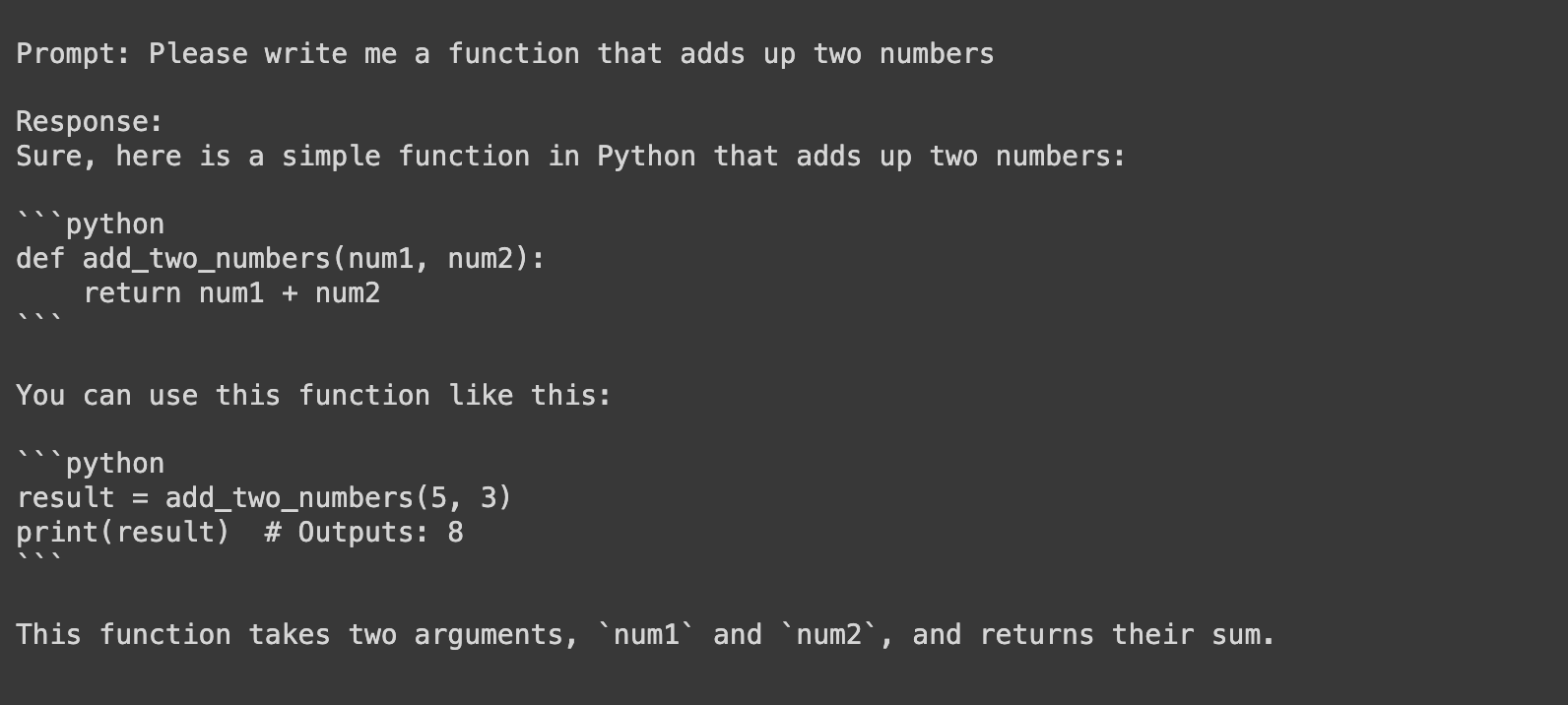

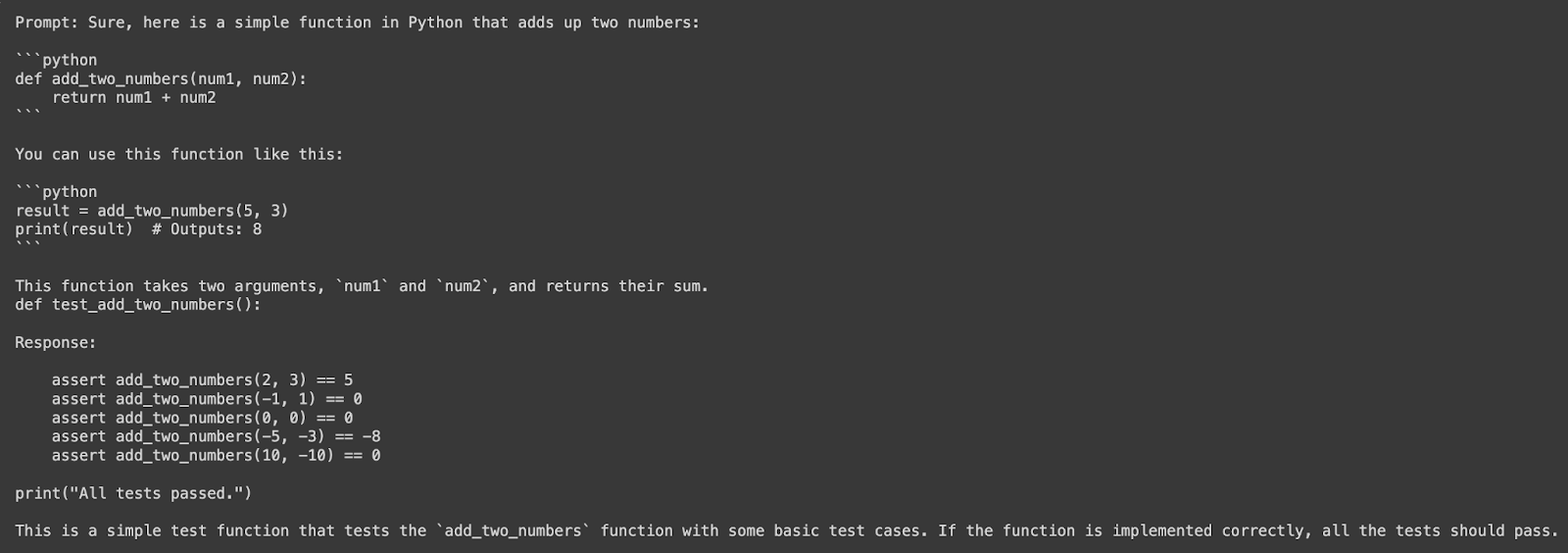

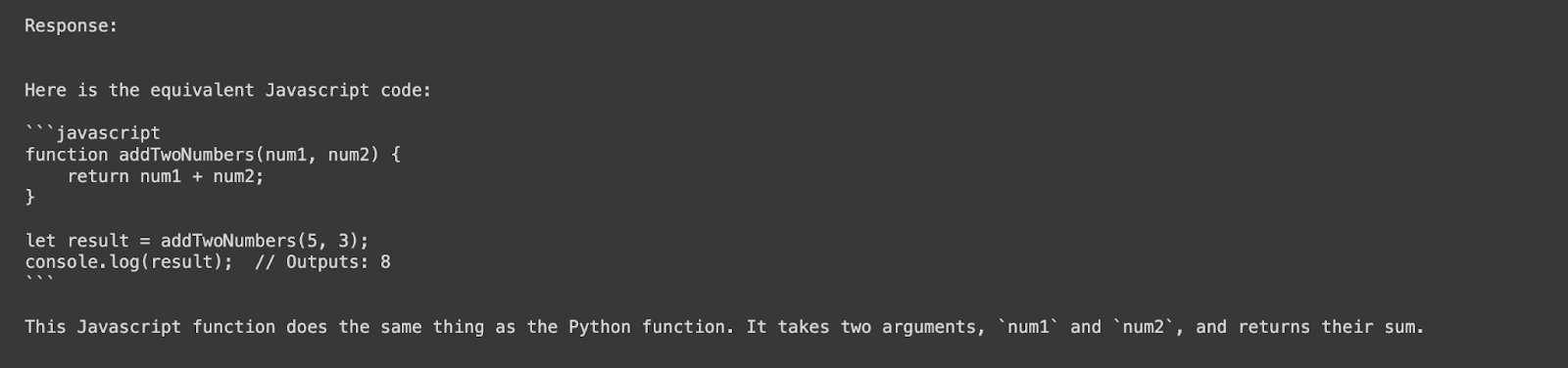

Codestral is Mistral AI's first open-weight generative AI model designed for code generation tasks, automating code completion, generation, and testing across multiple languages.

May 2024 · 8 min read

Is Codestral available for commercial use?

Can Codestral be fine-tuned for specific tasks or domains?

How much does Codestral cost?

