
Introduction

The extensive contribution of researchers in NLP, short for Natural Language Processing, during the last decades has been generating innovative results in different domains. Below are some of the examples of Natural Language Processing in practice:

- Apple’s Siri personal assistant, which can help users in their day-to-day activities such as setting alarms, texting, answering questions, etc.

- Medical field researchers use NLP to pave the way for faster drug discovery.

- Language translation is another great application because it allows us to overcome communication barriers.

This conceptual blog aims to cover Transformers, one of the most powerful model architectures in Natural Language Processing. After explaining their benefits compared to recurrent neural networks, we will build your understanding of Transformers. Then, we will walk you through some real-world scenarios using Hugging Face Transformers.

You can also learn more about Building NLP Applications with Hugging Face with our code-along.

Recurrent Network — the shining era before Transformers

Before diving into the core concept of transformers, let’s briefly understand what recurrent models are and their limitations.

For sequence-to-sequence tasks, recurrent networks are typically arranged in an encoder-decoder architecture, and we mainly use them when both the input and the output are sequences in some defined order. Some of the greatest applications of recurrent networks are machine translation and time series data modeling.

Challenges with recurrent networks

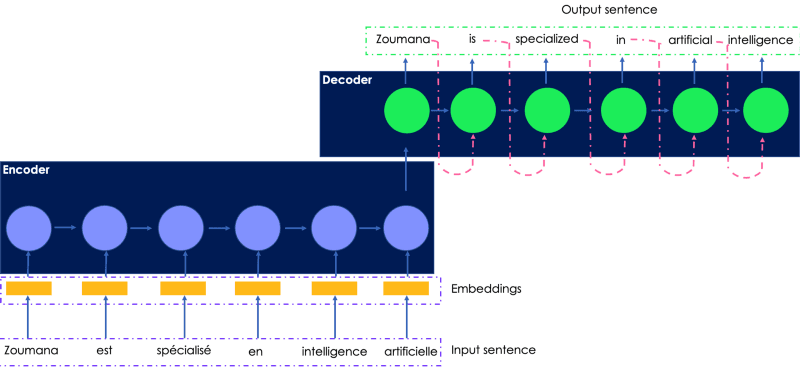

Let’s consider translating the following French sentence into English. The input transmitted to the encoder is the original French sentence, and the translated output is generated by the decoder.

A simple illustration of the recurrent network for language translation

- The input French sentence is passed to the encoder one word after the other, and the word embeddings are generated through the decoder in the same order, which makes these networks slow to train.

- The current word's hidden state depends on the previous words' hidden states, which makes it impossible to parallelize the computation across time steps, no matter how much computing power is available.

- Sequence-to-sequence neural networks are prone to exploding gradients when the input sequence is too long, which leads them to perform poorly.

- Long Short-Term Memory (LSTM) networks, another type of recurrent network, were introduced to mitigate the vanishing gradient problem, but they are even slower to train than plain recurrent models.
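To make the sequential dependency concrete, here is a minimal sketch of a recurrent update. It assumes a scalar hidden state and hand-picked weights (`w_x`, `w_h` and the function name `rnn_forward` are illustrative, not taken from any trained model or library). Each step needs the hidden state of the previous step, so the loop cannot be spread across time steps.

```python
import math


def rnn_forward(inputs, w_x=0.5, w_h=0.8, h0=0.0):
    """Run a toy scalar RNN and return the hidden state after each step."""
    hidden_states = []
    h = h0
    for x in inputs:
        # h_t depends on h_{t-1}: step t cannot start before step t-1 ends
        h = math.tanh(w_x * x + w_h * h)
        hidden_states.append(h)
    return hidden_states


states = rnn_forward([1.0, 0.0, -1.0])
print(states)
```

Changing the first input changes every later hidden state, which is exactly why the steps have to be computed in order.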

Wouldn't it be great to have a model that combines the benefits of recurrent networks and makes parallel computation possible?

Here is where transformers come in handy.

What are transformers in NLP?

Transformers are a simple yet powerful neural network architecture introduced by Google Brain in 2017 in the famous research paper “Attention Is All You Need.” The architecture is based on the attention mechanism instead of the sequential computation we observe in recurrent networks.

What are the main components of transformers?

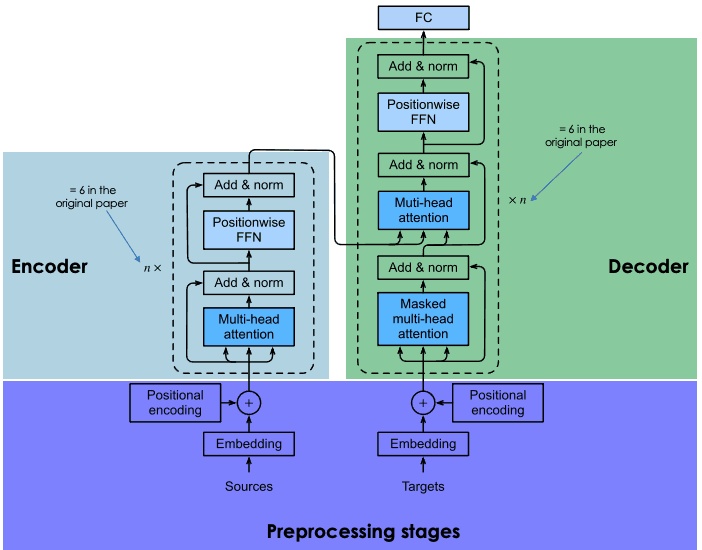

Similar to recurrent networks, transformers also have two main blocs: encoder and decoder, each one having a self-attention mechanism. The first version of transformers had RNN and LSTM encoder-decoder architecture, which have been changed later into self-attention and feed-forward networks.

The following section provides a general overview of the main components of each block of transformers.

General Architecture of transformers (adapted by Author)

Input sentence preprocessing stage

This section contains two main steps: (1) the generation of the embeddings of the input sentence, and (2) the computation of the positional vector of each word in the input sentence. All the computations are performed the same way for both the source sentence (before the encoder block) and the target sentence (before the decoder block).

Embedding of the input data

Before generating the embeddings of the input data, we start by performing the tokenization, then create the embedding of each individual word without paying attention to their relationship in the sentence.
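As a rough illustration of these two steps, the sketch below assumes a whitespace tokenizer and a tiny hand-written embedding table. The sentence, the vocabulary and all numeric values are made up for the example; real models use subword tokenizers and learned embeddings.

```python
def tokenize(sentence):
    """Split a sentence into lowercase word tokens (whitespace tokenizer)."""
    return sentence.lower().split()


# Toy vocabulary and embedding table (values are made up for illustration)
vocab = {"je": 0, "suis": 1, "étudiant": 2, "<unk>": 3}
embedding_table = [
    [0.1, 0.3, -0.2, 0.7],  # je
    [0.5, -0.1, 0.4, 0.0],  # suis
    [-0.3, 0.8, 0.2, 0.1],  # étudiant
    [0.0, 0.0, 0.0, 0.0],   # <unk>
]


def embed(sentence):
    """Map each token to its embedding, independently of its neighbours."""
    token_ids = [vocab.get(tok, vocab["<unk>"]) for tok in tokenize(sentence)]
    return [embedding_table[i] for i in token_ids]


vectors = embed("Je suis étudiant")
print(len(vectors), "tokens, dimension", len(vectors[0]))
```

Note that a word gets the same vector wherever it appears in the sentence, so word order is not represented at this stage.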

Positional encoding

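Embedding each word independently discards any notion of order that existed in the input sentence. The positional encoding restores this information by generating, for each word, a context vector that encodes its position in the sentence (the original paper uses periodic sine and cosine functions for this).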

Encoder block

At the end of the previous step, we get for each word two vectors: (1) the embedding and (2) its context vector. These vectors are added to create a single vector for each word, which is then transmitted to the encoder.
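The positional vector and its addition to the embedding can be sketched as follows. This assumes the sinusoidal encoding described in the “Attention Is All You Need” paper and an even embedding dimension; the function names and the sample embeddings are illustrative only.

```python
import math


def positional_encoding(position, d_model):
    """Sinusoidal positional vector for one position (d_model must be even)."""
    vector = []
    for i in range(0, d_model, 2):
        angle = position / (10000 ** (i / d_model))
        vector.append(math.sin(angle))  # even index
        vector.append(math.cos(angle))  # odd index
    return vector


def add_position(embeddings):
    """Add the positional vector to each word embedding, element-wise."""
    d_model = len(embeddings[0])
    return [
        [e + p for e, p in zip(emb, positional_encoding(pos, d_model))]
        for pos, emb in enumerate(embeddings)
    ]


# The same word twice: identical embeddings become distinguishable by position
same_word = [[0.1, 0.3, -0.2, 0.7], [0.1, 0.3, -0.2, 0.7]]
encoder_inputs = add_position(same_word)
print(encoder_inputs)
```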

Multi-head attention

As mentioned previously, we lost all notion of a relationship. The goal of the attention layer is to capture the contextual relationships existing between different words in the input sentence. This step ends up generating an attention vector for each word.
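A minimal sketch of the underlying computation, scaled dot-product attention, is shown below for a single head. It is a simplification under stated assumptions: queries, keys and values are taken equal to the inputs, whereas a real model derives them through learned projections and runs several heads in parallel before concatenating their outputs.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this word's query with every word's key
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Three words of dimension 2; Q, K and V are all set to the inputs here
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attention_vectors = self_attention(x, x, x)
print(attention_vectors)
```

Every word is compared with every other word in one pass, with no dependence on a previous time step, which is what makes the computation parallelizable.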

Position-wise feed-forward net (FFN)

At this stage, a feed-forward neural network is applied to every attention vector to transform them into a format that is expected by the next multi-head attention layer in the decoder.
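In the original paper this layer is two linear transformations with a ReLU in between, applied to each position separately. The sketch below assumes that formulation; the weights are small hand-picked values for illustration, not learned parameters.

```python
def linear(x, weights, bias):
    """Compute x @ weights + bias for one vector x (weights is a list of rows)."""
    return [
        sum(xi * weights[i][j] for i, xi in enumerate(x)) + bias[j]
        for j in range(len(bias))
    ]


def feed_forward(x, w1, b1, w2, b2):
    """Position-wise FFN: linear -> ReLU -> linear, applied to one position."""
    hidden = [max(0.0, h) for h in linear(x, w1, b1)]
    return linear(hidden, w2, b2)


# Illustrative weights: model dimension 2, inner dimension 3
w1 = [[1.0, -1.0, 0.5], [0.0, 2.0, -0.5]]
b1 = [0.0, 0.0, 0.1]
w2 = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b2 = [0.0, 0.0]

attention_vectors = [[1.0, 0.0], [0.0, 1.0]]
# The same weights are applied to every position independently
outputs = [feed_forward(v, w1, b1, w2, b2) for v in attention_vectors]
print(outputs)
```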

Decoder block

The decoder block consists of three main layers: masked multi-head attention, multi-head attention, and a position-wise feed-forward network. We already understand the last two layers, which work the same way as in the encoder.

The decoder comes into the equation during the training of the network, and it receives two main inputs: (1) the attention vectors of the input sentence we want to translate and (2) the translated target sentences in English.

So, what is the masked multi-head attention layer responsible for?

During the generation of the next English word, the network is allowed to use all the words from the French sentence. However, when dealing with a given word in the target sequence (English translation), the network must only access the previous words, because making the next ones available would let the network “cheat” instead of learning properly. This is where the masked multi-head attention layer comes in: it masks those next words so that their attention weights become zero and they can’t be used by the attention network.
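A common way to implement this masking is to set the scores of future positions to negative infinity before the softmax, which gives them a weight of exactly zero. The sketch below assumes that approach; the score values and function names are illustrative.

```python
import math


def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def masked_softmax(scores, allowed):
    """Softmax where disallowed positions receive a weight of exactly zero."""
    masked = [s if ok else -math.inf for s, ok in zip(scores, allowed)]
    m = max(masked)
    exps = [math.exp(s - m) for s in masked]
    total = sum(exps)
    return [e / total for e in exps]


# Raw attention scores for a 3-word target sentence (illustrative values)
scores = [[0.2, 1.5, 0.3], [0.4, 0.1, 2.0], [1.0, 0.5, 0.7]]
mask = causal_mask(3)
weights = [masked_softmax(row, allowed) for row, allowed in zip(scores, mask)]
for row in weights:
    print([round(w, 3) for w in row])
```

The first word can only attend to itself, the second to the first two words, and so on.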

The result of the masked multi-head attention layer passes through the rest of the layers in order to predict the next word by generating a probability score.

This architecture was successful because of the following reasons:

- The total computational complexity at each layer is lower compared to RNNs when the sequence length is smaller than the representation dimension, which is often the case in practice.

- It removes the need for recurrence entirely and allows the whole sequence to be processed in parallel, unlike RNNs, which process the input one element at a time.

- RNNs struggle to learn long-range dependencies because of the length of the paths that forward and backward signals have to traverse in the network. Self-attention shortens these paths, which improves the learning process.

Transfer Learning in NLP

Training deep neural networks such as transformers from scratch is not an easy task, and might present the following challenges:

- Finding the required amount of data for the target problem can be time-consuming.

- Getting the necessary computation resources like GPUs to train such deep networks can be very costly.

Using transfer learning can have many benefits, such as speeding up the training of new models and decreasing project delivery time.

Imagine building a model from scratch to translate the Mandingo language into Wolof, which are both low-resource languages. Gathering data related to those languages is costly. Instead of going through all these challenges, one can re-use pre-trained deep neural networks as the starting point for training the new model.

Such models have been trained on a huge corpus of data, made available by someone else (an individual, an organization, etc.), and evaluated to work very well on language translation tasks such as French to English.

If you are new to NLP, this Introduction to Natural Language Processing in Python course can provide you with the fundamental skills to perform and solve real-world problems.

But what do we mean by re-use of deep neural networks?

The re-use of the model involves choosing the pre-trained model that is similar to your use case, refining the input-output pair data of your target task, and retraining the head of the pre-trained model by using your data.
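The idea of keeping the pre-trained body fixed and retraining only the head can be sketched with a toy example. Everything here is a stand-in: `frozen_base` plays the role of a pre-trained model whose parameters are never updated, and the head is a small logistic regression trained on a handful of made-up task examples.

```python
import math


def frozen_base(text):
    """Stand-in for a pre-trained model body: its parameters are never updated.
    It returns two hand-crafted features (hypothetical)."""
    words = text.lower().split()
    return [
        sum(w in {"good", "great", "love"} for w in words),
        sum(w in {"bad", "awful", "hate"} for w in words),
    ]


def train_head(examples, epochs=200, lr=0.5):
    """Train only a small logistic-regression head on top of the frozen base."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for text, label in examples:
            features = frozen_base(text)
            z = sum(w * f for w, f in zip(weights, features)) + bias
            pred = 1.0 / (1.0 + math.exp(-z))
            error = pred - label  # gradient of the log-loss with respect to z
            weights = [w - lr * error * f for w, f in zip(weights, features)]
            bias -= lr * error
    return weights, bias


def predict(text, weights, bias):
    """Return 1 (positive) or 0 (negative) using the trained head."""
    z = sum(w * f for w, f in zip(weights, frozen_base(text))) + bias
    return 1 if z > 0 else 0


# Made-up input-output pairs for the target task
task_data = [
    ("great product love it", 1),
    ("awful and bad", 0),
    ("good value", 1),
    ("i hate it", 0),
]
weights, bias = train_head(task_data)
print(predict("love this great phone", weights, bias))
```

Only the head's three parameters are learned, which is why this kind of re-use needs far less data and compute than training the whole network.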

The introduction of Transformers has led to the development of state-of-the-art transfer learning models such as:

- BERT, short for Bidirectional Encoder Representations from Transformers, was developed by Google researchers in 2018. It helps to solve the most common language tasks such as named entity recognition, sentiment analysis, question-answering, text-summarization, etc. Read more about BERT in this NLP tutorial.

- GPT-3 (Generative Pre-trained Transformer 3), proposed by OpenAI researchers. It is a multi-layer transformer, mainly used to generate any type of text. GPT models are capable of producing human-like text responses to a given question. This amazing article can provide you with deeper information about what makes GPT-3 unique, the technology powering it, its risks, and its limitations.

An introduction to Hugging Face Transformers

Hugging Face is an AI community and Machine Learning platform created in 2016 by Julien Chaumond, Clément Delangue, and Thomas Wolf. It aims to democratize NLP by providing Data Scientists, AI practitioners, and Engineers immediate access to over 20,000 pre-trained models based on the state-of-the-art transformer architecture. These models can be applied to:

- Text in over 100 languages for performing tasks such as classification, information extraction, question answering, generation, and translation.

- Speech, for tasks such as audio classification and speech recognition.

- Vision for object detection, image classification, segmentation.

- Tabular data for regression and classification problems.

- Reinforcement Learning transformers.

Hugging Face Transformers also provides almost 2000 data sets and layered APIs, allowing programmers to easily interact with those models using almost 31 libraries. Most of them are deep learning libraries, such as PyTorch, TensorFlow, JAX, ONNX, fastai, Stable-Baselines3, etc.

These courses are a great introduction to using PyTorch and TensorFlow, respectively, for building deep convolutional neural networks. Another component of Hugging Face Transformers is the Pipelines API.

What are Pipelines in Transformers?

- They provide an easy-to-use API through the pipeline() function for performing inference over a variety of tasks.

- They are used to encapsulate the overall process of every Natural Language Processing task, such as text cleaning, tokenization, embedding, etc.
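To picture what "encapsulating the overall process" means, here is a toy sketch of the pattern. It is not the library's implementation: `ToyPipeline` and its three stage functions are hypothetical stand-ins that only show how preprocessing, a model call and postprocessing can hide behind a single call.

```python
class ToyPipeline:
    """Chains preprocessing, a model call and postprocessing behind one call."""

    def __init__(self, preprocess, model, postprocess):
        self.preprocess = preprocess
        self.model = model
        self.postprocess = postprocess

    def __call__(self, text):
        return self.postprocess(self.model(self.preprocess(text)))


# Hypothetical stand-ins for each stage
def clean_and_tokenize(text):
    return text.lower().strip().split()


def count_model(tokens):
    return {"n_tokens": len(tokens)}


def to_label(output):
    return "long" if output["n_tokens"] > 5 else "short"


toy = ToyPipeline(clean_and_tokenize, count_model, to_label)
print(toy("  Transformers are powerful  "))
```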

The pipeline() function has the following structure:

from transformers import pipeline

# To use a default model & tokenizer for a given task (e.g. question-answering)
pipeline("<task-name>")

# To use an existing model
pipeline("<task-name>", model="<model_name>")

# To use a custom model/tokenizer
pipeline("<task-name>", model="<model_name>", tokenizer="<tokenizer_name>")

Hugging Face Tutorial

Now that you have a better understanding of Transformers, and the Hugging Face platform, we will walk you through the following real-world scenarios: language translation, sequence classification with zero-shot classification, sentiment analysis, and question answering.

Information about the data sets

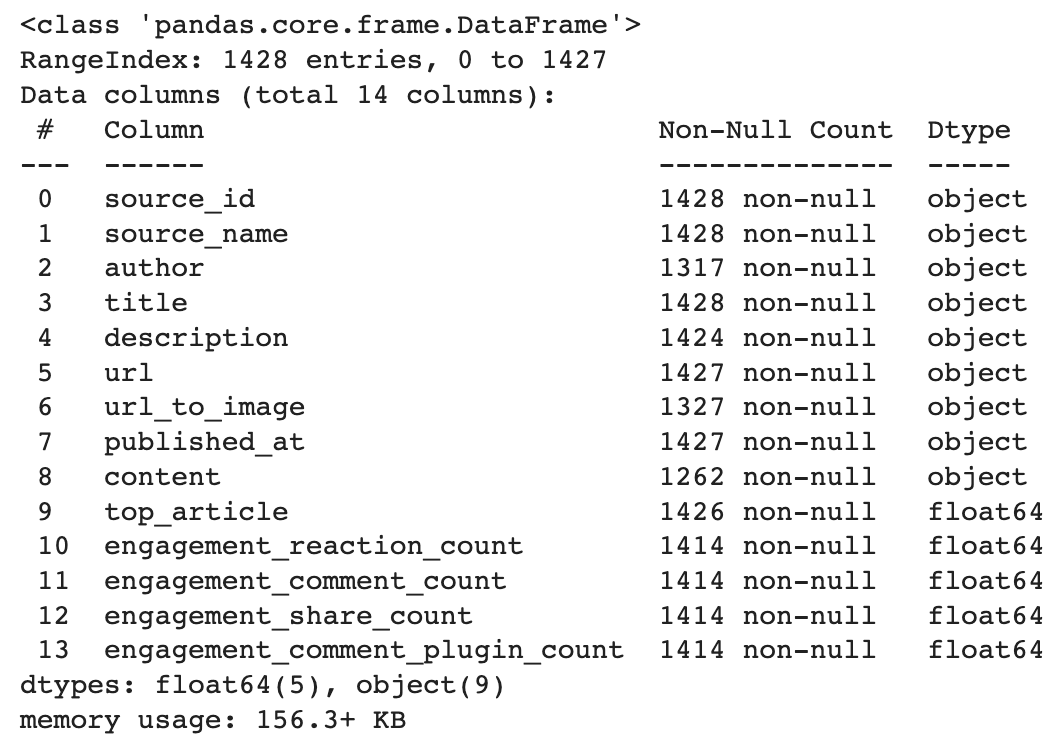

This dataset, available on DataCamp, is enriched by Facebook and was created to predict the popularity of an article before its publication. The analysis will be based on the description column. To illustrate our examples, we will use only three descriptions from the data.

Below is a brief description of the data. It has 14 columns and 1428 rows.

import pandas as pd

# Load the data from the path
data_path = "datacamp_workspace_export_2022-08-08 07_56_40.csv"

# Skip malformed lines instead of raising an error
news_data = pd.read_csv(data_path, on_bad_lines="skip")

# Show data information
news_data.info()

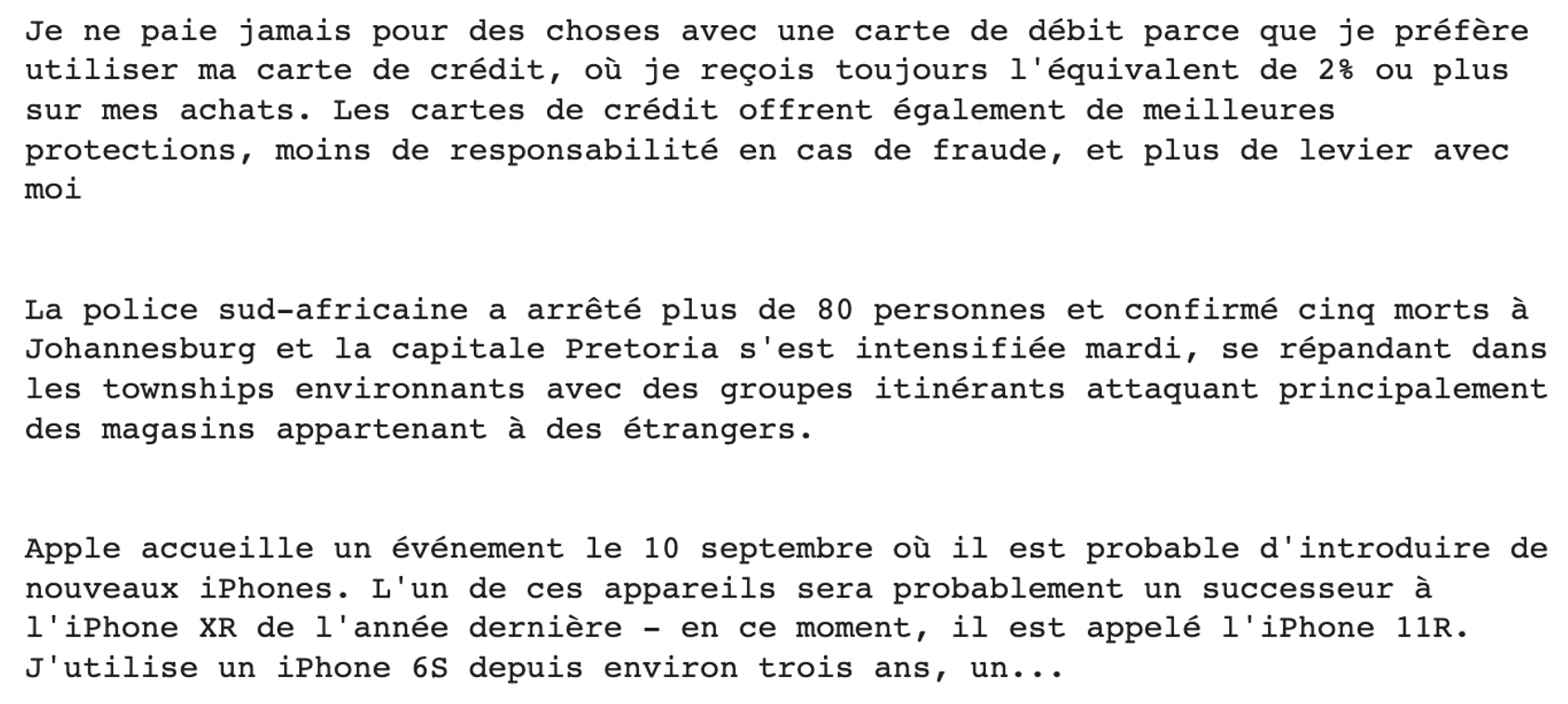

Language Translation

MarianMT is an efficient Machine Translation framework. It uses the MarianNMT engine under the hood, which is written purely in C++ and developed by Microsoft and many academic institutions such as the University of Edinburgh and Adam Mickiewicz University in Poznań. The same engine is currently behind the Microsoft Translator service.

The NLP group from the University of Helsinki open-sourced multiple translation models on Hugging Face Transformers, and they all follow the format Helsinki-NLP/opus-mt-{src}-{tgt}, where {src} and {tgt} correspond respectively to the source and target languages.

So, in our case, the source language is English (en) and the target language is French (fr).
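The naming convention can be captured in a one-line helper. The function name `opus_mt_model_name` is hypothetical, and the helper only builds the string: it does not check that a checkpoint has actually been published for a given language pair.

```python
def opus_mt_model_name(src, tgt):
    """Build a Helsinki-NLP checkpoint name from two language codes."""
    return f"Helsinki-NLP/opus-mt-{src}-{tgt}"


model_name = opus_mt_model_name("en", "fr")
print(model_name)
```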

MarianMT is one of those models previously trained using Marian on parallel data collected at Opus.

- MarianMT requires sentencepiece in addition to Transformers:

pip install transformers sentencepiece

from transformers import MarianTokenizer, MarianMTModel

- Select the pre-trained model, get the tokenizer and load the pre-trained model

# Get the name of the model
model_name = 'Helsinki-NLP/opus-mt-en-fr'

# Get the tokenizer
tokenizer = MarianTokenizer.from_pretrained(model_name)

# Instantiate the model
model = MarianMTModel.from_pretrained(model_name)

- Add the special token >>{tgt}<< in front of each source (English) text with the help of the following function.

def format_batch_texts(language_code, batch_texts):
    # Prefix each text with the target-language token, e.g. ">>fr<< Hello"
    formatted_batch = [">>{}<< {}".format(language_code, text) for text in batch_texts]
    return formatted_batch

- Implement the batch translation logic with the help of the following function, a batch being a list of texts to be translated.

def perform_translation(batch_texts, model, tokenizer, language="fr"):
    # Prepare the text data into appropriate format for the model
    formatted_batch_texts = format_batch_texts(language, batch_texts)

    # Generate translation using model
    translated = model.generate(**tokenizer(formatted_batch_texts, return_tensors="pt", padding=True))

    # Convert the generated tokens indices back into text
    translated_texts = [tokenizer.decode(t, skip_special_tokens=True) for t in translated]

    return translated_texts

- Run the translation on the previous samples of descriptions

# Check the model translation from the original language (English) to French
# english_texts is assumed to hold the three sample descriptions taken from news_data
translated_texts = perform_translation(english_texts, model, tokenizer)

# Create wrapper to properly format the text
from textwrap import TextWrapper

# Wrap text to 80 characters.
wrapper = TextWrapper(width=80)

for original, translation in zip(english_texts, translated_texts):
    print("Original text: \n", wrapper.fill(original))
    print("Translation : \n", wrapper.fill(translation))
    print("")

Zero-shot classification

Most of the time, training a Machine Learning model requires all the candidate labels/targets to be known beforehand, meaning that if your training labels are science, politics, or education, you will not be able to predict the healthcare label unless you retrain your model, taking into consideration that label and the corresponding input data.

Zero-shot classification removes this constraint: it makes it possible to predict the target of a text, in about 15 languages, without the model having seen any of the candidate labels during training. We can use such a model by simply loading it from the hub.

The goal here is to try to classify the category of each of the previous descriptions, whether it is tech, politics, business, or finance.

- Import the pipeline function

from transformers import pipeline

- Define candidate labels. These correspond to what we want to predict: tech, politics, business, or finance

candidate_labels = ["tech", "politics", "business", "finance"]

- Define the classifier with the multilingual option

my_classifier = pipeline("zero-shot-classification",
                         model='joeddav/xlm-roberta-large-xnli')

- Run the predictions on the first and the last descriptions

# For the first description
prediction = my_classifier(english_texts[0], candidate_labels, multi_label=True)
pd.DataFrame(prediction).drop(["sequence"], axis=1)

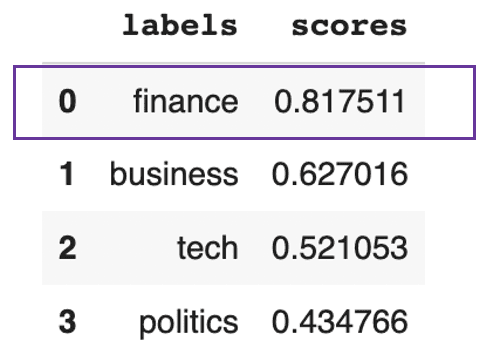

Text predicted to be mainly about finance

This result shows that the text is predicted to be mainly about finance, with a score of 81%.

For the last description, we get the following result:

# For the last description
prediction = my_classifier(english_texts[-1], candidate_labels, multi_label=True)
pd.DataFrame(prediction).drop(["sequence"], axis=1)

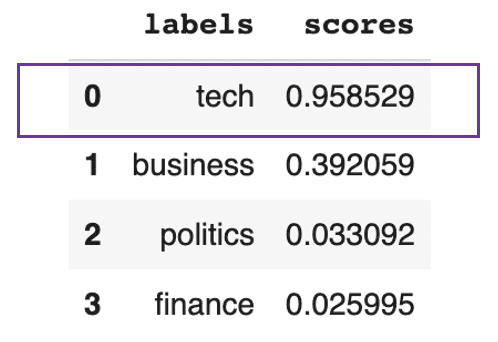

Text predicted to be mainly about tech

This result shows that the text is predicted to be mainly about tech, with a score of 95%.
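The classifier returns a dictionary with the keys sequence, labels and scores, where labels and scores are parallel lists. As a small sketch of how to use that output, the hypothetical helper `top_label` below picks the best label and rejects it when the score is below a threshold. The dictionary is a hand-written stand-in: apart from the 81% finance score reported earlier, the values are illustrative and not real model outputs.

```python
def top_label(prediction, threshold=0.5):
    """Return the best (label, score) pair, or None if below the threshold."""
    # labels and scores are parallel lists; pick the highest-scoring pair
    label, score = max(
        zip(prediction["labels"], prediction["scores"]), key=lambda pair: pair[1]
    )
    return (label, score) if score >= threshold else None


# Hand-written stand-in with the same keys as the classifier output
prediction = {
    "sequence": "<description text>",
    "labels": ["finance", "business", "tech", "politics"],
    "scores": [0.81, 0.40, 0.10, 0.05],
}
print(top_label(prediction))
```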

Sentiment analysis

Most models performing sentiment classification require proper training. The Hugging Face pipeline makes it easy to run sentiment analysis predictions with a specific model available on the hub, simply by specifying its name.

- Choose the task to perform and load the corresponding model. Here, we want to perform sentiment classification, using the distilled BERT base model.

model_checkpoint = "distilbert-base-uncased-finetuned-sst-2-english"

distil_bert_model = pipeline(task="sentiment-analysis", model=model_checkpoint)- The model is ready! Let's analyze the underlying sentiments behind the last two sentences.

# Run the predictions

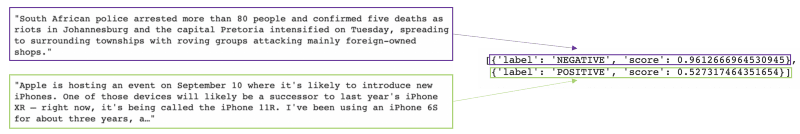

distil_bert_model(english_texts[1:])

The model predicted the first text to have a negative sentiment with 96% confidence, and the second one to have a positive sentiment with 52% confidence.
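A score of 52% on a two-class problem is barely better than a coin flip, so it can be worth separating confident predictions from uncertain ones. The sketch below assumes the pipeline's usual output shape, a list of dictionaries with label and score keys; the list is hand-written to mirror the two results above, and `flag_uncertain` and its 0.6 threshold are arbitrary illustrative choices.

```python
def flag_uncertain(results, min_score=0.6):
    """Split predictions into confident and uncertain ones by score."""
    confident = [r for r in results if r["score"] >= min_score]
    uncertain = [r for r in results if r["score"] < min_score]
    return confident, uncertain


# Hand-written stand-in mirroring the two results discussed above
results = [
    {"label": "NEGATIVE", "score": 0.96},
    {"label": "POSITIVE", "score": 0.52},
]
confident, uncertain = flag_uncertain(results)
print("Needs review:", uncertain)
```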

If you want to explore more on sentiment analysis tasks, this Python Sentiment Analysis course will help you get the skills to build your own sentiment analysis classifier using Python and understand the basics of NLP.

Question Answering

Imagine dealing with a report much longer than the one about Apple. And, all you are interested in is the date of the event being mentioned. Instead of reading the whole report to find the key information, we can use a question-answering model from Hugging Face that will provide the answer we are interested in.

This can be done by providing the model with proper context (Apple’s report) and the question we are interested in finding the answer to.

- Import the question-answering class and tokenizer from transformers

from transformers import AutoModelForQuestionAnswering, AutoTokenizer- Instantiate the model using its name and its tokenizer.

model_checkpoint = "deepset/roberta-base-squad2"

task = 'question-answering'

QA_model = pipeline(task, model=model_checkpoint, tokenizer=model_checkpoint)- Request the model by asking the question and specifying the context.

QA_input = {

'question': 'when is Apple hosting an event?',

'context': english_texts[-1]

}- Get the result of the model

model_response = QA_model(QA_input)

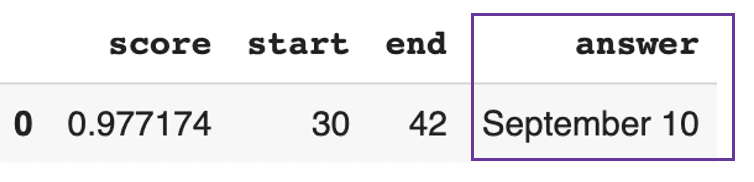

pd.DataFrame([model_response])

The model answered that Apple’s event is on September 10th with high confidence of 97%.
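The response of a question-answering pipeline contains the keys score, start, end and answer, where start and end are character offsets into the context. The sketch below uses those offsets to show the answer in its surrounding text. The context sentence and the response dictionary are hypothetical stand-ins written for this example, not the article's data or a real model output.

```python
def show_answer_in_context(context, response, window=20):
    """Return the answer in brackets with a few characters of surrounding text."""
    start, end = response["start"], response["end"]
    left = max(0, start - window)
    right = min(len(context), end + window)
    return context[left:start] + "[" + context[start:end] + "]" + context[end:right]


# Hypothetical context and response with the same keys as the pipeline output
context = "Apple is hosting an event on September 10th to unveil new products."
answer = "September 10th"
start = context.index(answer)
response = {
    "score": 0.97,
    "start": start,
    "end": start + len(answer),
    "answer": answer,
}

print(show_answer_in_context(context, response))
```

Checking the span against the context is a quick way to confirm where in a long report the answer was found.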

Conclusion

In this article, we’ve covered the evolution of natural language technology from recurrent networks to transformers and how Hugging Face has democratized the use of NLP through its platform.

If you are still hesitant about using transformers, we believe it is time to give them a try and add value to your business cases.

FAQ Transformers and Hugging Face

What is a Hugging Face transformer?

Hugging Face transformers is a platform that provides the community with APIs to access and use state-of-the-art pre-trained models available from the Hugging Face hub.

What is a pre-trained transformer?

A pre-trained transformer is a transformer model that has been trained and validated by someone else (an individual or an organization) and that we can use as a starting point for a similar task.

Is Hugging Face free to use?

Hugging Face provides both community and organization plans. The community plans include a free tier, which has limitations, and a Pro tier, which costs $9 per month. Organization plans, on the other hand, include Lab and Enterprise solutions, which are not free of charge.

Which framework is used on Hugging Face?

Hugging Face provides APIs for almost 31 libraries. Most of them are deep learning libraries, such as PyTorch, TensorFlow, JAX, ONNX, fastai, Stable-Baselines3, etc.

Which programming language is used on Hugging Face?

Some of the pre-trained models have been trained using multilingual denoising tasks in JavaScript, Python, Rust, and Bash/Shell. This Python Natural Language Processing course can help you get the relevant skills to successfully perform common text cleaning tasks.
