What is RAFT? Combining RAG and Fine-Tuning To Adapt LLMs To Specialized Domains

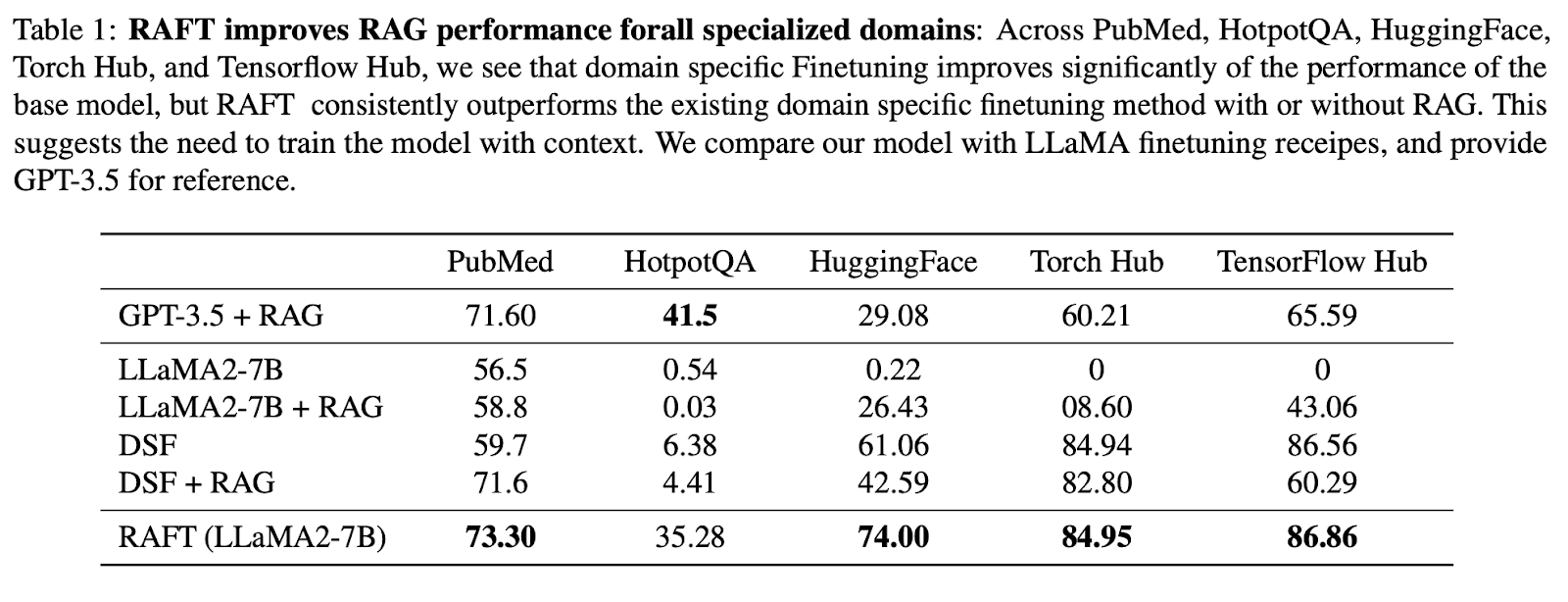

RAFT combines Retrieval-Augmented Generation (RAG) and fine-tuning to boost large language models' performance in specialized domains.

May 2024 · 11 min read
