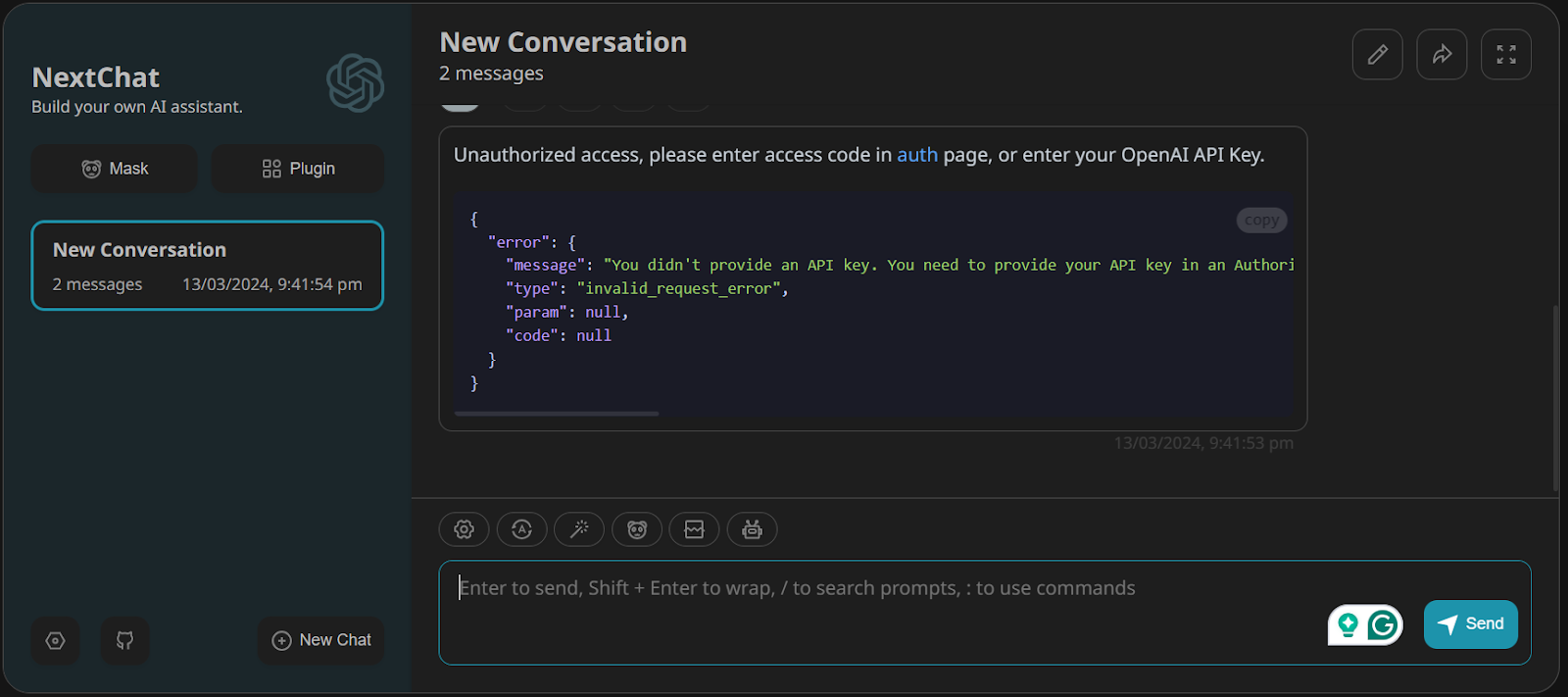

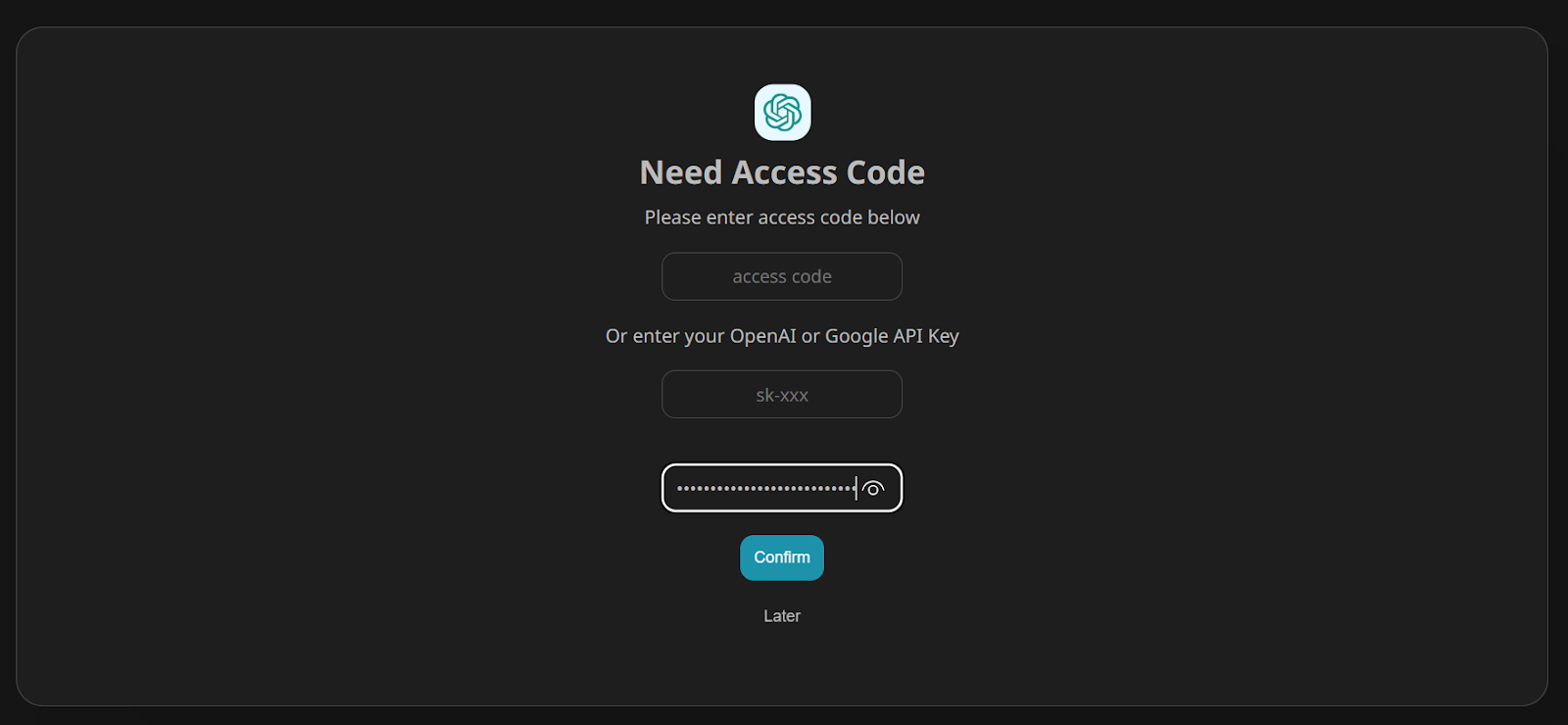

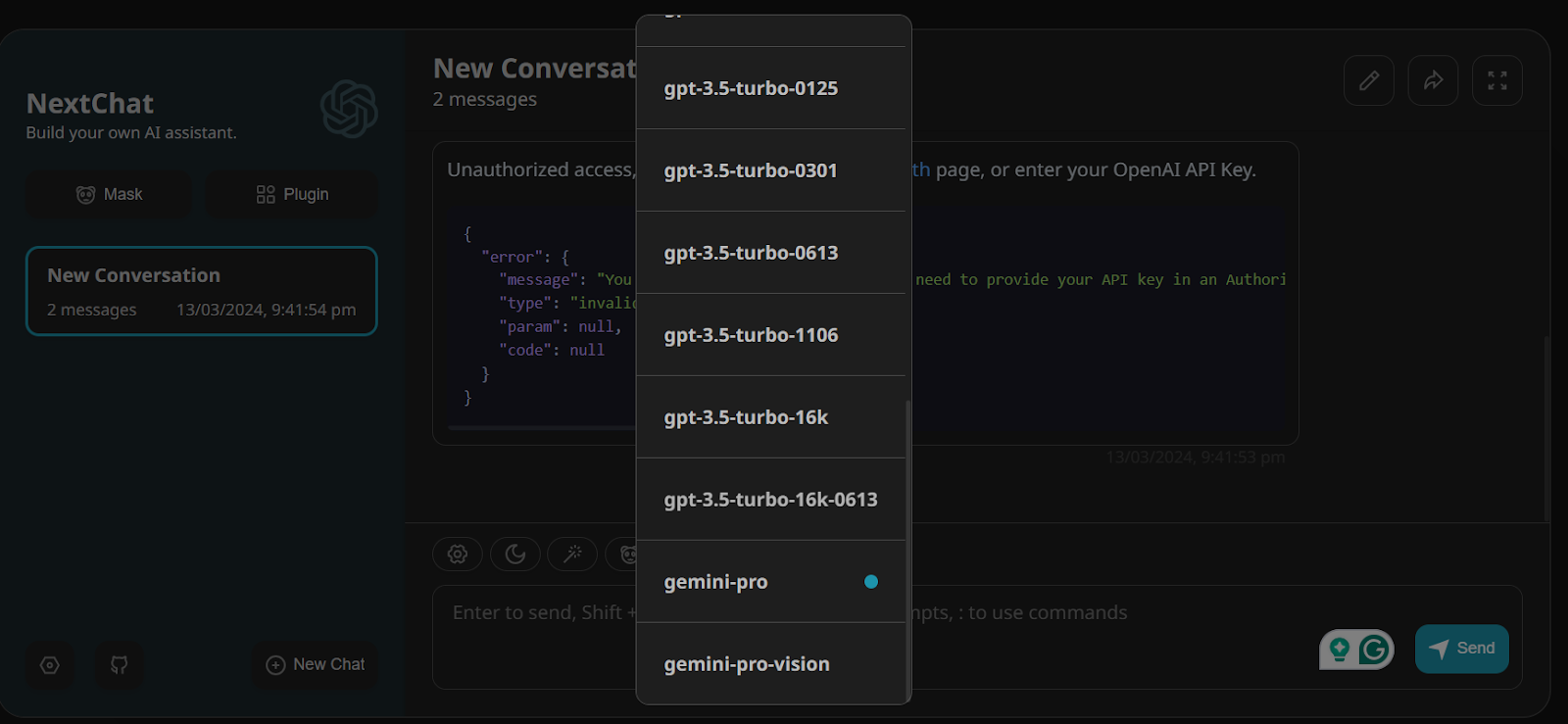

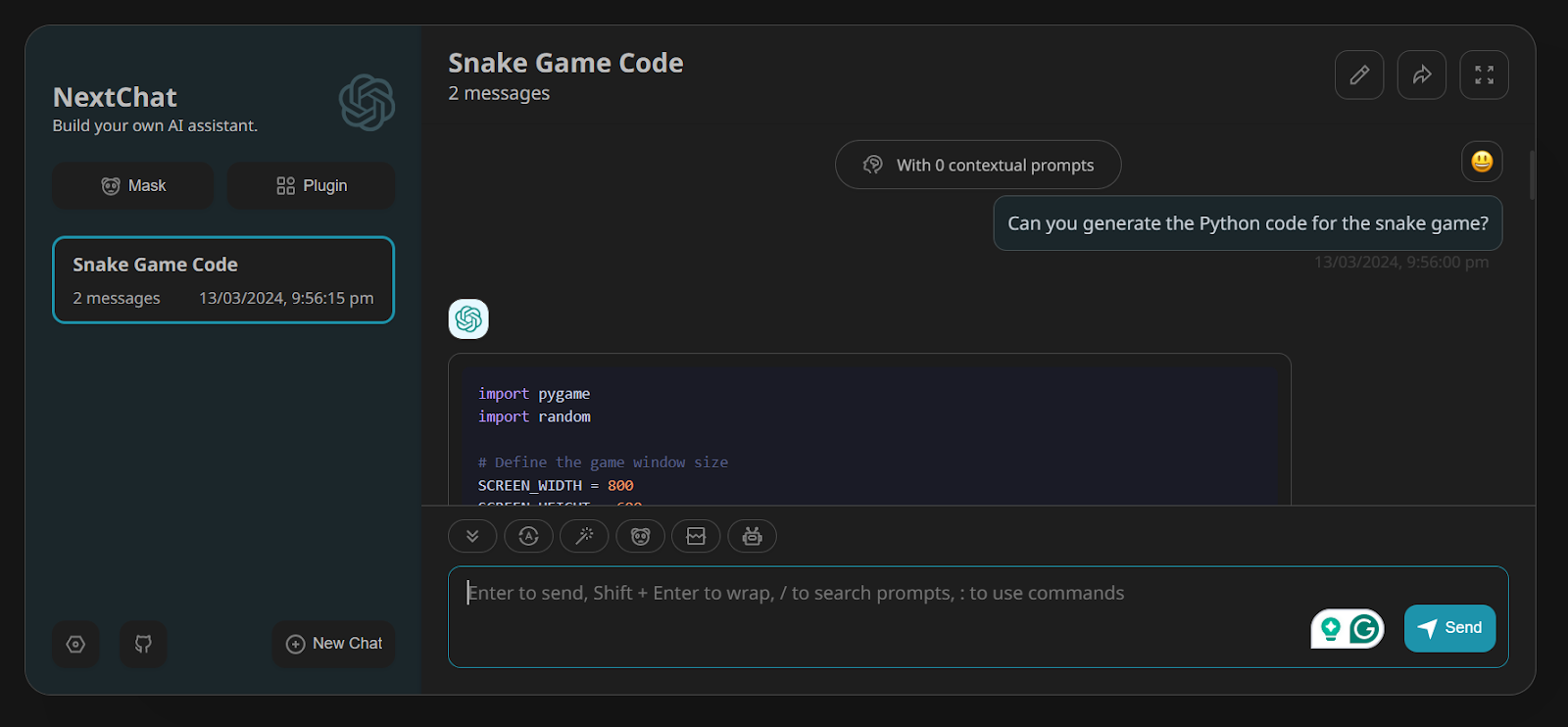

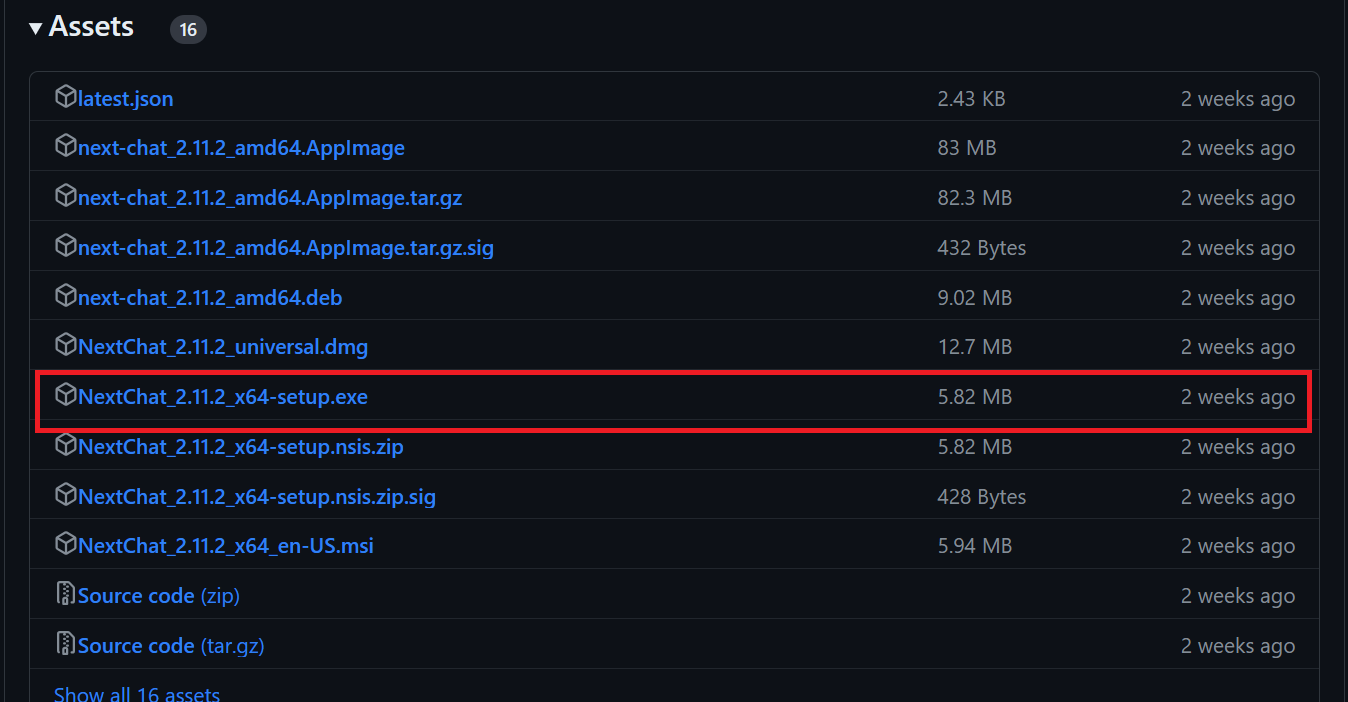

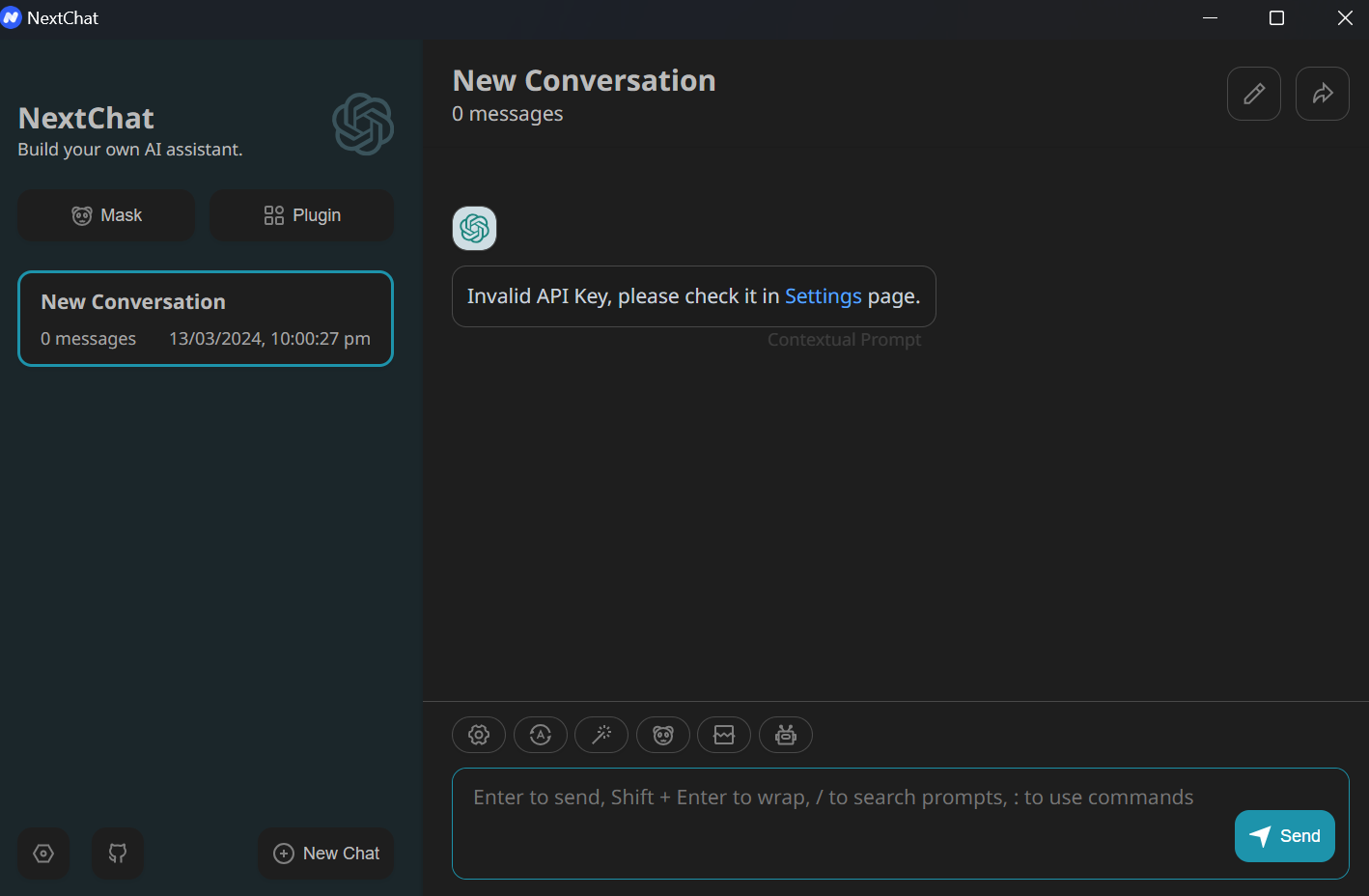

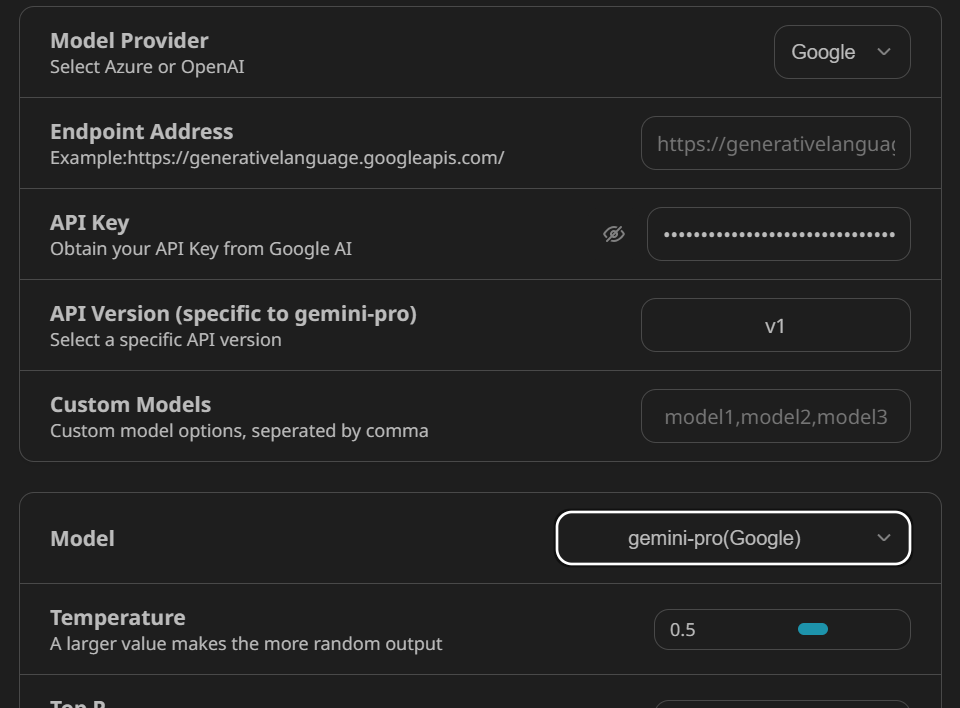

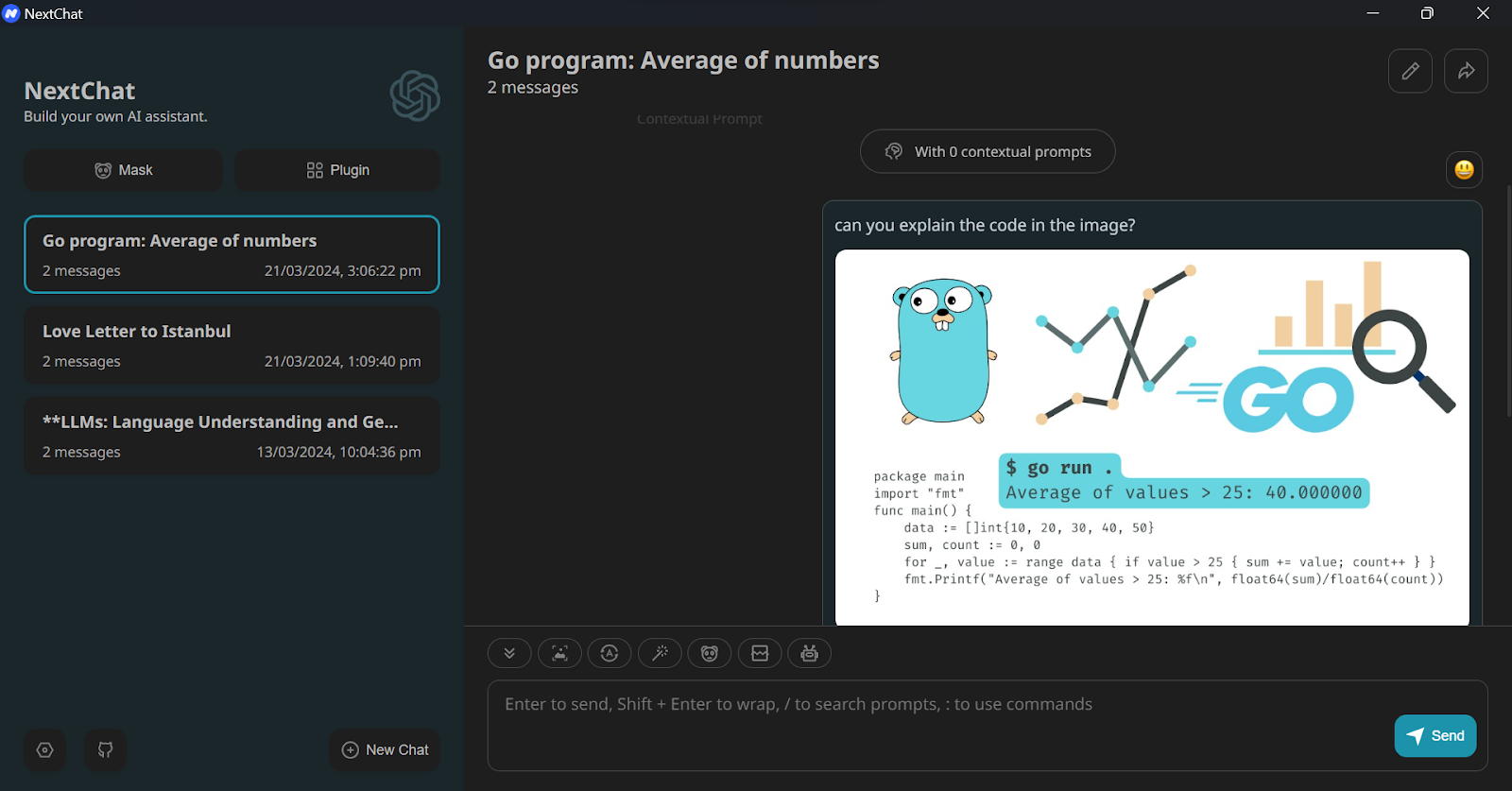

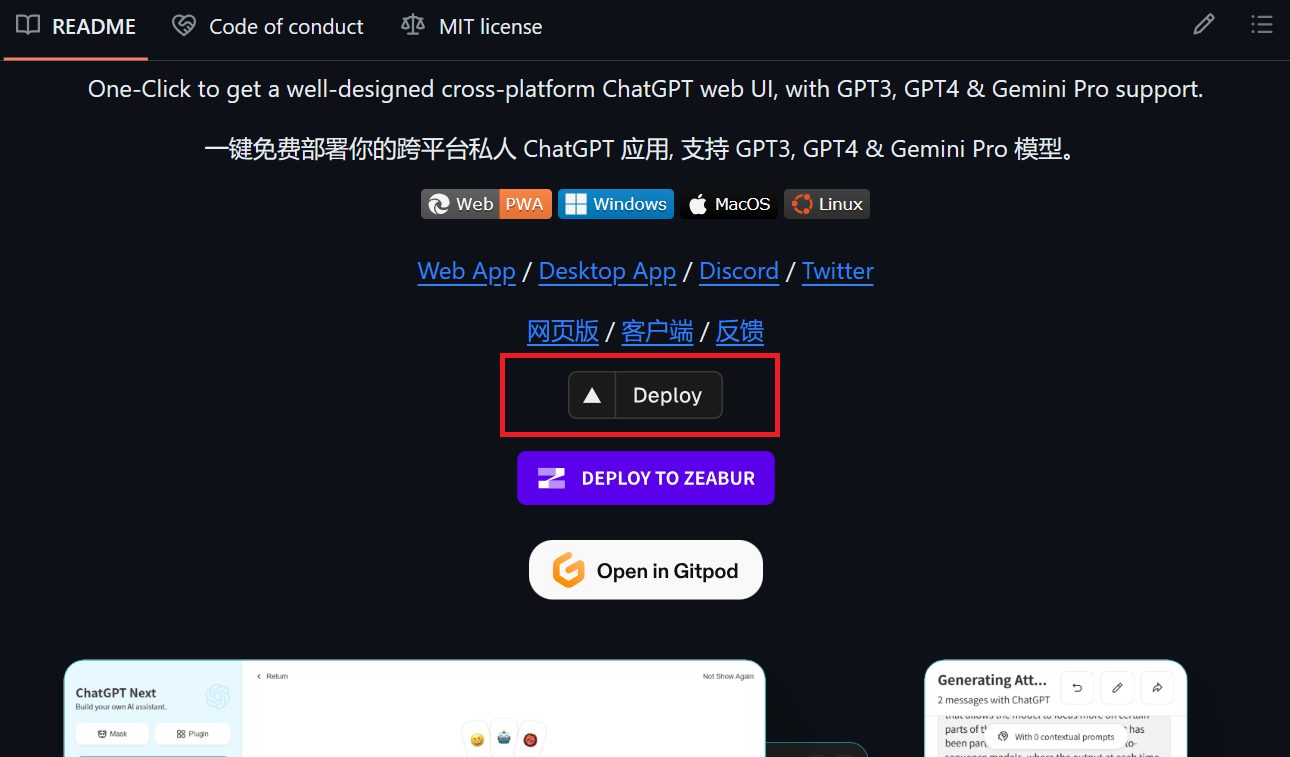

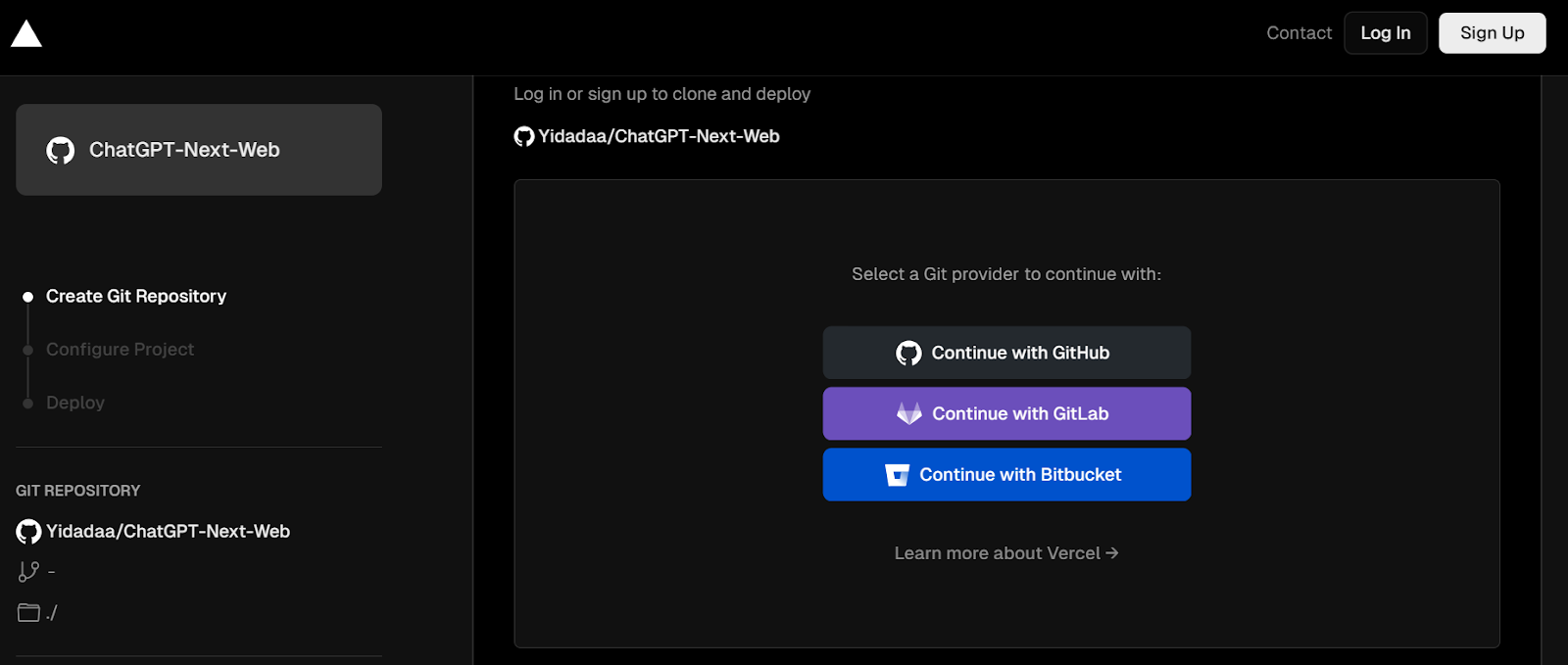

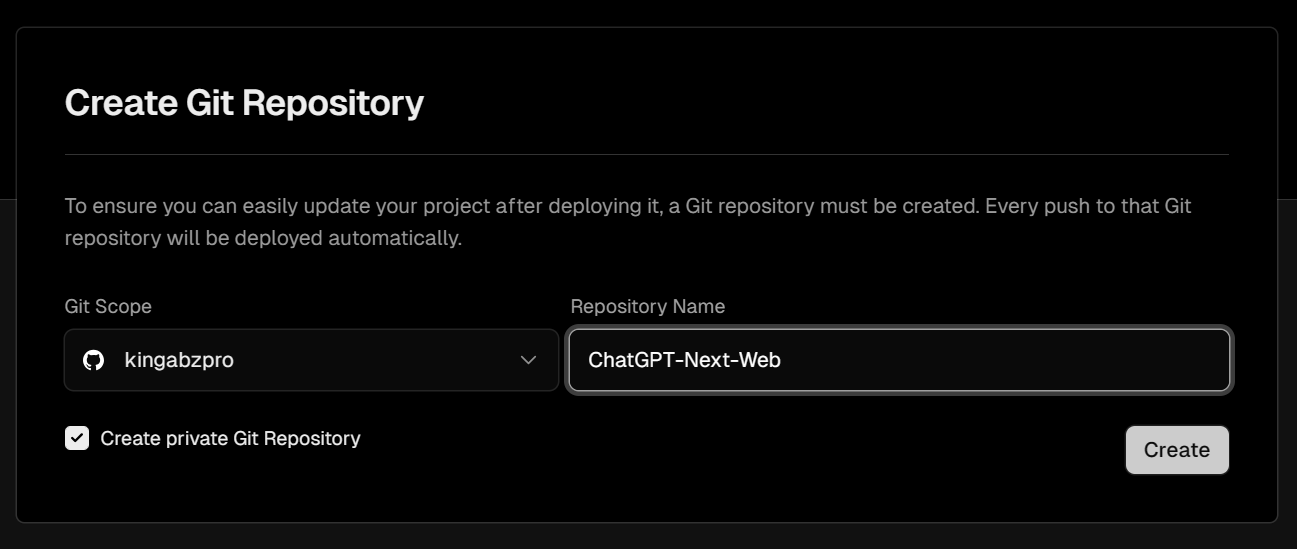

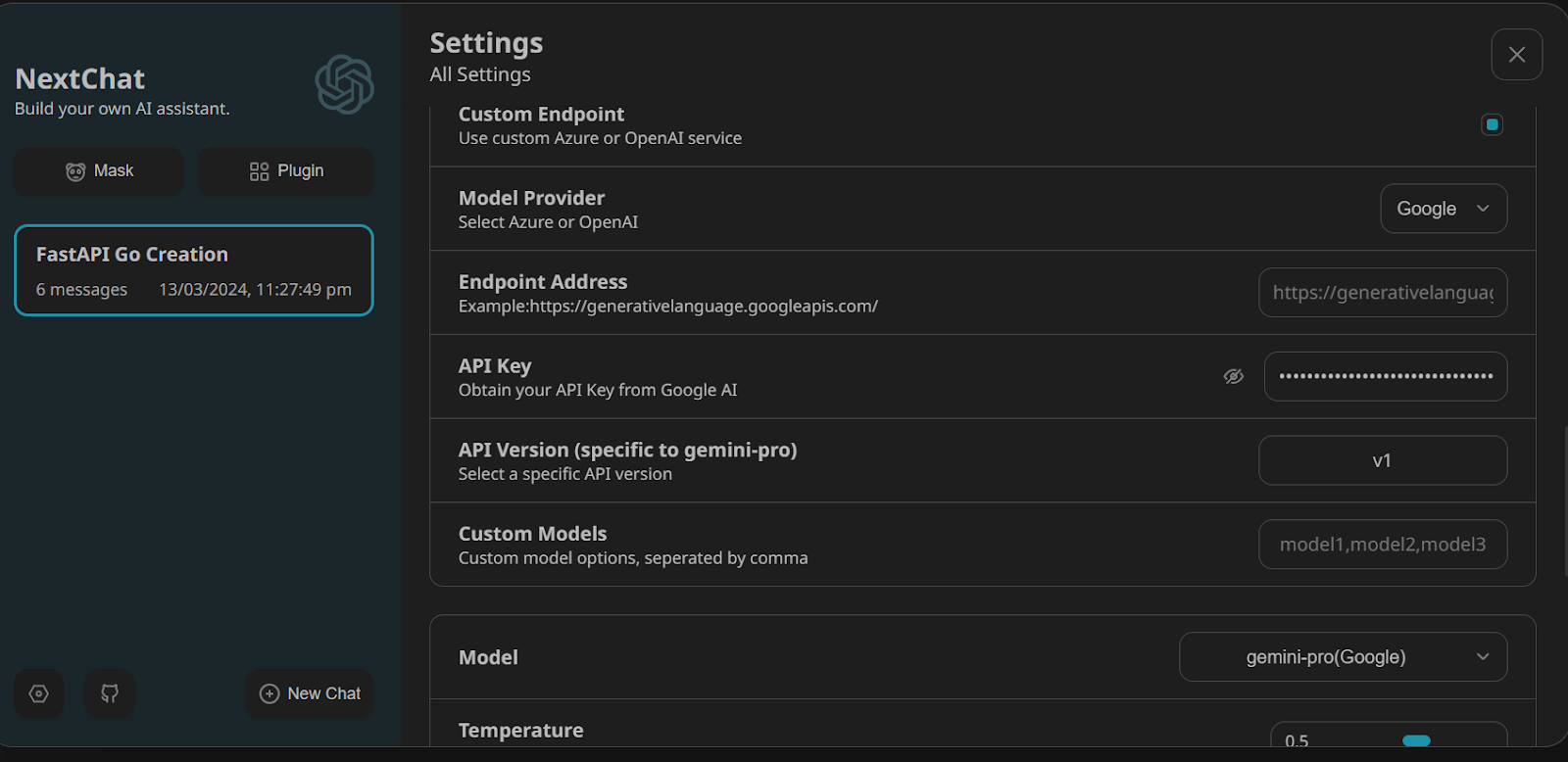

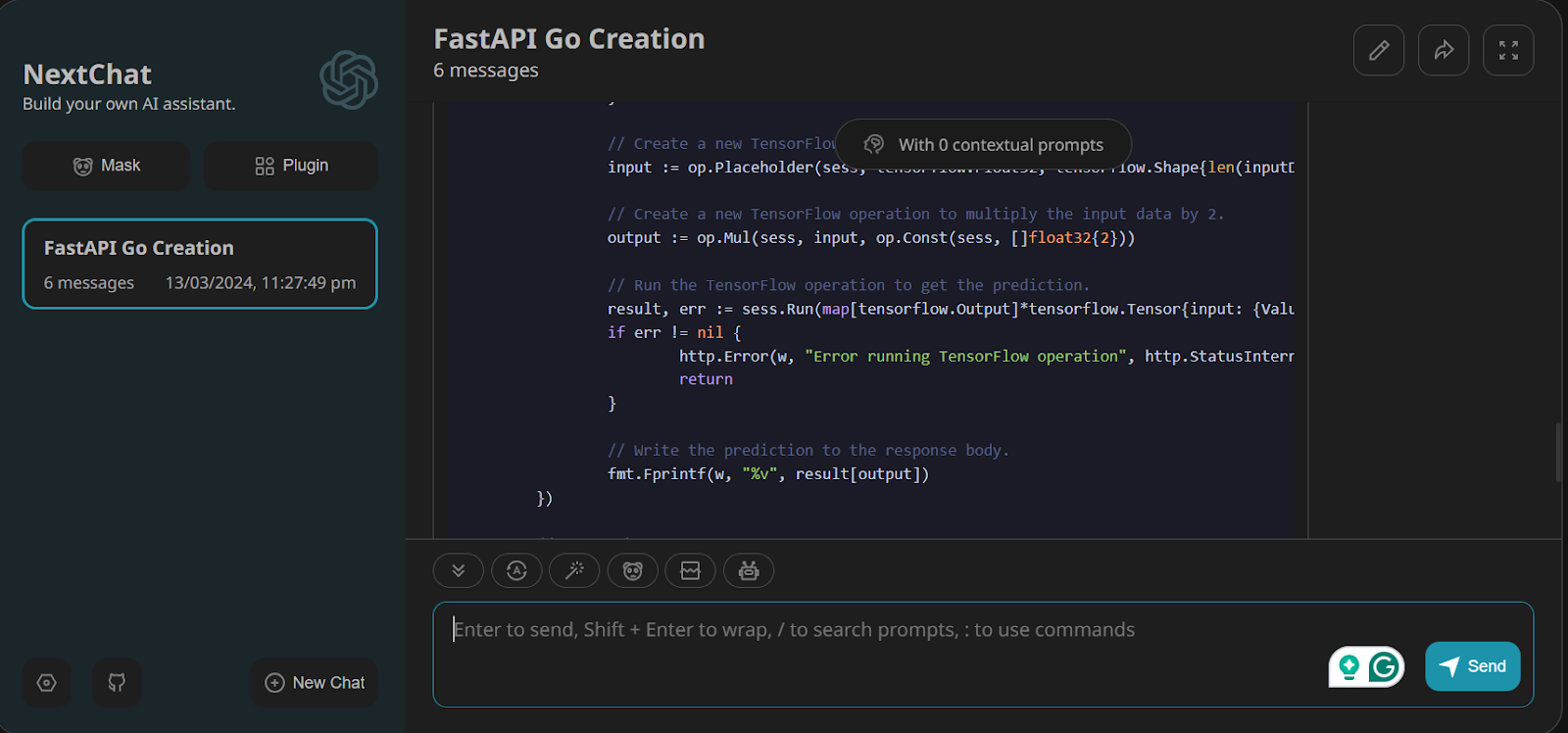

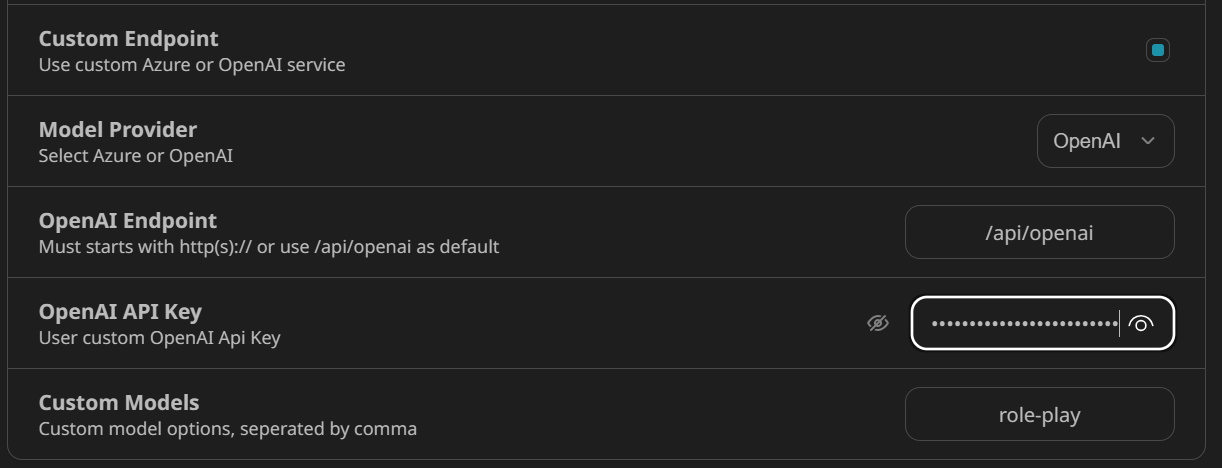

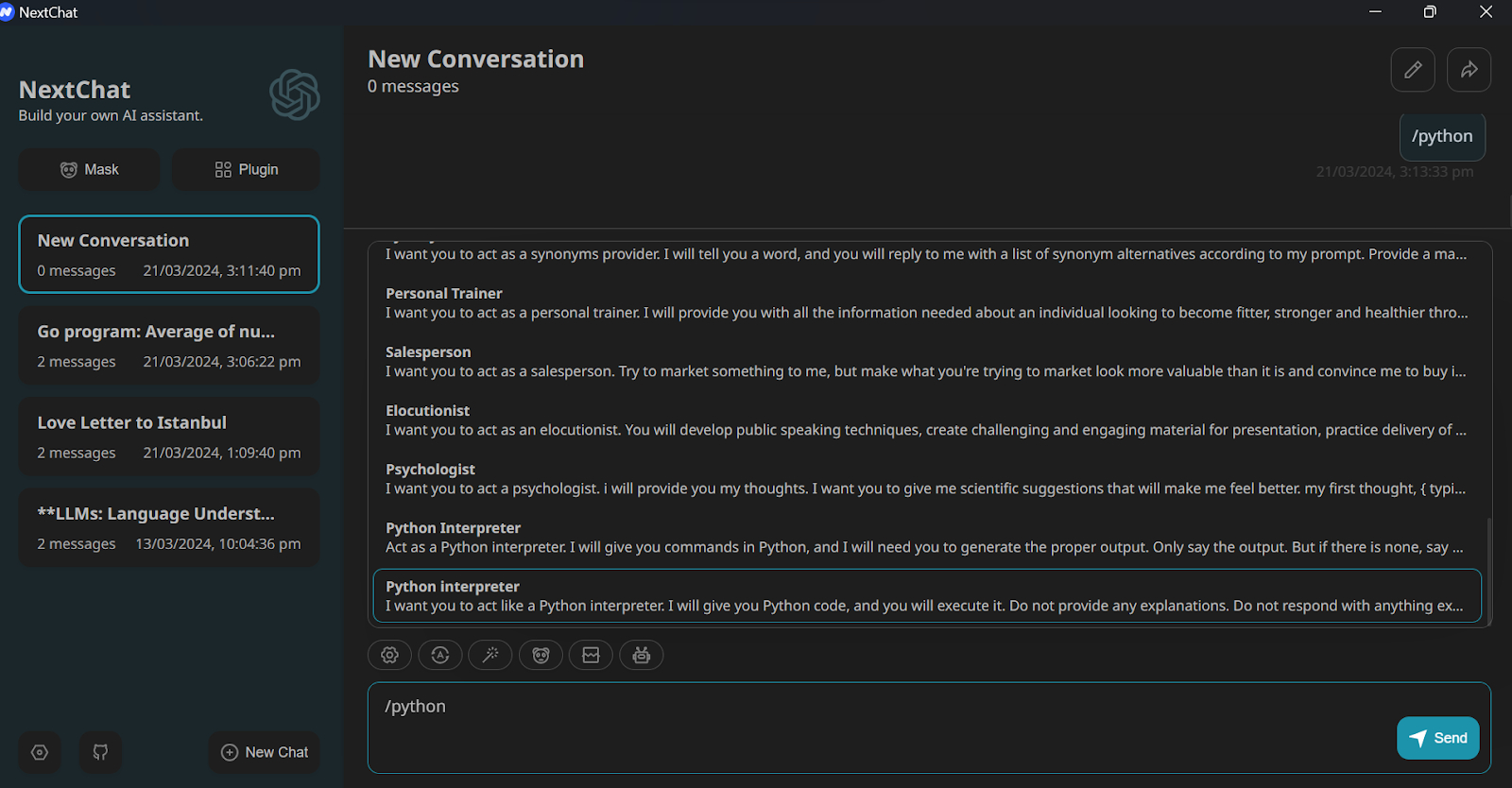

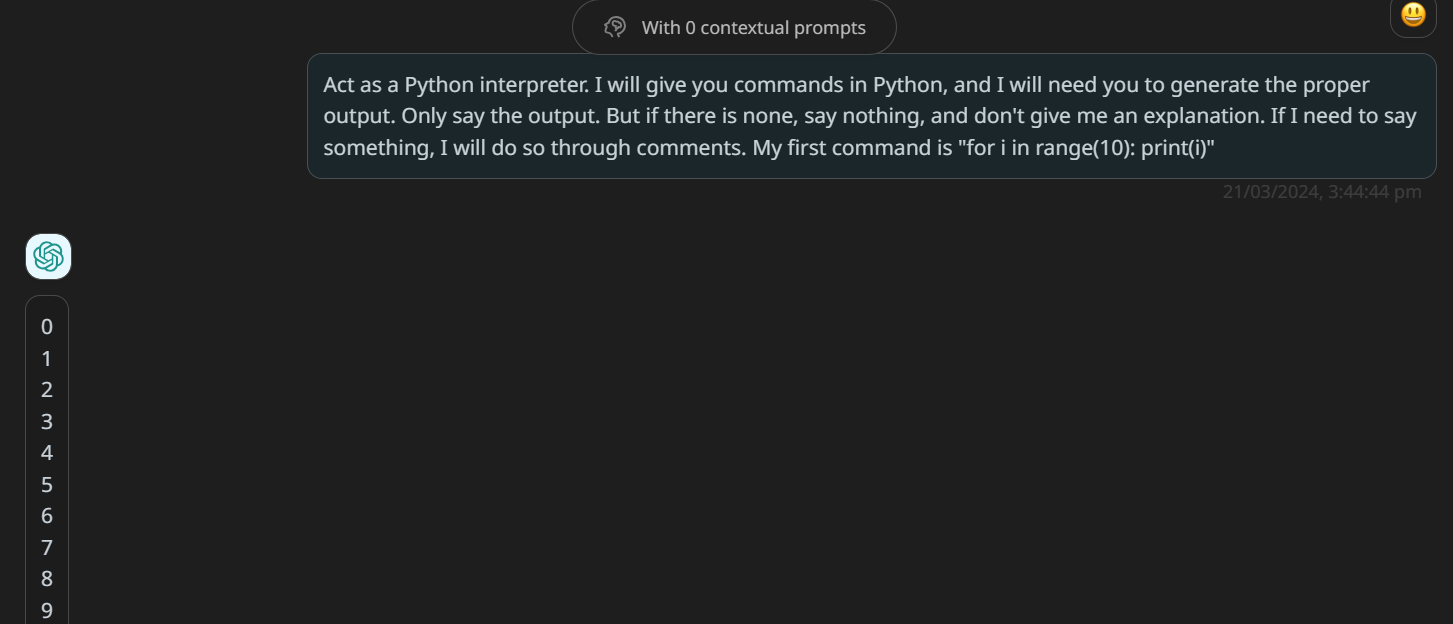

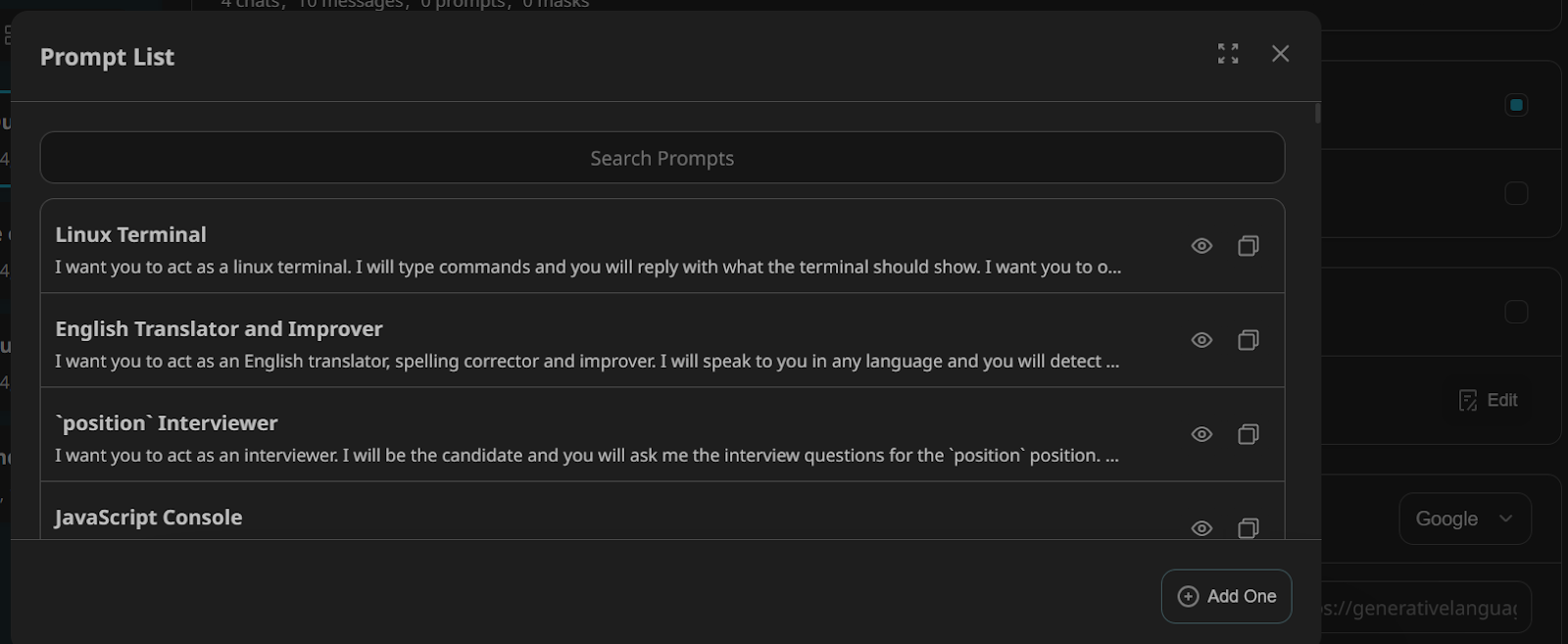

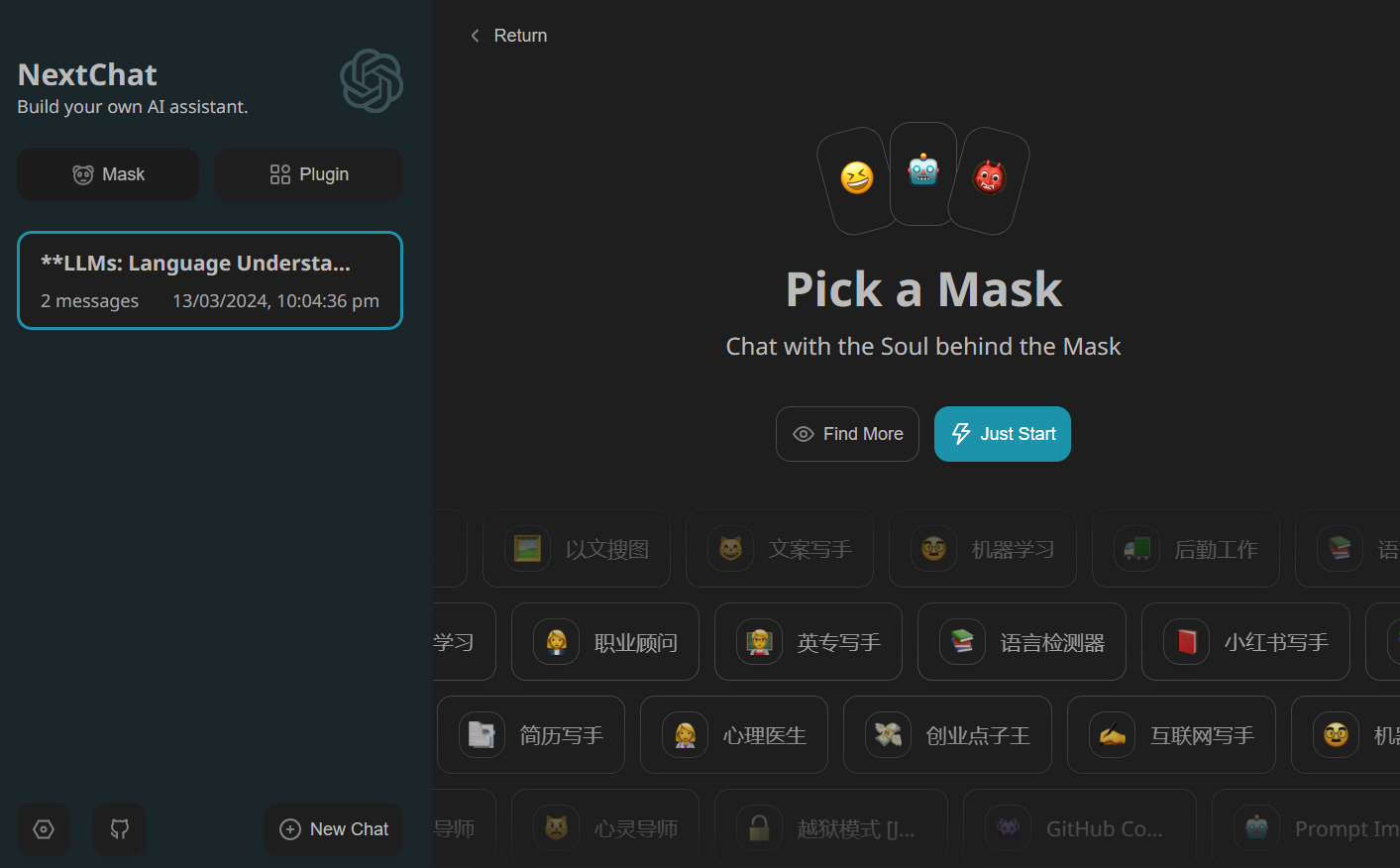

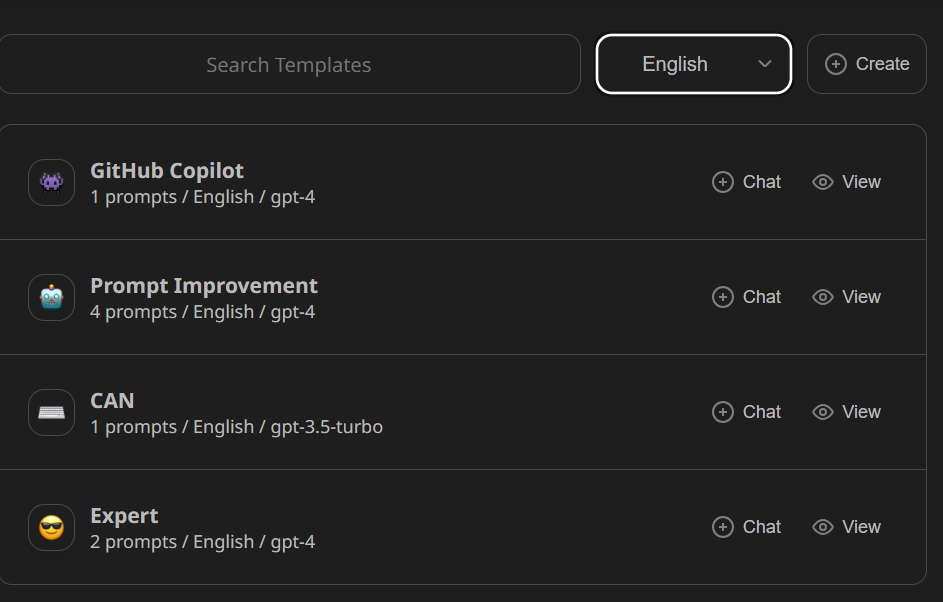

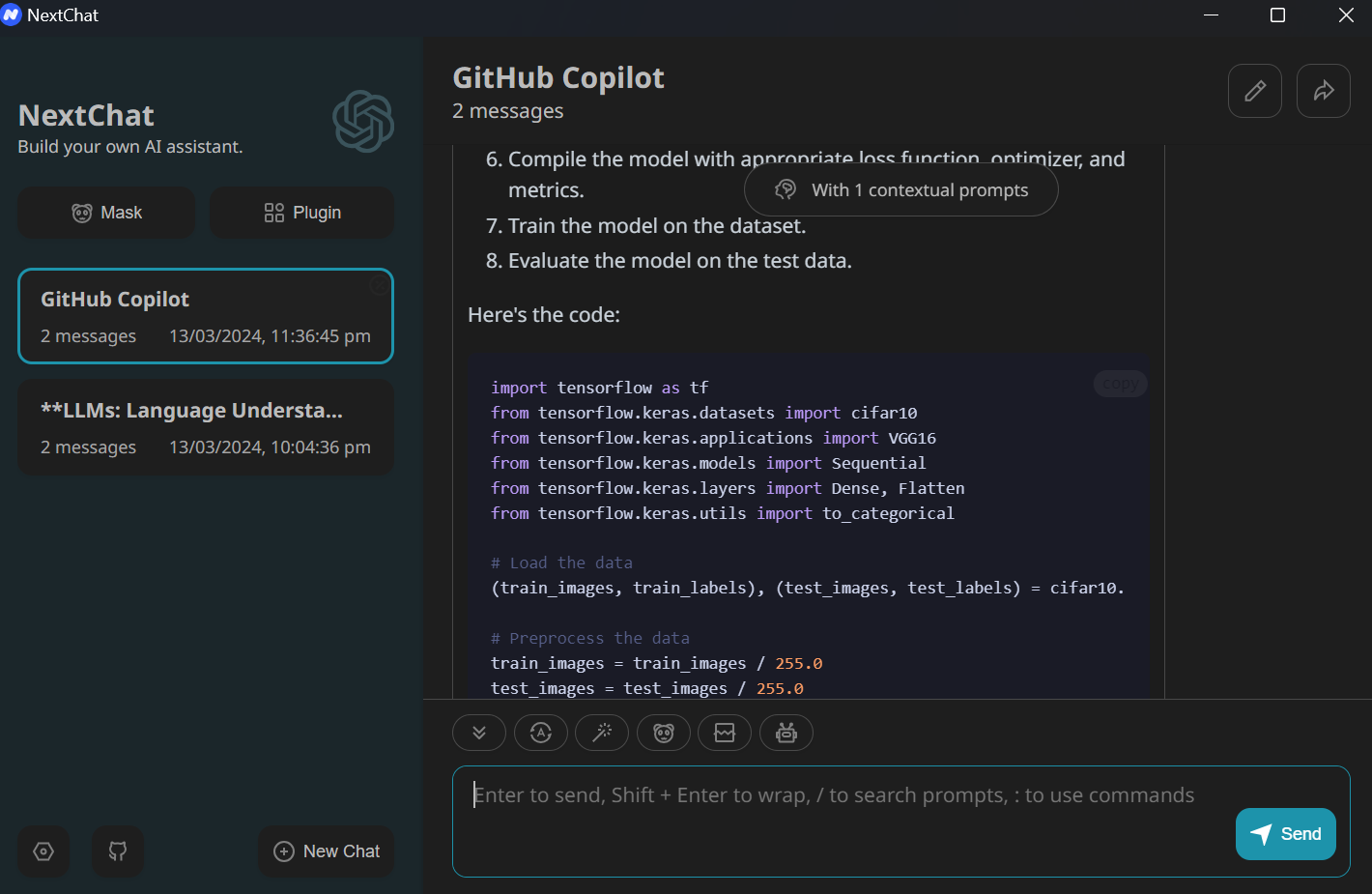

Introduction to ChatGPT Next Web (NextChat)

Learn about NextChat, a versatile open-source application that uses the OpenAI and Google AI APIs to provide a better user experience. It is available as a desktop app and in the browser, and it can also be privately deployed.

Mar 2024 · 7 min read
