Course

What is Google Gemini? Everything You Need To Know About Google’s ChatGPT Rival

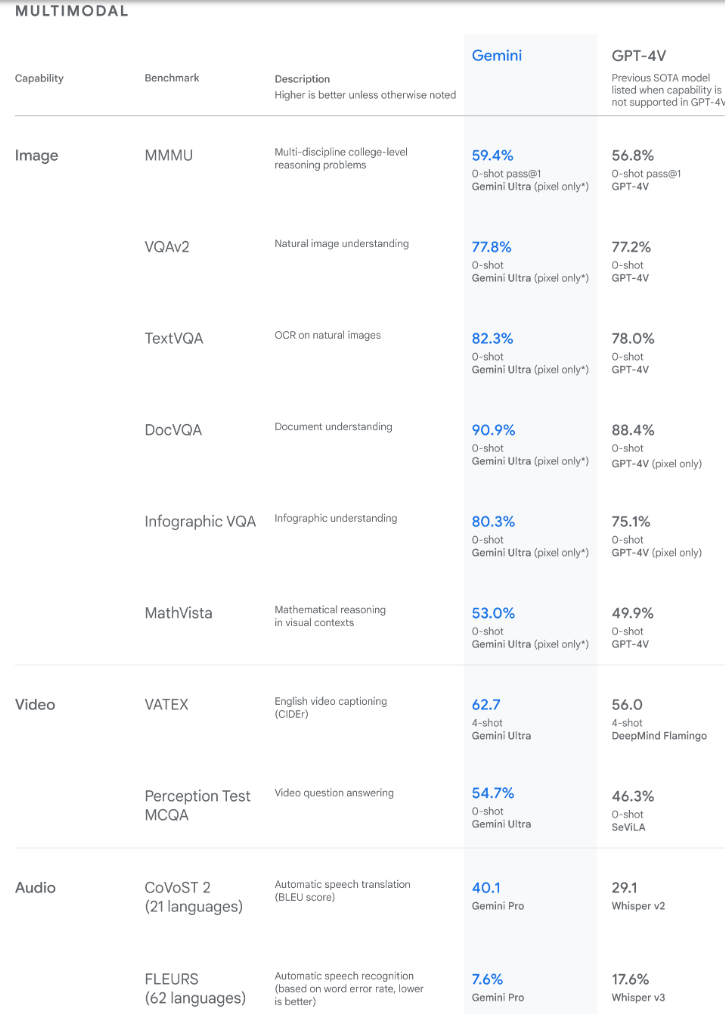

Gemini defines a family of multimodal LLMs capable of understanding texts, images, videos, and audio. It’s also said to be capable of performing complex tasks in math and physics, as well as being able to generate high-quality code in several programming languages.

Updated Jan 2024 · 9 min read

Start Your AI Journey Today!

2 hr

15.1K

Course

AI Ethics

1 hr

6.3K

Course

Generative AI for Business

1 hr

2.2K

See More

RelatedSee MoreSee More

How to Become a Prompt Engineer: A Comprehensive Guide

A step-by-step guide to becoming a prompt engineer: skills required, top courses to take, with career advancement tips.

Srujana Maddula

9 min

Generative AI Certifications in 2024: Options, Certificates and Top Courses

Unlock your potential with generative AI certifications. Explore career benefits and our guide to advancing in AI technology. Elevate your career today.

Adel Nehme

6 min

[AI and the Modern Data Stack] Accelerating AI Workflows with Nuri Cankaya, VP of AI Marketing & La Tiffaney Santucci, AI Marketing Director at Intel

Richie, Nuri, and La Tiffaney explore AI’s impact on marketing analytics, how AI is being integrated into existing products, the workflow for implementing AI into business processes and the challenges that come with it, the democratization of AI, what the state of AGI might look like in the near future, and much more.

Richie Cotton

52 min

Becoming Remarkable with Guy Kawasaki, Author and Chief Evangelist at Canva

Richie and Guy explore the concept of being remarkable, growth, grit and grace, the importance of experiential learning, imposter syndrome, finding your passion, how to network and find remarkable people, measuring success through benevolent impact and much more.

Richie Cotton

55 min

Building Intelligent Applications with Pinecone Canopy: A Beginner's Guide

Explore using Canopy as an open-source Retrieval Augmented Generation (RAG) framework and context built on top of the Pinecone vector database.

Kurtis Pykes

12 min

Semantic Search with Pinecone and OpenAI

A step-by-step guide to building semantic search applications using OpenAI and Pinecone in Python.

Moez Ali

13 min