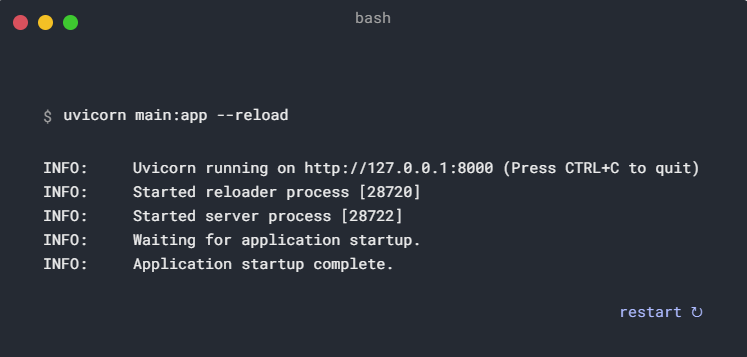

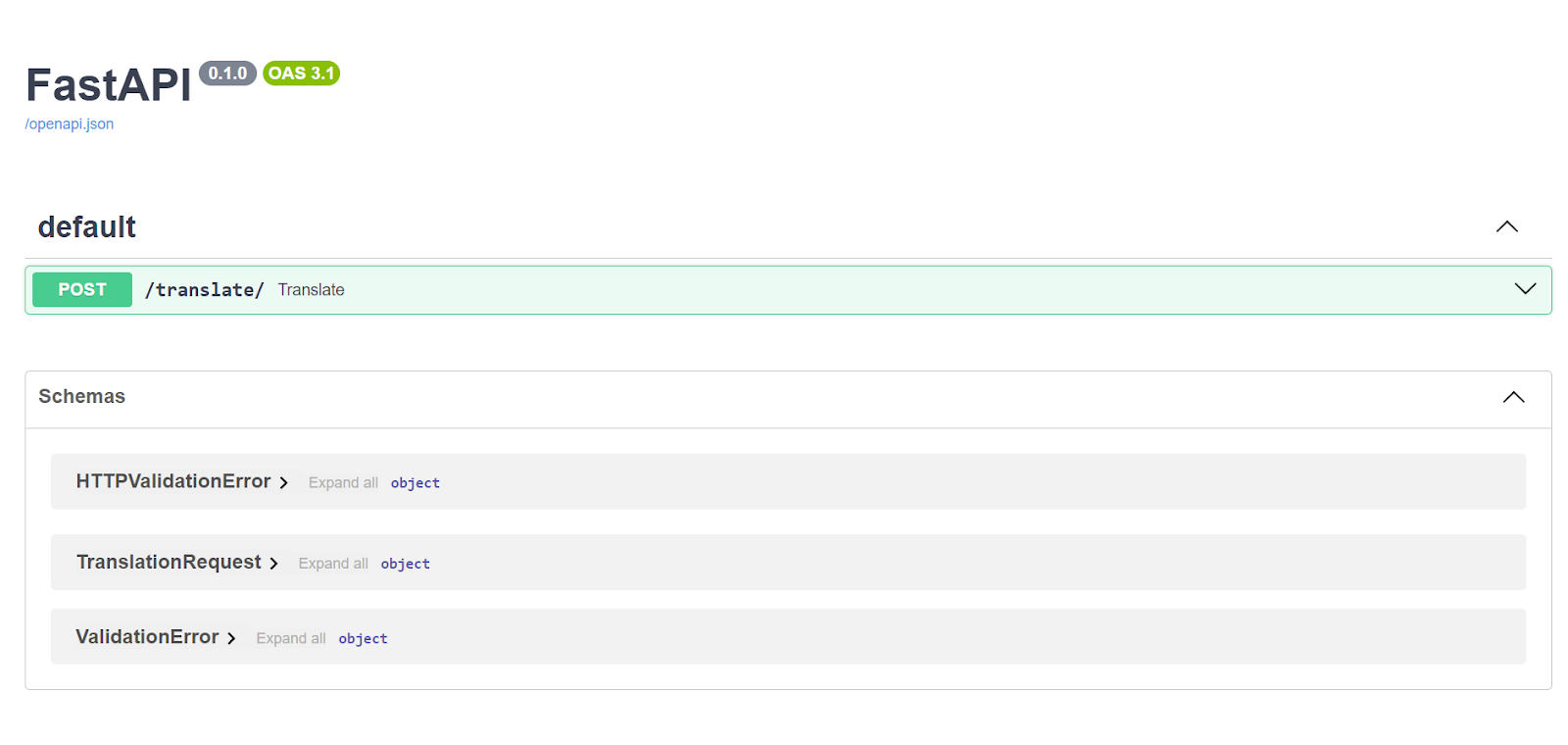

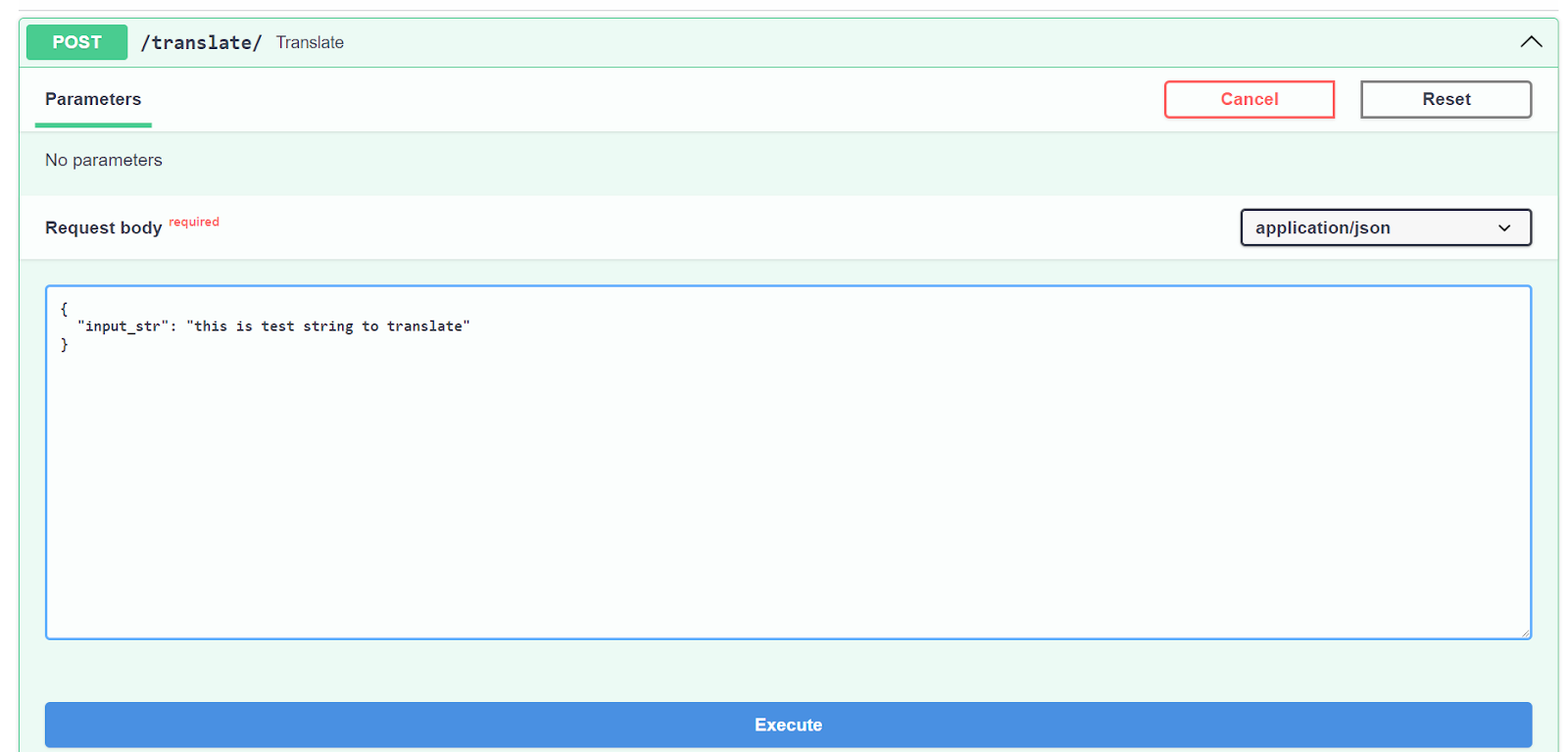

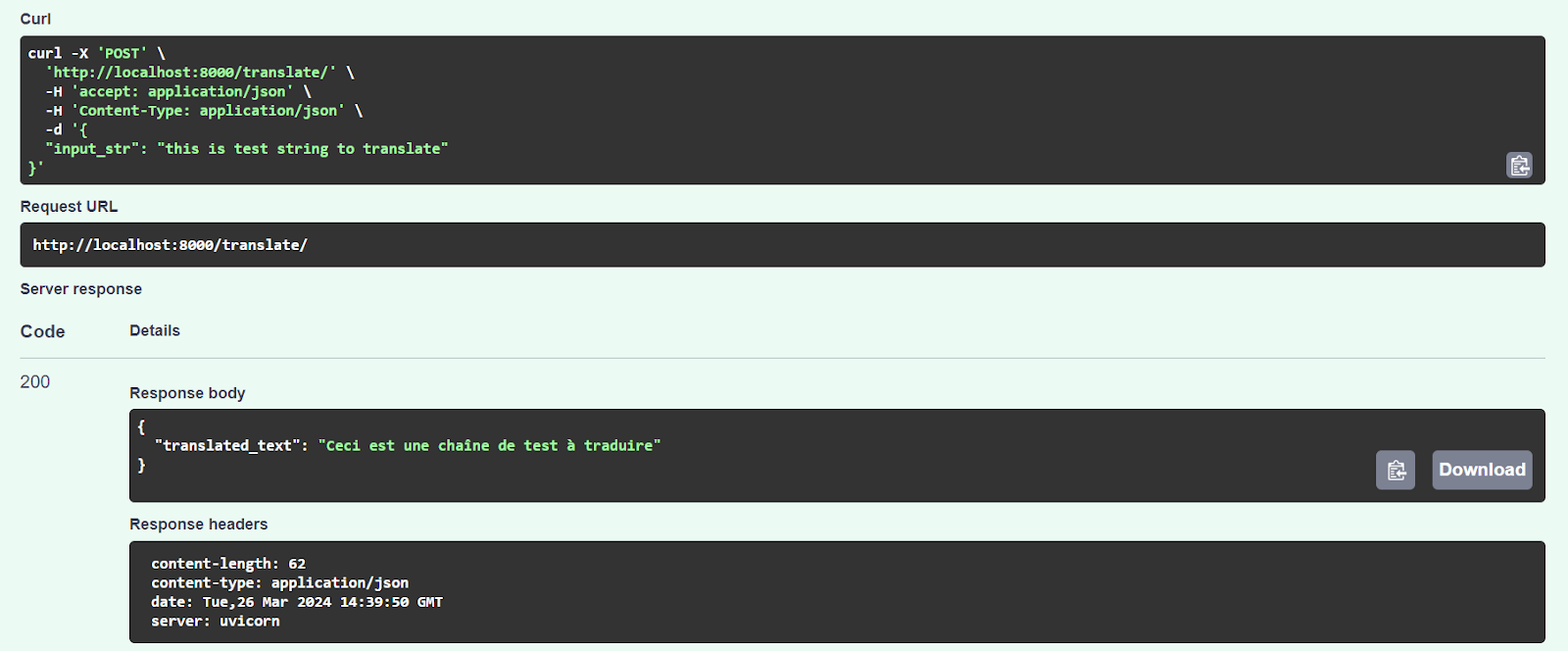

Serving an LLM Application as an API Endpoint using FastAPI in Python

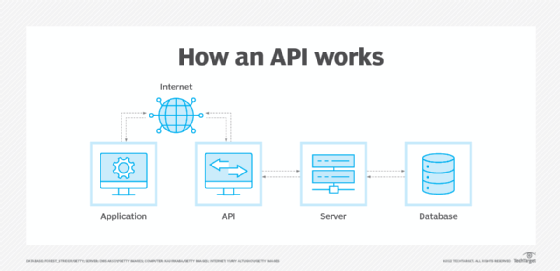

Learn how to bring Large Language Models (LLMs) into your applications with our latest blog on "Serving LLM Application as an API Endpoint Using FastAPI in Python." LLMs like GPT, Claude, and LLaMA are changing how chatbots, content creation, and many other use cases are built. Discover how APIs act as the bridge that lets you integrate language understanding and generation features into your projects.

Apr 2024

course

Developing LLM Applications with LangChain

4 hours

3.4K

course

Introduction to LLMs in Python

4 hours

3.9K

Related

cheat sheet

The OpenAI API in Python

ChatGPT and large language models have taken the world by storm. In this cheat sheet, learn the basics of how to leverage one of the most powerful AI APIs out there, the OpenAI API.

Richie Cotton

3 min

tutorial

How to Build LLM Applications with LangChain Tutorial

Explore the untapped potential of Large Language Models with LangChain, an open-source Python framework for building advanced AI applications.

Moez Ali

12 min

tutorial

How to Train a LLM with PyTorch

Master the process of training large language models using PyTorch, from initial setup to final implementation.

Zoumana Keita

8 min

tutorial

An Introductory Guide to Fine-Tuning LLMs

Fine-tuning Large Language Models (LLMs) has revolutionized Natural Language Processing (NLP), offering unprecedented capabilities in tasks like language translation, sentiment analysis, and text generation. This transformative approach leverages pre-trained models like GPT-2, enhancing their performance on specific domains through the fine-tuning process.

Josep Ferrer

12 min

code-along

Introduction to Large Language Models with GPT & LangChain

Learn the fundamentals of working with large language models and build a bot that analyzes data.

Richie Cotton

code-along

Chat with Your Documents Using GPT & LangChain

In this code-along, Andrea Valenzuela, Computing Engineer at CERN, and Josep Ferrer Sanchez, Data Scientist at the Catalan Tourist Board, will walk you through building an AI system that can query your documents & data using LangChain & the OpenAI API.

Andrea Valenzuela