Course

Introducing Google Gemini API: Discover the Power of the New Gemini AI Models

Learn how to use Gemini Python API and its various functions to build AI-enabled applications for free.

Updated Jan 2024 · 13 min read

Start Your AI Journey Today!

2 hr

13.4K

Course

Working with the OpenAI API

3 hr

8.9K

Course

ChatGPT Prompt Engineering for Developers

4 hr

3.2K

See More

RelatedSee MoreSee More

blog

You’re invited! Join us for Radar: AI Edition

Join us for two days of events sharing best practices from thought leaders in the AI space

DataCamp Team

2 min

blog

What is Llama 3? The Experts' View on The Next Generation of Open Source LLMs

Discover Meta’s Llama3 model: the latest iteration of one of today's most powerful open-source large language models.

Richie Cotton

5 min

podcast

How Walmart Leverages Data & AI with Swati Kirti, Sr Director of Data Science at Walmart

Swati and Richie explore the role of data and AI at Walmart, how Walmart improves customer experience through the use of data, supply chain optimization, demand forecasting, scaling AI solutions, and much more.

Richie Cotton

31 min

podcast

Creating an AI-First Culture with Sanjay Srivastava, Chief Digital Strategist at Genpact

Sanjay and Richie cover the shift from experimentation to production seen in the AI space over the past 12 months, how AI automation is revolutionizing business processes at GENPACT, how change management contributes to how we leverage AI tools at work, and much more.

Richie Cotton

36 min

tutorial

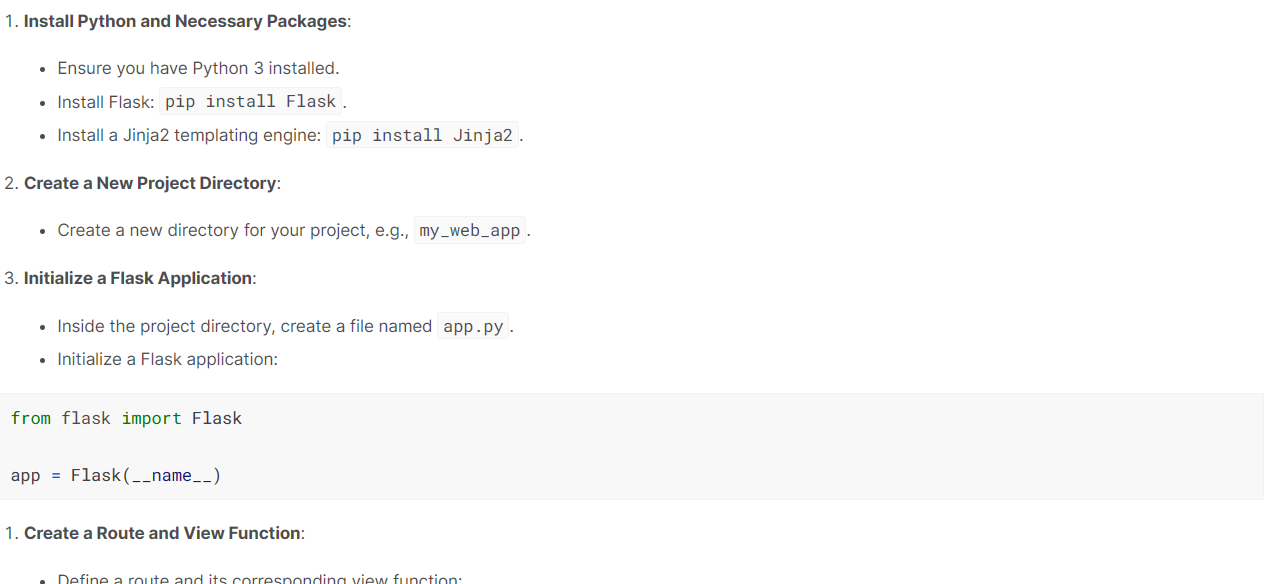

Serving an LLM Application as an API Endpoint using FastAPI in Python

Unlock the power of Large Language Models (LLMs) in your applications with our latest blog on "Serving LLM Application as an API Endpoint Using FastAPI in Python." LLMs like GPT, Claude, and LLaMA are revolutionizing chatbots, content creation, and many more use-cases. Discover how APIs act as crucial bridges, enabling seamless integration of sophisticated language understanding and generation features into your projects.

Moez Ali

tutorial

How to Improve RAG Performance: 5 Key Techniques with Examples

Explore different approaches to enhance RAG systems: Chunking, Reranking, and Query Transformations.

Eugenia Anello