
Speakers

Related

webinar

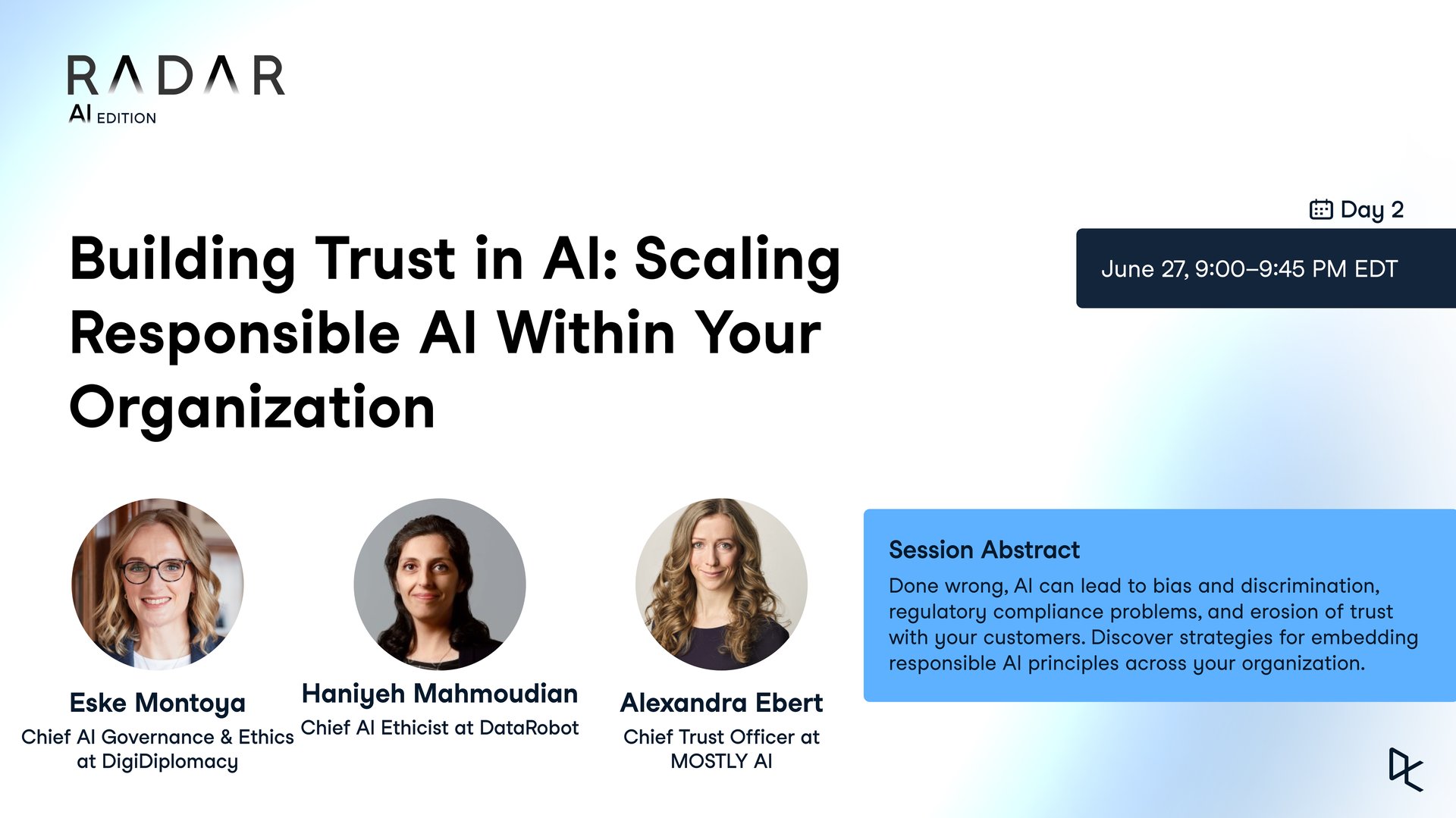

What Leaders Need to Know About Implementing AI Responsibly

Richie interviews two world-renowned thought leaders on responsible AI. You'll learn about the principles of responsible AI, the consequences of irresponsible AI, and best practices for implementing responsible AI across your organization.

webinar

Data Literacy for Responsible AI

The role of data literacy as the basis for scalable, trustworthy AI governance.

webinar

Driving AI Literacy in Organizations

Gain insight into the growing importance of AI literacy and its role in driving success for modern organizations.

webinar

Building an AI Strategy: Key Steps for Aligning AI with Business Goals

Experts unpack the key steps for building a comprehensive AI strategy that aligns with your organization's objectives.

webinar

Leading with AI: Leadership Insights on Driving Successful AI Transformation

C-level leaders from industry and government will explore how they're harnessing AI to propel their organizations forward.

webinar

Getting ROI from AI

In this webinar, Cal shares lessons learned from real-world examples about how to safely implement AI in your organization.